OpenVINO deploying DeepSeek-R1 Model Server (OVMS) on Bare metal Windows AIPC

Authors: Kunda Xu, Sapala, Rafal A

DeepSeek-R1 is an open-source reasoning model developed by DeepSeek to address tasks requiring logical inference, mathematical problem-solving, and real-time decision-making. With DeepSeek-R1,you can follow its logic, making it easier to understand and, if necessary, challenge its output. This capability gives reasoning models an edge in fields where outcomes need to be explainable, like research or complex decision-making.

Distillation in AI creates smaller, more efficient models from larger ones, preserving much of their reasoning power while reducing computational demands. DeepSeek applied this technique to create a suite of distilled models from R1, using Qwen and Llama architectures. That allows us to try DeepSeek-R1 capability locally on usual laptops (AIPC).

In this tutorial, we consider deploy deepseek-ai/DeepSeek-R1-Distill-Qwen-7B as a model server on Intel AIPC or AI work station with Windows OS to perform request generation tasks.

Requirements:

- Windows11

- VC_redist : Microsoft Visual C++ Redistributable

- Intel iGPU or ARC GPU: Intel® Arc™ & Iris® Xe Graphics - Windows*

- OpenVINO >=2025.0 : https://github.com/openvinotoolkit/openvino/releases/tag/2025.0.0

- OVMS >=2025.0 : https://github.com/openvinotoolkit/model_server/releases/tag/v2025.0

QuickStart Guide

Step 1. Install python dependencies for the conversion script:

Step 2. Run optimum-cli to download and quantize the model:

If your network access to HuggingFace is unstable, you can try to use a proxy image to pull the model.

Step 3. Deploying Model Server (OVMS) on Bare metal

Download and unpack model server archive for Windows:

Run setupvars script to set required environment variables

Step 4. DeepSeek-R1 model server deploy

Bare metal Host deploy. Required: deploying ovms on Bera metal.

OpenVINO + OVMS can also use Docker contain deploying. Required: Docker engine installed

When using docker as a deployment method, you need to consider whether the hardware performance of the machine is sufficient, because docker contain will also generate additional memory overhead.

For example, when deploying on a laptop or AIPC, due to the limited memory resources, it is more reasonable to use bare metal deployment method

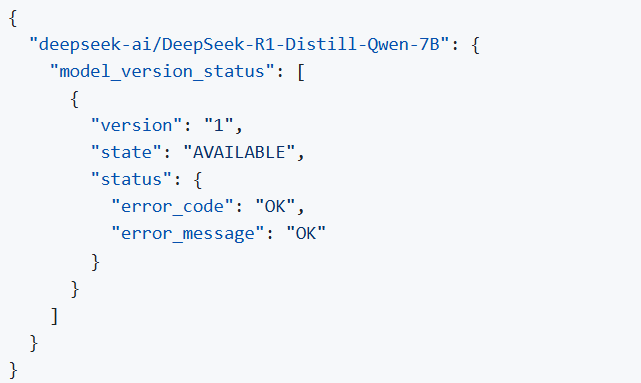

Step 5. Check readiness Wait for the model to load.

You can check the status with a simple command

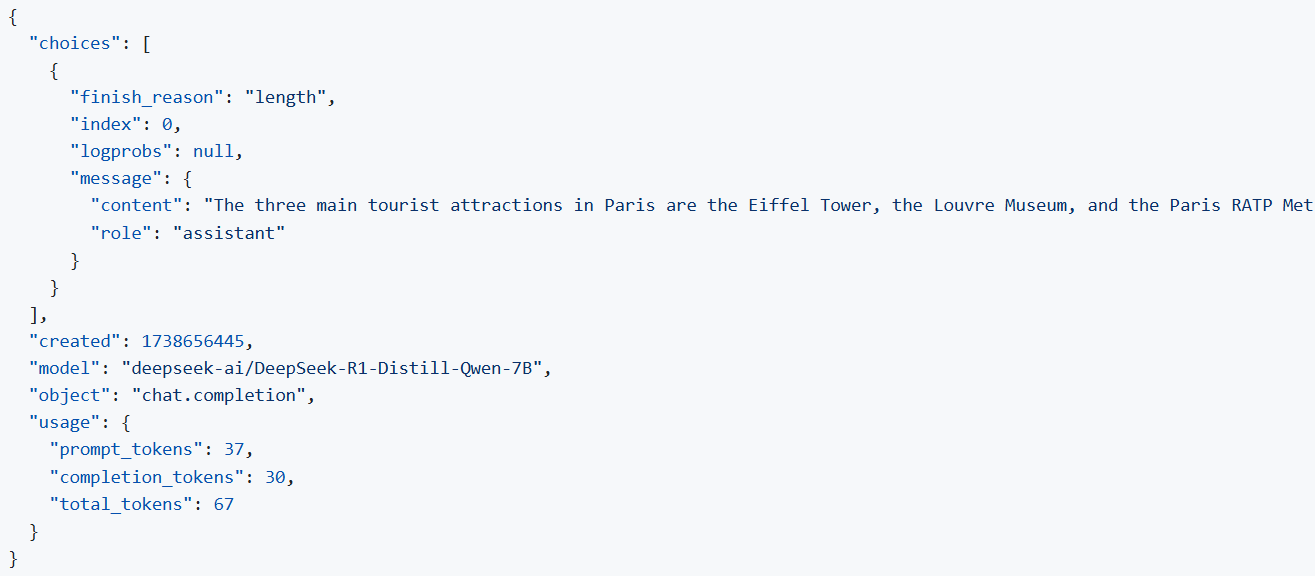

Step 6. Run model server generation

Create a file called request.json ,

and copy the following content into it

You will get the output like the following.

Note: If you want to get the response chunks streamed back as they are generated change stream parameter in the request to true

.png)