Benchmark_app Servers Usage Tips with Low-level Setting for CPU

This post provides tips for using Benchmark_app in different situations, such as on a VPS and on bare metal servers. The tips aim at practical evaluation with limited hardware resources and help customers quickly balance performance against hardware resource requirements when deploying AI on servers. Besides suggestions for the different levels of settings on the device, the evaluation tables support analysis and verification.

The benchmark app provides various options for configuring execution parameters. For instance, it lets users provide high-level “performance hints” that select latency-focused or throughput-focused inference modes. The official online documentation gives a detailed introduction from a technical perspective. To further reduce the learning cost, this blog provides guidance for actual customer deployment scenarios. Besides the high-level hints, it discusses low-level settings, such as the number of streams, together with numactl on the CPU.

Benchmark_app Tips for CPU On VPS

Tip 1: For initial model testing, use the high-level settings of the Python version of Benchmark_app directly, without numactl.

Python version Benchmark_app Tool:

In this case, the user is mainly testing the feasibility of the model. The Python benchmark_app is recommended for fast testing because it is installed automatically when you install OpenVINO Developer Tools from PyPI. The C++ Benchmark Tool, in contrast, needs to be built by following the “Build the Sample Applications” instructions.

For basic usage, the options “-m” PATH_TO_MODEL and “-d” TARGET_DEVICE are enough. If further debugging is required, all configuration options, such as “-pc”, are described in the official documentation.

numactl:

numactl controls the non-uniform memory access (NUMA) policy for processes or shared memory, and is used here as a global CPU control tool.

In this scenario, we recommend not using numactl. A VPS may not expose the real hardware information, so the CPU cannot be bound through numactl.

The following parts will describe how and when to use it.

High-level setting:

The preferred way to configure performance for the first time is to use performance hints, which allow simple and quick deployment. The high-level setting means using the performance hint option “-hint” to select a latency-focused or throughput-focused inference mode. The hint causes the runtime to automatically adjust parameters such as the number of processing streams and the inference batch size.
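As a minimal sketch of such a first run, the helper below assembles a benchmark_app command line from the options mentioned so far (“-m”, “-d” and “-hint”). The function name and the file name model.xml are hypothetical placeholders, and the accepted hint values are limited to the ones named in this post. The command is only printed, not executed.

```python
import shlex

# Hint values named in this post; the tool may accept others.
KNOWN_HINTS = ("latency", "throughput", "none")


def build_hint_cmd(model_path, device="CPU", hint="throughput"):
    """Return the argument list for a high-level (hint-based) run."""
    if hint not in KNOWN_HINTS:
        raise ValueError(f"unexpected hint: {hint!r}")
    return ["benchmark_app", "-m", model_path, "-d", device, "-hint", hint]


# "model.xml" is a placeholder path, not a file from this post.
print(shlex.join(build_hint_cmd("model.xml", hint="latency")))
```

The resulting list can be passed to subprocess.run once a real model path is filled in.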

Low-level setting:

The corresponding explicit configuration of streams and batch is the low-level setting. In the new release 22.3 LTS, users need to disable the hints of Benchmark_app to try low-level settings such as nstreams and nthreads.

Note:

- The device cannot be set to AUTO when -nstreams is set.

- However, in contrast to benchmark_app, the API still lets you combine the hints with individual low-level settings.
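The second note can be illustrated with a short Python sketch. It is not the original code from this post: it assumes the OpenVINO 2022.x Python API (openvino.runtime.Core) and the property names PERFORMANCE_HINT, NUM_STREAMS and INFERENCE_NUM_THREADS. The helper names are hypothetical, and OpenVINO is imported only inside compile_on_cpu, so the configuration part runs without it.

```python
def build_cpu_config(hint="THROUGHPUT", num_streams=None, num_threads=None):
    """Combine a performance hint with optional low-level overrides."""
    config = {"PERFORMANCE_HINT": hint}
    if num_streams is not None:
        config["NUM_STREAMS"] = str(num_streams)
    if num_threads is not None:
        config["INFERENCE_NUM_THREADS"] = str(num_threads)
    return config


def compile_on_cpu(model_path, config):
    """Compile a model on the CPU with the given config (needs OpenVINO)."""
    # Assumption: OpenVINO 2022.x Python API; imported lazily on purpose.
    from openvino.runtime import Core

    core = Core()
    model = core.read_model(model_path)
    return core.compile_model(model, "CPU", config)


# Throughput hint plus an explicit stream count in a single config.
print(build_cpu_config(num_streams=4))
```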

The low-level setting without numactl is the same on different kinds of servers. The details are analyzed in the two parts below.

Benchmark_app Tips for CPU On Dedicated Server

Tip 2: For low-level settings on the CPU without numactl, always set nstreams first and then tune nthreads.

- Set nthreads to an integer multiple of nstreams to balance performance against hardware resource requirements.

- The optimal number of inference requests reported by benchmark_app can be used as a reference for further tuning.
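A small sketch of this tuning order: fix nstreams, enumerate the nthreads values that are integer multiples of it, and build the matching command with the hint disabled. The helper names and model.xml are hypothetical, and the upper bound of 16 threads is only an example value.

```python
def candidate_thread_counts(nstreams, max_threads):
    """List nthreads values that are integer multiples of nstreams."""
    if nstreams < 1 or max_threads < nstreams:
        raise ValueError("need 1 <= nstreams <= max_threads")
    return list(range(nstreams, max_threads + 1, nstreams))


def low_level_cmd(model_path, nstreams, nthreads):
    """Build a benchmark_app command that uses low-level settings only."""
    if nthreads % nstreams != 0:
        raise ValueError("nthreads should be an integer multiple of nstreams")
    return [
        "benchmark_app", "-m", model_path, "-d", "CPU",
        "-hint", "none",  # hints are disabled to allow low-level settings
        "-nstreams", str(nstreams),
        "-nthreads", str(nthreads),
    ]


# Try every valid thread count for 4 streams on a 16-thread budget.
for nthreads in candidate_thread_counts(4, 16):
    print(" ".join(low_level_cmd("model.xml", 4, nthreads)))
```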

On Dedicated Server:

Compared with a VPS, a dedicated server gives the user full access to the hardware. It comes as a physical box, not a virtualized slice of server resources. In theory, this means there is no need to worry about performance drops when other users get a spike in traffic. In practice, however, a server is often shared within a group at the same time. It is therefore recommended to use performance hints to determine peak performance first, and then tune to determine a suitable hardware resource requirement. To do so, hardware resources (here, the CPU) need to be set or bound. We recommend doing this with the low-level settings rather than with numactl.

Low-level setting:

Streams are the common configurable mechanism for this device-side parallelism. Internally, every device implements a queue that acts as a buffer, storing inference requests until the device retrieves them at its own pace. A device may process multiple inference requests in parallel to improve device utilization and overall throughput.

Unlike “-nireq”, which depends on the needs of the actual scenario, nthreads and nstreams are parameters that trade off performance and overhead, and nstreams has the higher priority. We verify this conclusion by testing different settings of the C++ version of Benchmark_app with OpenVINO 22.3 LTS on a dedicated server. The C++ version is recommended for benchmarking models that will be used in C++ applications. The C++ and Python tools have a similar command interface and backend.

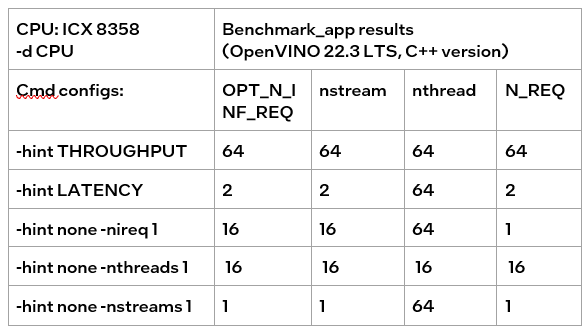

Evaluation: -nireq, -nthreads and -nstreams

According to the results in the table, we can conclude the following:

If only nireq is set, nstreams and nthreads are set automatically by the system according to the hardware. Here, all 64 threads are in the same NUMA node, and CPU cores are distributed evenly between execution streams (4 threads per stream), so nstreams = 64/4 = 16. For a first run, it is recommended not to set nireq. By setting the other parameters of benchmark_app, the optimal number of inference requests can be obtained and used as a reference for further tuning.
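The arithmetic above can be written as a tiny helper. It only reproduces the even split described in this post (64 threads, 4 per stream) and is not the runtime's actual heuristic. The function name is hypothetical.

```python
def streams_for_even_split(total_threads, threads_per_stream):
    """Number of streams when threads are split evenly between streams."""
    if threads_per_stream < 1:
        raise ValueError("threads_per_stream must be at least 1")
    streams, leftover = divmod(total_threads, threads_per_stream)
    if leftover:
        raise ValueError("threads do not divide evenly between streams")
    return streams


print(streams_for_even_split(64, 4))  # 64 / 4 = 16
```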

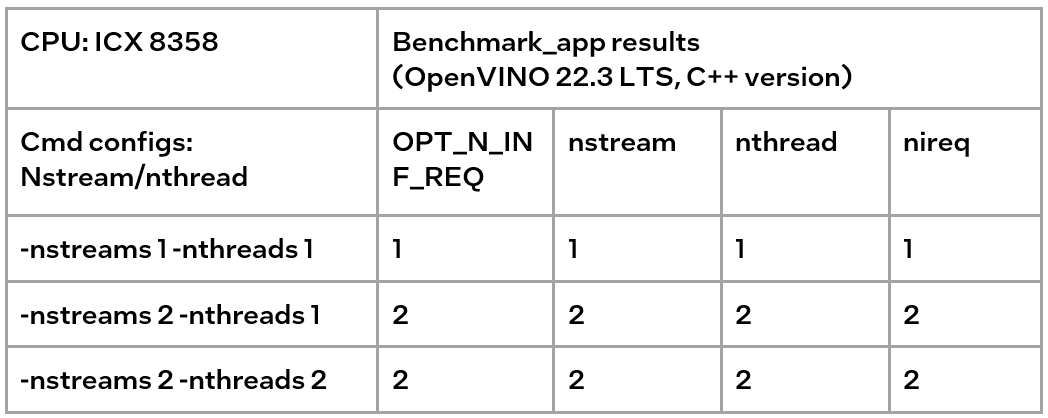

The maximum value of nthreads depends on the number of physical cores. Setting nthreads alone does not make sense. Further tests set two of nireq, nstreams and nthreads at the same time, and the results are listed in the table below.

According to the table, when nthreads and nstreams are set simultaneously, nthreads should be larger than nstreams. Otherwise, nthreads defaults to the value of nstreams. The nthreads setting here is equivalent to binding hardware resources to the streams, and this operation is implemented in the OpenVINO runtime. Users familiar with Linux may use other methods outside OpenVINO to bind hardware resources, namely numactl. As discussed before, this method is not suitable for a VPS. Although it is not recommended, the following sections analyze the use of numactl on bare metal servers.

Tip 3:

numactl is equivalent to the low-level settings on a bare metal server. When using both at the same time, make sure the numactl and low-level settings are consistent.

The premise is that numactl is used correctly, that is, on CPUs of the same socket.

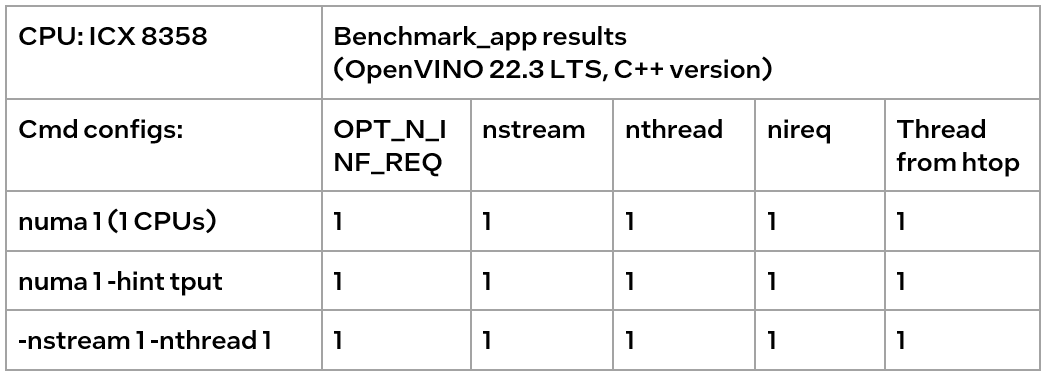

Evaluation: Low-level setting vs numactl

numactl was introduced above, so only its usage is shown here.

numactl -C 0,1 ./benchmark_app -m 'path to your model' -hint none -nstreams 1 -nthreads 1

Note:

“-C” specifies the CPUs you want to bind to. You can check the NUMA nodes with “numactl --hardware”.

The actual CPU usage can be monitored with the htop tool.

We find that using numactl alone, or together with the high-level hint, is equivalent to using the low-level settings. This shows that the principle of binding cores is the same. Although numactl can easily bind cores without any other settings, it cannot change the ratio between nstreams and nthreads.

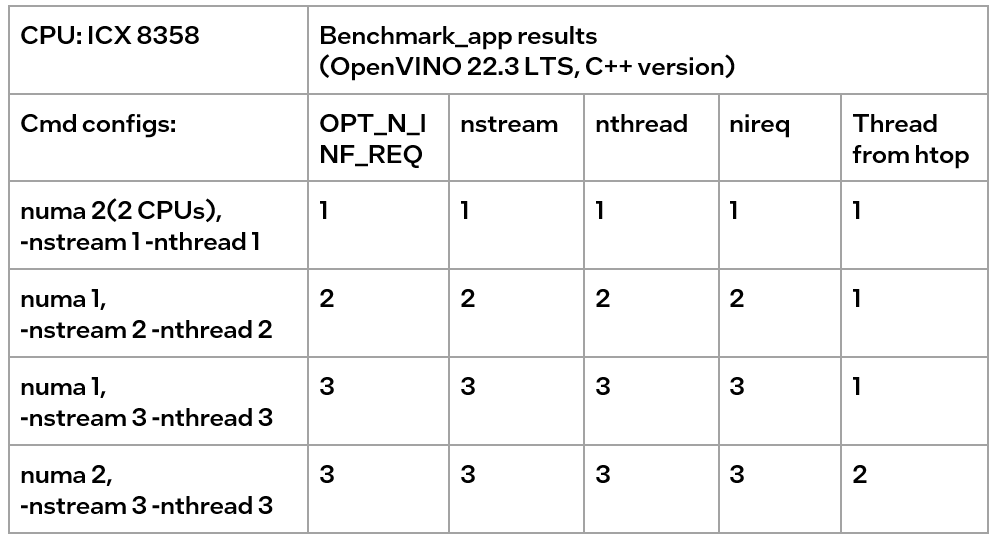

In addition, when numactl and the low-level settings are used simultaneously, inconsistent settings cause problems.

According to the data in the table, we find the following:

- When the number of CPUs set by numactl is greater than nthreads, the number of CPUs used is determined by nthreads, and the Benchmark_app results match the number of CPUs monitored in htop.

- When the number of CPUs set by numactl is less than nthreads, htop shows that the number of CPUs in use equals the number controlled by numactl, but an error message appears in the Benchmark_app results, and the latency is exactly a multiple of nstreams.

The reason is that numactl is a global control that benchmark_app cannot correct for, so the latency equals the result of repeated operations. The printed result is then inconsistent with the actual monitoring and is therefore meaningless.
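One way to catch the second case before running a benchmark is to compare the number of CPUs in the numactl “-C” list with nthreads. The sketch below is a hypothetical pre-check, not part of OpenVINO or numactl. It handles only plain comma-separated CPU numbers and ranges such as 0-3, and not the other forms numactl accepts.

```python
def parse_cpu_list(spec):
    """Expand a CPU list such as "0,1" or "0-3,8" into sorted CPU ids."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            start, end = part.split("-", 1)
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def binding_is_consistent(cpu_spec, nthreads):
    """True if numactl binds at least as many CPUs as nthreads requests."""
    return len(parse_cpu_list(cpu_spec)) >= nthreads


print(binding_is_consistent("0,1", 1))  # 2 CPUs bound, 1 thread requested
print(binding_is_consistent("0,1", 4))  # 2 CPUs bound, 4 threads requested
```

The first call reports a consistent setup and the second flags the problematic case described above, where fewer CPUs are bound than nthreads requests.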

Summary:

Tip 1: For initial model testing on a VPS, use the high-level settings of the Python version of Benchmark_app directly, without numactl.

Tip 2: To balance performance and hardware requirements on a bare metal server, use the low-level settings for the CPU without numactl. Always set nstreams first and then tune nthreads.

Tip 3: numactl is equivalent to the low-level settings on a bare metal server. When using both at the same time, make sure the numactl and low-level settings are consistent.
