
Enable OpenVINO™ Optimization for WeNet

Introduction

WeNet provides a two-pass approach that unifies streaming and non-streaming end-to-end (E2E) speech recognition, and it is widely used on various hardware platforms. In this blog, we present the OpenVINO™ optimization for WeNet on Intel® platforms.

The public WeNet project is available at: wenet-e2e/wenet

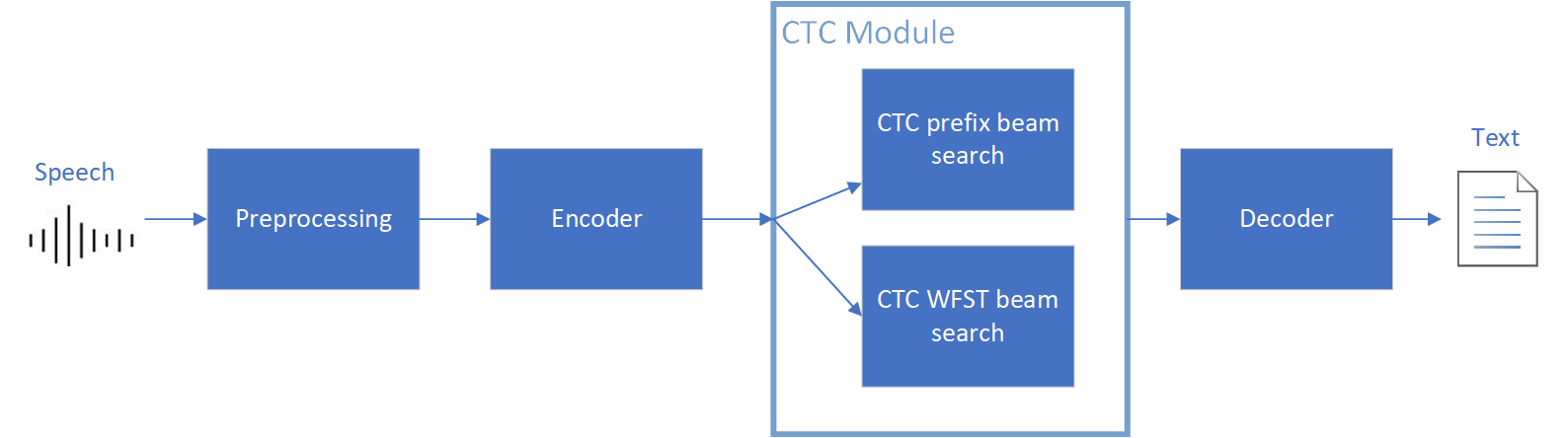

The WeNet model can be considered a pipeline split into three parts: encoder, CTC and decoder. The model structure is shown in the picture below:

We implement a wrapper for the Automatic Speech Recognition (ASR) model class using the OpenVINO™ runtime API, which handles data preparation and inference for these three models. The integrated OpenVINO™ optimization is available in the official project: wenet-e2e/wenet/runtime/openvino
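To make the idea of wrapping three models concrete, here is a minimal sketch using the OpenVINO™ Python runtime API. It is an illustration only, not the wrapper in the WeNet repository (which is written in C++). The file names `encoder.xml`, `ctc.xml` and `decoder.xml`, the directory layout, and the helper names `ir_paths` and `load_models` are assumptions made for this example.

```python
from pathlib import Path

# The three sub-models named in the text above.
MODEL_NAMES = ("encoder", "ctc", "decoder")


def ir_paths(model_dir):
    """Map each sub-model name to its IR .xml path (assumed file layout)."""
    root = Path(model_dir)
    return {name: root / f"{name}.xml" for name in MODEL_NAMES}


def load_models(model_dir, device="CPU"):
    """Read and compile the three IR models with the OpenVINO runtime."""
    # Imported here so the path helper above works without OpenVINO installed.
    from openvino.runtime import Core

    core = Core()
    compiled = {}
    for name, xml_path in ir_paths(model_dir).items():
        # The matching .bin weights file is looked up next to the .xml file.
        model = core.read_model(str(xml_path))
        compiled[name] = core.compile_model(model, device)
    return compiled
```

Calling `load_models("ir")` would return one compiled model per pipeline stage. Data preparation and the two-pass decoding logic are left out of this sketch.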

OpenVINO™ backend on WeNet

In this project, you do not need to download OpenVINO™ and build the library with the WeNet project manually. The build already integrates downloading, compiling and linking the OpenVINO™ runtime library. If your operating system is not one of those supported by the OpenVINO™ runtime library, the script downloads the OpenVINO™ source from GitHub and builds it with the CPU plugin.

At present, this repository has been optimized and validated with OpenVINO™ 2022.3.0. The following operating systems are supported directly by the OpenVINO™ runtime library:

- Windows* 10

- CentOS 7, Red Hat* Enterprise Linux* 8

- Ubuntu* 18.04, 20.04

- Debian 9.13 for X86

- macOS* 10.15

Step 1: Get pretrained ONNX model (Optional)

If you already have the exported ONNX models for testing WeNet, you can skip this step.

To get a pretrained model from the WeNet project, refer to this link:

https://github.com/wenet-e2e/wenet/blob/main/docs/pretrained_models.en.md

Export three ONNX models (encoder.onnx, ctc.onnx and decoder.onnx) with the export_onnx_cpu script.
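As a rough sketch of how the export step could be scripted, the helper below assembles a command line for the export_onnx_cpu script. The flag names (`--config`, `--checkpoint`, `--chunk_size`, `--output_dir`, `--num_decoding_left_chunks`) follow the WeNet ONNX export documentation as we understand it and should be treated as assumptions: verify them against the script's `--help` output for your WeNet version. The file names `train.yaml` and `final.pt` and the helper name `build_export_command` are placeholders.

```python
import sys


def build_export_command(config, checkpoint, output_dir,
                         chunk_size=16, num_decoding_left_chunks=-1):
    """Build (but do not run) the argument list for the ONNX export script.

    Assumes the command is launched from the WeNet repository root so that
    the module path wenet.bin.export_onnx_cpu can be resolved.
    """
    return [
        sys.executable, "-m", "wenet.bin.export_onnx_cpu",
        "--config", str(config),
        "--checkpoint", str(checkpoint),
        "--chunk_size", str(chunk_size),
        "--output_dir", str(output_dir),
        "--num_decoding_left_chunks", str(num_decoding_left_chunks),
    ]


# Placeholder inputs; replace them with the files of your pretrained model.
command = build_export_command("train.yaml", "final.pt", "onnx_model")
print(" ".join(command))
```

The printed command can be pasted into a shell, or the list can be passed to `subprocess.run(command, check=True)`.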

Step 2: Convert ONNX model to OpenVINO™ Intermediate Representation (IR)

Make sure the OpenVINO™ runtime library is already installed in your Python environment.

Convert the three ONNX models into IR with the OpenVINO™ Model Optimizer command:
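A minimal sketch of this conversion, assuming the `mo` command-line tool (shipped with the `openvino-dev` pip package) is on the PATH. Only the basic `--input_model` and `--output_dir` options are used here; the WeNet project may pass additional options such as explicit input shapes, so treat this as a starting point rather than the project's exact command. The directory names and the helper names `build_mo_commands` and `convert_all` are hypothetical.

```python
from pathlib import Path

# The three ONNX files produced in Step 1.
MODEL_NAMES = ("encoder", "ctc", "decoder")


def build_mo_commands(onnx_dir, ir_dir):
    """Return one Model Optimizer argument list per ONNX model."""
    onnx_root = Path(onnx_dir)
    return [
        ["mo",
         "--input_model", str(onnx_root / f"{name}.onnx"),
         "--output_dir", str(ir_dir)]
        for name in MODEL_NAMES
    ]


def convert_all(onnx_dir, ir_dir):
    """Run the conversions one after another; stop at the first failure."""
    import subprocess

    for cmd in build_mo_commands(onnx_dir, ir_dir):
        subprocess.run(cmd, check=True)


# Show the commands without executing them.
for cmd in build_mo_commands("onnx_model", "ir_model"):
    print(" ".join(cmd))
```

Each successful run writes an `.xml` and a `.bin` file for the corresponding model into the output directory.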

Step 3: Build WeNet with OpenVINO™ backend

Please refer to the system requirements to check whether your hardware platform is supported by OpenVINO™. The OpenVINO™ library is downloaded and installed during the CMake configuration.

Some users may not be able to download the OpenVINO™ binary package from the server because of firewall or proxy issues. If the CMake script fails to download it, you can download the OpenVINO™ package yourself and put it in the path below:

If you already have an OpenVINO™ runtime that was built manually before building WeNet, you can put the runtime library in the path below:

Step 4: Simple inference test

You can run the inference test as shown below with a speech input audio file (.wav) and a model unit file (.txt):

The OpenVINO™ integration information and the results will be printed out: