Q2'23: Technology update – low precision and model optimization

Authors

Alexander Kozlov, Nikita Savelyev, Nikolay Lyalyushkin, Vui Seng Chua, Pablo Munoz, Alexander Suslov, Andrey Anufriev, Liubov Talamanova, Yury Gorbachev, Nilesh Jain

Summary

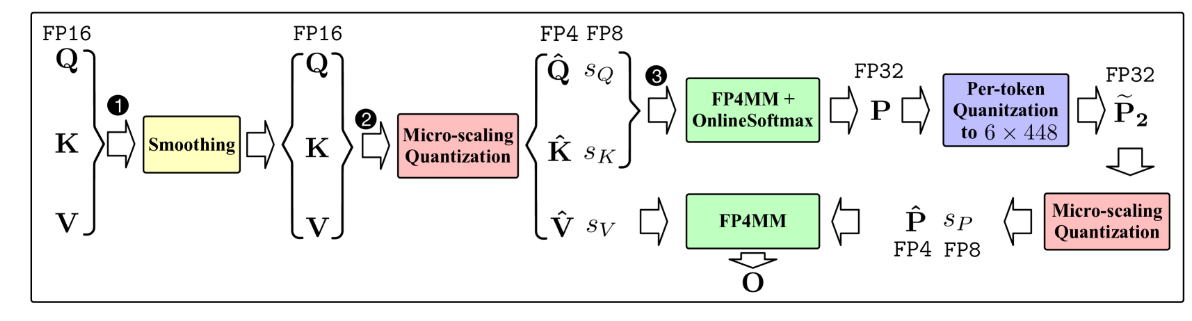

This quarter we observed tremendous interest and breakthroughs in Large Language Model optimization. Most research focuses on low-bit weight quantization (INT4/INT3), which substantially reduces the model footprint and significantly improves inference performance when the corresponding HW kernels are available. There is also increased interest in low-bit floating-point data types such as FP8 and NF4. In addition, we reviewed relevant papers published at the recent CVPR conference and put them into separate subsections for your convenience.

Highlights

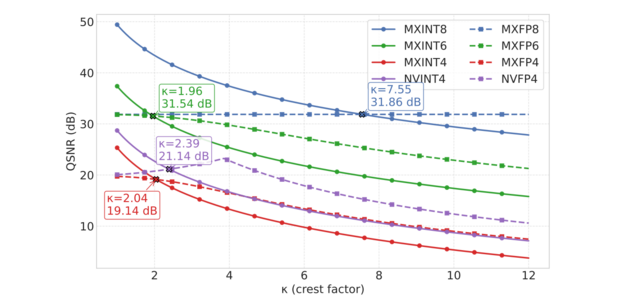

- FP8 versus INT8 for efficient deep learning inference by Qualcomm AI Research (https://arxiv.org/pdf/2303.17951.pdf). A comprehensive study and comparison of FP8 and INT8 precisions for inference. The authors consider various modifications of the FP8 data type and how well they fit inference of different DL models, including Transformer and Convolutional networks. They also consider post-training and quantization-aware training settings and how accurately models map to the data types under consideration. The paper also contains an analysis of the HW efficiency of FP8 and INT8. The main conclusion of the paper is that FP8 types do not provide an optimal solution for low-precision inference compared to INT8 types, especially in edge scenarios. All the existing problems of INT8 inference can be worked around with mixed integer precision INT4-INT8-INT16.

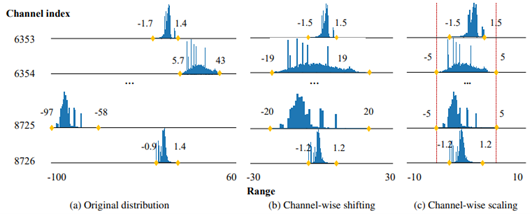

- Outlier Suppression+: Accurate quantization of large language models by equivalent and optimal shifting and scaling by SenseTime and universities of China and US (https://arxiv.org/pdf/2304.09145.pdf). The method continues the idea described in the SmoothQuant method. Besides the per-channel scaling of activations, the authors also adopt a shift operation. They show how these additional operations can be incorporated into the optimized model in a way that does not hurt performance after quantization. Experiments show that applying the method to various language models, including large OPT-family models, allows quantizing them accurately even to precisions lower than 8 bits, e.g. 6 or 4 bits.

- AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration by Song Han Lab (https://arxiv.org/pdf/2306.00978.pdf). The authors propose a weight quantization method for Large Language Models that achieves 4, 3 and 2 bit compression with moderate accuracy degradation. In the paper, they stress the importance of a small portion of the weights (salient weights), usually 0.1-1%. The method focuses on the accurate representation of these salient weights by searching for a quantization scaling factor. It also aligns all the weights using a trick similar to the SmoothQuant method but with a focus on weight unification within each channel. The authors also compare with the latest GPTQ method and provide a way to combine the two methods to achieve ultra-low-bit weight compression (2 bits). The method shows a significant improvement in inference speed (1.45x) compared to vanilla GPTQ. Code is available at: https://github.com/mit-han-lab/llm-awq

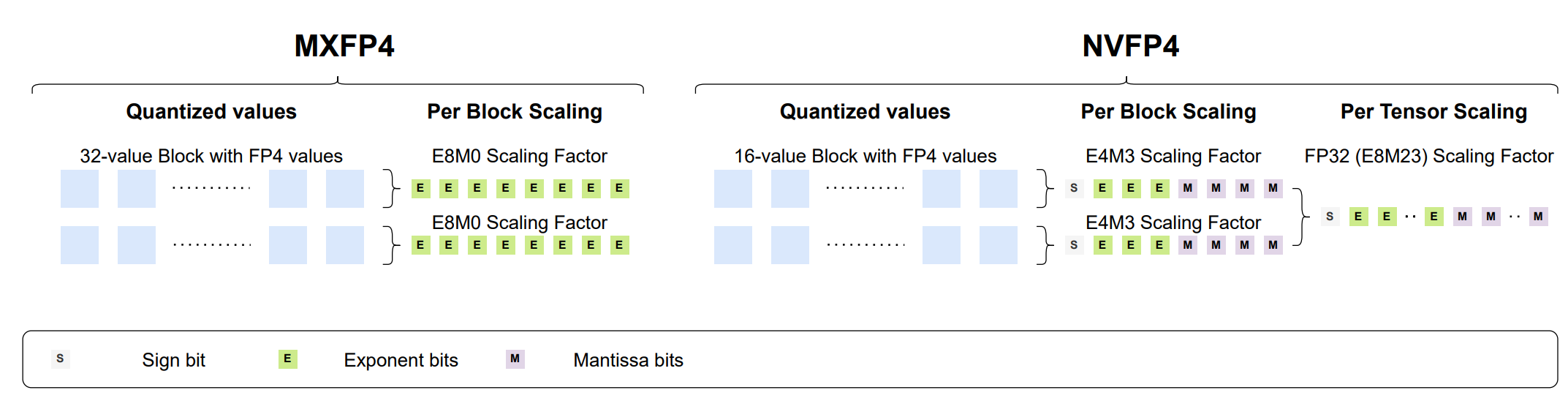

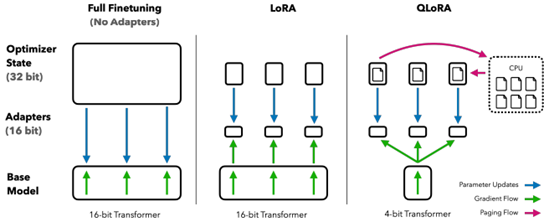

- QLoRA: Efficient Finetuning of Quantized LLMs by University of Washington (https://arxiv.org/pdf/2305.14314.pdf). The paper proposes an effective way to reduce the memory footprint during Large Language Model fine-tuning by quantizing most of the weights to 4 bits. A new NormalFloat 4-bit (NF4) data type, which is information-theoretically optimal for normally distributed weights, is introduced for this purpose. The authors also use so-called double quantization to compress the weight quantization parameters and further reduce the model footprint. Most of the workflow is similar to the LoRA method. The authors also provide CUDA kernels for fast training. The method is used to tune various LLMs and shows good results (sometimes even better than baselines). It is already integrated with Hugging Face: https://huggingface.co/blog/4bit-transformers-bitsandbytes.

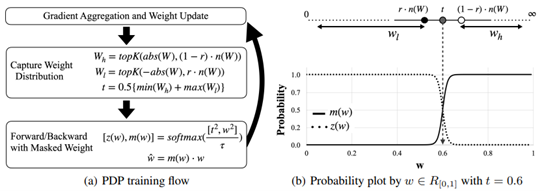

- PDP: Parameter-free Differentiable Pruning is All You Need by Apple Inc. (https://arxiv.org/pdf/2305.11203.pdf). The paper introduces a very simple and compelling idea for computing a differentiable threshold to obtain the pruning mask based on the desired pruning ratio. The method does this on the fly in a way that is more efficient and stable for training, so that the decision about which weights to prune and which to keep is made during almost the whole training process. The method can be applied in a structured and an unstructured way and achieves superior results on both Conv and Transformer-based models.
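
The equivalent shifting and scaling mentioned in the Outlier Suppression+ item above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the shift `z` and scale `s` are chosen here with a simple min/max heuristic (an assumption for illustration, whereas the paper searches for optimal values), and all variable names are hypothetical. The sketch only shows that the transformed activations, together with adjusted weights and bias, reproduce the output of the original linear layer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy linear layer y = x @ W + b with one outlier activation channel.
x = rng.normal(size=(8, 4))
x[:, 2] = x[:, 2] * 20.0 + 50.0       # channel 2 has a large range and offset
W = rng.normal(size=(4, 3))
b = rng.normal(size=3)

# Per-channel shift and scale (min/max heuristic, assumed for illustration).
z = (x.max(axis=0) + x.min(axis=0)) / 2.0                     # channel centers
s = np.maximum((x.max(axis=0) - x.min(axis=0)) / 2.0, 1e-8)   # channel half-ranges

# Shifted and scaled activations: every channel now lies in [-1, 1].
x_t = (x - z) / s

# Fold the inverse operations into the following weights and bias.
W_t = s[:, None] * W      # row j of W is multiplied by s[j]
b_t = b + z @ W           # the shift is absorbed by the bias

y_ref = x @ W + b
y_eq = x_t @ W_t + b_t    # same output, but with well-behaved activations
```

Because the compensation is folded into `W_t` and `b_t` ahead of time, the transformation adds no extra operations to this layer at inference; in a real model the shift and scale on the activation side would likewise have to be merged into the preceding operation.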

Papers with notable results

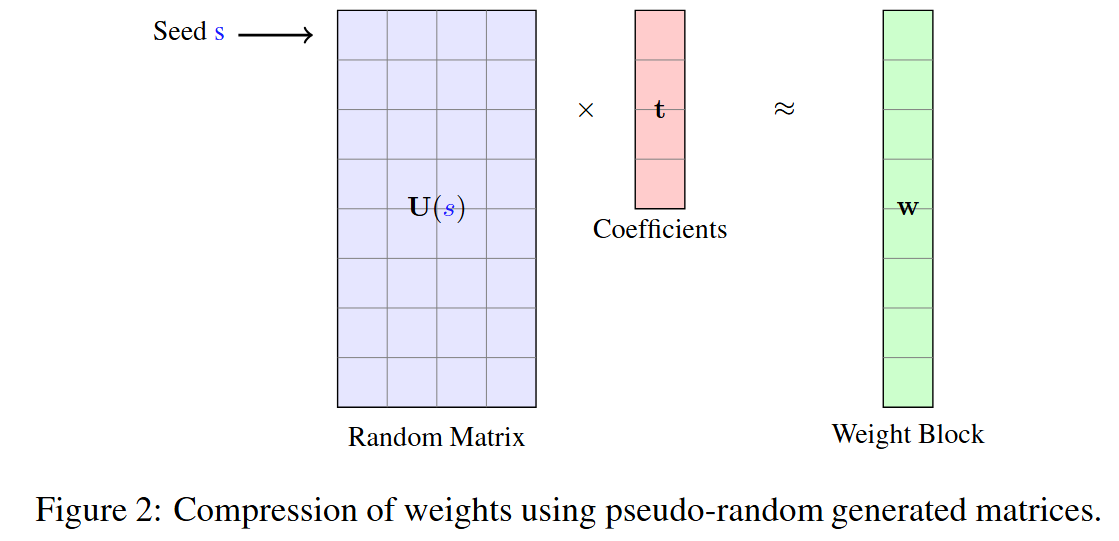

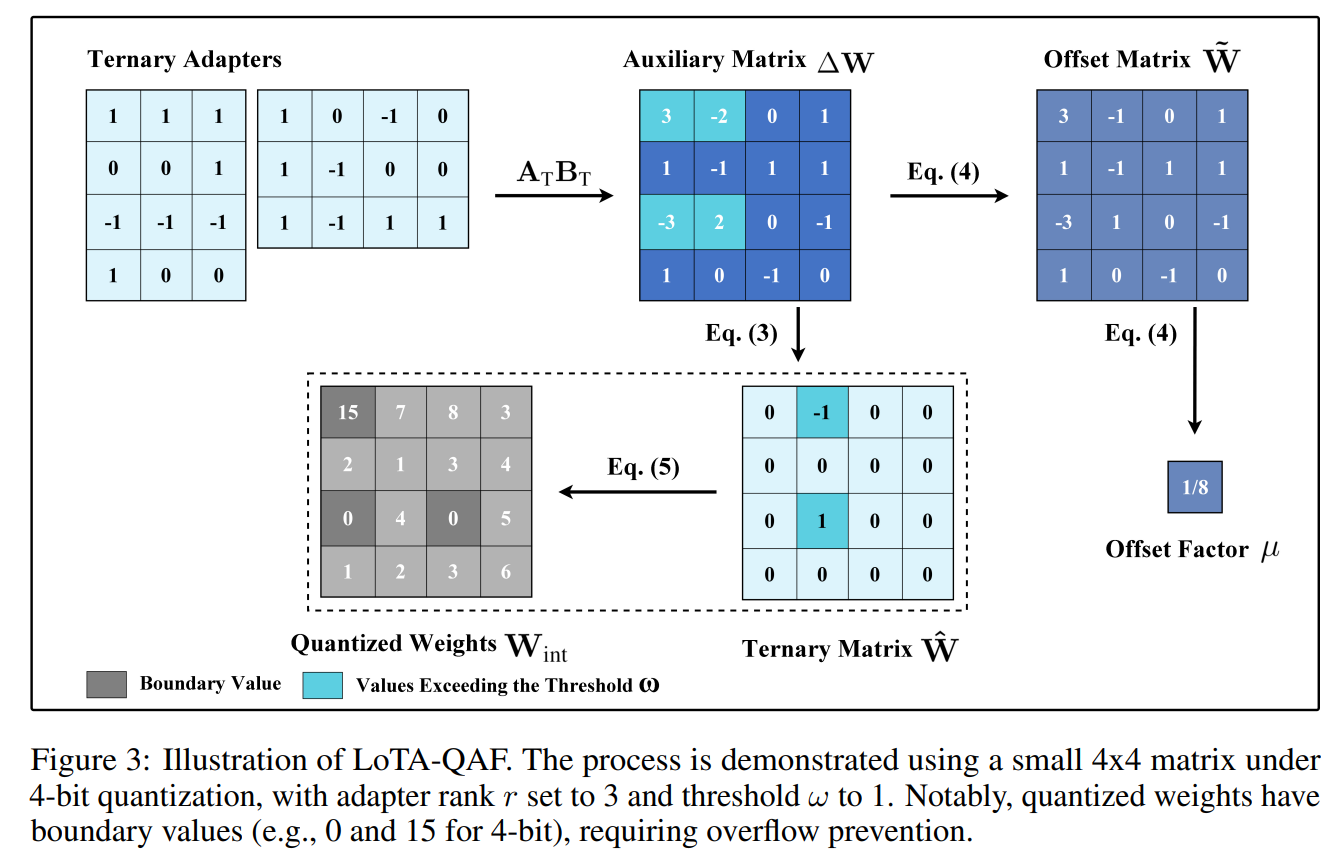

Quantization

- Memory-Efficient Fine-Tuning of Compressed Large Language Models via sub-4-bit Integer Quantization by NAVER Cloud, University of Richmond, SNU AI Center, KAIST AI (https://arxiv.org/pdf/2305.14152.pdf). The paper presents Parameter-Efficient and Quantization-aware Adaptation (PEQA), a quantization-aware PEFT technique that facilitates model compression and accelerates inference. PEQA operates through a dual-stage process: initially, the parameter matrix of each fully-connected layer is quantized into a matrix of low-bit integers and a scalar vector; subsequently, the scalar vector is fine-tuned for each downstream task. This compresses the model considerably, leading to lower inference latency upon deployment and a reduction in the overall memory required. The method demonstrates scalability and task-specific adaptation performance for several well-known models, including LLaMA, GPT-Neo and GPT-J.

- Integer or Floating Point? New Outlooks for Low-Bit Quantization on Large Language Models by Microsoft and universities of China (https://arxiv.org/pdf/2305.12356.pdf). The authors analyze the effectiveness and applicability of INT8/INT4 and FP8/FP4 precision to quantization of Large Language Models. They conclude that there is no clear winner in either the weight-only or the weights-activations quantization setting. Thus they propose a relatively simple method that selects the optimal per-layer precision for LLM quantization. They provide an extensive comparison on different LLaMA models and compare results with the recent GPTQ (for weight-only) and vanilla FP8/INT8 (for weights-activations) quantization. The proposed method outperforms the baselines.

- PTQD: Accurate Post-Training Quantization for Diffusion Models by Zhejiang University and Monash University (https://arxiv.org/pdf/2305.10657.pdf). The paper proposes a post-training quantization framework for diffusion models with a unified formulation for quantization noise and diffusion perturbed noise. The authors disentangle the quantization noise into parts that are correlated and uncorrelated with its full-precision counterpart. They correct the correlated part by estimating the correlation coefficient and propose Variance Schedule Calibration to rectify the residual uncorrelated part. The authors also introduce a Step-aware Mixed Precision scheme, which dynamically selects the appropriate bit-widths for synonymous steps, guaranteeing adequate SNR throughout the denoising process. Experiments demonstrate that the method reaches good performance for mixed-precision post-training quantization of diffusion models on certain tasks.

- LLM-QAT: Data-Free Quantization Aware Training for Large Language Models by Meta AI and Reality Labs (https://arxiv.org/pdf/2305.17888.pdf). The paper proposes a data-free distillation method that leverages generations produced by the pre-trained model, which allows quantizing generative models independently of their training data, similar to post-training quantization methods. The method quantizes both weights and activations and, in addition, the KV cache, which can be helpful for increasing throughput and supporting long sequence dependencies at current model sizes. The authors experiment with LLaMA models of sizes 7B, 13B, and 30B, at quantization levels down to 4 bits. They provide quite an extensive evaluation and compare results with modern quantization methods such as SmoothQuant.

- ZeroQuant-V2: Exploring Post-training Quantization in LLMs from Comprehensive Study to Low Rank Compensation by Microsoft (https://arxiv.org/pdf/2303.08302.pdf). The paper provides a thorough analysis of how the quantization of weights, activations, and weights-activations to different precisions (INT8 and INT4) impacts the accuracy of LLMs of different sizes and architectures (OPT and BLOOM). It states that models are less sensitive to weight quantization than to activation quantization, which aligns with common understanding. The authors also compare the popular methods round-to-nearest (RTN), GPTQ and ZeroQuant under various quantization settings (per-row, per-group, per-block). Finally, they introduce a technique called Low Rank Compensation (LoRC), which employs low-rank matrix factorization on the quantization error matrix and achieves good accuracy when applied on top of other PTQ methods.

- NF4 Isn’t Information Theoretically Optimal (and that’s Good) by Toyota Technological Institute at Chicago (https://arxiv.org/pdf/2306.06965.pdf). Interesting research where the author studies the new NormalFloat4 data type proposed in the QLoRA paper. He comes to the following conclusions: (1) the distribution of values to be quantized depends on the quantization block size, so an optimal code should vary with block size; (2) NF4 does not assign an equal proportion of inputs to each code value; (3) codes which do have that property are not as good as NF4 for quantizing language models. He applies these insights to derive an improved code based on minimizing the expected L1 reconstruction error, rather than on the quantile-based method. This leads to improved performance for larger quantization block sizes.

- SqueezeLLM: Dense-and-Sparse Quantization by BAIR UC Berkeley (https://arxiv.org/pdf/2306.07629.pdf). The paper shows that LLM (GPT) inference suffers from low arithmetic intensity and is a memory-bound problem. The authors then propose a non-uniform weight quantization method to trade computation for memory footprint. Two novel techniques are introduced. To deal with outlying weight values, the Dense-and-Sparse decomposition factorizes a layer weight into a pair of matrices with the same shape as the original: one for outliers, which is very sparse because only ~0.5% of the values are large, and another dense matrix that keeps the remaining elements. Since the sparse matrix has few non-zero elements, it can be stored in compressed sparse row (CSR) format without quantization, and GEMM/GEMV can be realized via a sparse library. As for the dense matrix, whose range is significantly narrower than the original, the authors formulate a Sensitivity-Based K-means Clustering that finds 2^bitwidth centroids by minimizing a Fisher information metric, an approximate perturbation to the loss function that can be computed efficiently without the expensive 2nd order backprop. Across LLaMA 7B, 13B, 30B and its instruction-following derivative Vicuna, SqueezeLLM in 4 or 3 bits consistently outperforms the SOTA methods GPTQ and AWQ in perplexity on C4 and WikiText-2 and on the zero-shot MMLU task. On an A6000 GPU, SqueezeLLM inference with a tailored LUT dequantization kernel shows latency comparable to GPTQ.

- Towards Accurate Post-Training Quantization for Vision Transformer by Meituan and Beihang University (https://arxiv.org/pdf/2303.14341.pdf). A practical study where the authors highlight the problems of quantization for Vision Transformer models. They propose a bottom-elimination block-wise calibration scheme that optimizes the calibration metric to perceive the overall quantization disturbance in a block-wise manner and prioritizes the crucial quantization errors that have more influence on the final output. They also design a quantization scheme for Softmax to maintain the power-law character and keep the function of the attention mechanism. Experiments on various Vision Transformer architectures and tasks (Image Classification, Object Detection, Instance Segmentation) show that accurate 8-bit quantization is achievable for most of the models.
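
The Low Rank Compensation (LoRC) idea from the ZeroQuant-V2 item above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's code: the quantizer is a plain symmetric per-row round-to-nearest scheme, the rank is fixed by hand, and the function names are hypothetical.

```python
import numpy as np

def quantize_rtn(w, bits=4):
    """Symmetric per-row round-to-nearest quantization, returned dequantized."""
    qmax = 2 ** (bits - 1) - 1
    scale = np.abs(w).max(axis=1, keepdims=True) / qmax
    scale = np.where(scale == 0, 1.0, scale)      # guard against all-zero rows
    q = np.clip(np.round(w / scale), -qmax - 1, qmax)
    return q * scale

def low_rank_compensation(w, w_q, rank):
    """Best rank-`rank` approximation of the quantization error E = W - Q(W)."""
    u, sv, vt = np.linalg.svd(w - w_q, full_matrices=False)
    return (u[:, :rank] * sv[:rank]) @ vt[:rank]

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))

w_q = quantize_rtn(w, bits=4)
w_lorc = w_q + low_rank_compensation(w, w_q, rank=8)

err_q = np.linalg.norm(w - w_q)        # error of plain RTN
err_lorc = np.linalg.norm(w - w_lorc)  # error after adding the low-rank term
```

In a deployment the two thin factors would be stored separately instead of being multiplied out as done here, so the extra storage grows with the rank and not with the full matrix size.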

CVPR 2023 conference

- NIPQ: Noise proxy-based Integrated Pseudo-Quantization by Postech and Seoul National University (https://openaccess.thecvf.com/content/CVPR2023/papers/Shin_NIPQ_Noise_Proxy-Based_Integrated_Pseudo-Quantization_CVPR_2023_paper.pdf). The authors highlight a problem with the straight-through estimator (STE): it results in unstable convergence during quantization-aware training (QAT), leading to notable quality degradation. To resolve this issue, they suggest a novel approach called noise proxy-based integrated pseudo-quantization (NIPQ) that updates all quantization parameters (e.g., bit-width and truncation boundary) as well as the network parameters via gradient descent without STE instability. Experiments show that NIPQ outperforms existing quantization algorithms in various vision and language applications by a large margin. This approach is rather general and can be used to improve any QAT pipeline.

- One-Shot Model for Mixed-Precision Quantization by Huawei (https://openaccess.thecvf.com/content/CVPR2023/papers/Koryakovskiy_One-Shot_Model_for_Mixed-Precision_Quantization_CVPR_2023_paper.pdf). The authors focus on the problem of mixed-precision quantization, where an optimal bit width needs to be selected for every layer of a model. The suggested method, One-Shot MPS, learns optimal bit widths in a gradient-based manner and finds a diverse set of Pareto-front architectures in O(1) time. The authors claim that for large models the proposed method finds the optimal bit width partition 5 times faster than existing methods.

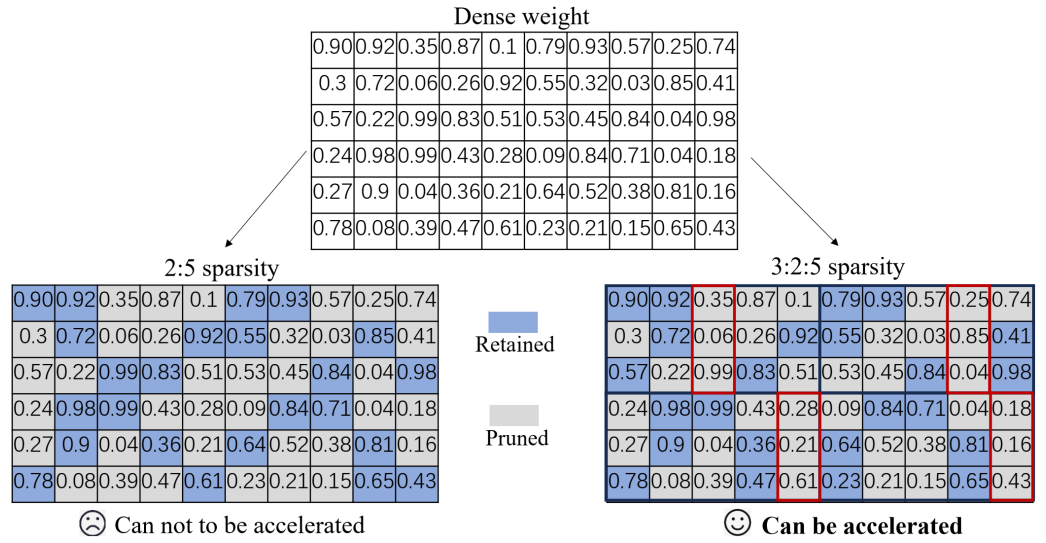

- Boost Vision Transformer with GPU-Friendly Sparsity and Quantization by Fudan University and Nvidia (https://openaccess.thecvf.com/content/CVPR2023/papers/Yu_Boost_Vision_Transformer_With_GPU-Friendly_Sparsity_and_Quantization_CVPR_2023_paper.pdf). In this paper the authors suggest an approach to maximally utilize GPU-friendly fine-grained 2:4 structured sparsity and quantization. The method consists of first pruning an FP16 vision transformer to a sparse representation and then quantizing it further to INT8/INT4 data types. This is done using multiple distillation losses in supervised or even unsupervised regimes. Experiments show about a 3x performance boost for INT4 quantization with less than 0.5% accuracy drop for classification, detection, and segmentation tasks.

- Q-DETR: An Efficient Low-Bit Quantized Detection Transformer by Beihang University, Zhongguancun Laboratory, Tencent and others (https://openaccess.thecvf.com/content/CVPR2023/papers/Xu_Q-DETR_An_Efficient_Low-Bit_Quantized_Detection_Transformer_CVPR_2023_paper.pdf). The authors show that when the DETR detection transformer is quantized, its accuracy degrades significantly because of the information loss occurring in the cross-attention module. This issue is tackled by (1) distribution alignment of detection queries to maximize the self-information entropy and (2) a foreground-aware query matching scheme to effectively transfer the teacher information to distillation-desired features. The resulting 4-bit Q-DETR can theoretically accelerate DETR with a ResNet-50 backbone by 6.6x with only a 2.6% accuracy drop.

- Some academic effort is also targeted at data-free quantization. The following CVPR works suggest improvements in this direction: GENIE: Show Me the Data for Quantization (https://arxiv.org/pdf/2212.04780.pdf) by Samsung Research; Hard Sample Matters a Lot in Zero-Shot Quantization (https://arxiv.org/pdf/2303.13826.pdf) by South China University of Technology and others; Adaptive Data-Free Quantization (https://arxiv.org/pdf/2303.06869.pdf) by Key Laboratory of Knowledge Engineering with Big Data and others.
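
The 2:4 structured sparsity pattern mentioned in the Boost Vision Transformer item above keeps two non-zero values in every group of four consecutive weights. A minimal NumPy sketch of building such a mask follows; choosing the values by magnitude is an assumption made for illustration (the paper trains with distillation losses), and `prune_2_4` is a hypothetical helper name.

```python
import numpy as np

def prune_2_4(w):
    """Zero the two smallest-magnitude values in every group of four along rows."""
    rows, cols = w.shape
    if cols % 4 != 0:
        raise ValueError("number of columns must be divisible by 4")
    groups = w.reshape(rows, cols // 4, 4)
    # Indices of the two smallest magnitudes inside each group of four.
    drop = np.argsort(np.abs(groups), axis=-1)[..., :2]
    mask = np.ones(groups.shape, dtype=bool)
    np.put_along_axis(mask, drop, False, axis=-1)
    return (groups * mask).reshape(rows, cols), mask.reshape(rows, cols)

w = np.array([[0.1, -0.9, 0.5, 0.05, 2.0, -0.3, 0.2, -1.5]])
w_sparse, mask = prune_2_4(w)
# First group keeps -0.9 and 0.5, second group keeps 2.0 and -1.5.
```

The fixed two-out-of-four layout is what makes the pattern hardware friendly: every group stores the same number of values plus a small index, so the sparse matrix stays regular.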

Pruning

- DepGraph: Towards Any Structural Pruning by NUS, Zhejiang University and Huawei (https://arxiv.org/pdf/2301.12900.pdf). This paper tackles a highly challenging yet rarely explored aspect of structured pruning: generalized identification of dependent structures across layers for joint sparsification and removal. This problem is non-trivial, stemming from varying dependencies in different model architectures, the choice of pruning schemes, and implementation. The authors start with all-to-all layer dependency, detailing the considerations and design decisions, and gradually arrive at an intra- and inter-layer dependency graph (DepGraph). The paper then shows how to use DepGraph to resolve the grouping of dependent layers and structures. In experiments, norm-based regularization (L2) on the derived groups is employed for sparsification training. The speedup and accuracy on CNN/CIFAR are competitive with many SOTA works. Most importantly, the paper shows the applicability of DepGraph not only to CNNs but also to transformers, GNNs and RNNs, as well as the challenging DenseNet, which has nested shortcut connections. This work is a good reference for any model optimization SW framework, as structural dependency also exists for quantization and NAS. Code is available at https://github.com/VainF/Torch-Pruning.

- Structural Pruning for Diffusion Models by NUS (https://arxiv.org/pdf/2305.10924.pdf). The paper introduces a method for pruning Diffusion models. The essence of the method is a Taylor expansion over pruned timesteps, a process that disregards non-contributory diffusion steps and ensembles informative gradients to identify important weights. An empirical assessment across four diverse datasets shows that the method enables approximately a 50% reduction in FLOPs at a mere 10% to 20% of the original training expenditure, and that the pruned diffusion models inherently preserve generative behavior congruent with their pre-trained progenitors at low resolution. The code is available at https://github.com/VainF/Diff-Pruning.

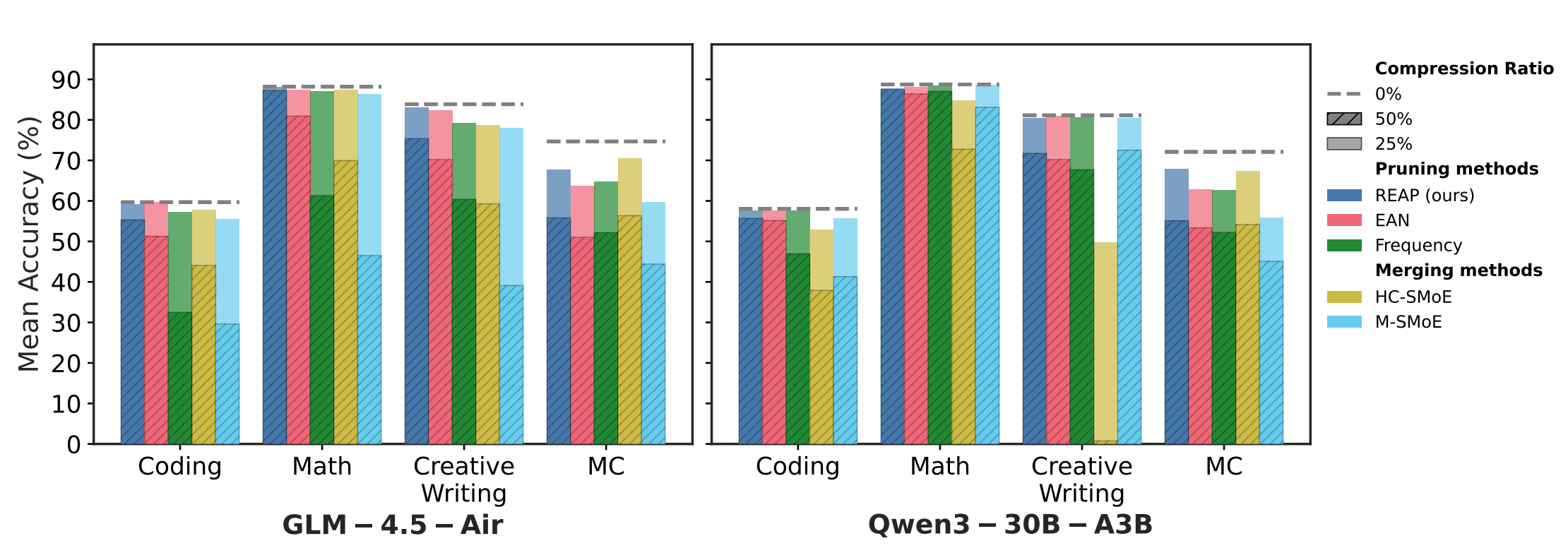

- LLM-Pruner: On the Structural Pruning of Large Language Models by NUS (https://arxiv.org/pdf/2305.11627.pdf). The paper introduces a framework for task-agnostic structural pruning of large language models. The main advantage of the framework is that structural pruning is automatic: all the dependent structures are grouped without the need for any manual design. To evaluate the effectiveness of LLM-Pruner, the authors conduct experiments on three large language models: LLaMA-7B, Vicuna-7B, and ChatGLM-6B. The compressed models are evaluated using nine datasets to assess both the generation quality and the zero-shot classification performance of the pruned models. The experimental results demonstrate that with the removal of 20% of the parameters, the pruned model maintains 93.6% of the performance of the original model after light-weight fine-tuning.

- SpQR: A Sparse-Quantized Representation for Near-Lossless LLM Weight Compression by Neural Magic, Yandex, and universities of US, Europe and Russia (https://arxiv.org/pdf/2306.03078.pdf). The paper introduces a new compressed format and quantization technique that enables near-lossless compression of LLMs across model scales, while reaching compression levels similar to previous methods. The method works by identifying and isolating outlier weights, which cause particularly large quantization errors, and storing them in higher precision, while compressing all other weights to 3-4 bits. It achieves relative accuracy losses of less than 1% in perplexity for highly-accurate LLaMA and Falcon LLMs. This makes it possible to run a 33B parameter LLM on a single 24 GB consumer GPU without any performance degradation and with a 15% speedup. Code is available at: https://github.com/Vahe1994/SpQR.

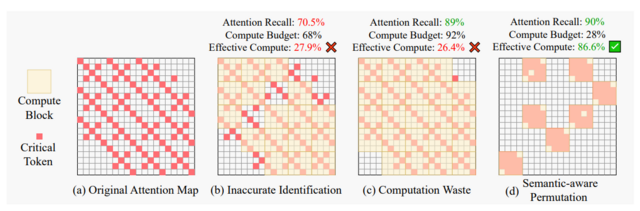

- Dynamic Context Pruning for Efficient and Interpretable Autoregressive Transformers by ETH, CSEM, University of Basel (https://arxiv.org/pdf/2305.15805.pdf). The paper aims to overcome the length-quadratic complexity of global causal attention and argues that static sparse attention (such as Big Bird, Sparse Attention) is sub-optimal in modeling, requires pretraining from scratch, and has limited memory benefit during generation. The authors propose a fine-tuning method to learn layer-specific sparse attention which adaptively prunes tokens in the context during deployment. Essentially, auxiliary modules are introduced at each layer to learn the interactions between query and key and predict the retention of tokens. Although additional cost is incurred, high sparsity in long contexts results in an overall memory benefit by reducing storage and retrieval from the key-value cache, as well as a compute benefit from fewer tokens in the attention computation. Compared to adapting GPT2 with local and sparse attention, online context pruning shows lower (better) perplexity and retains perplexity when up to 60% of tokens are pruned from the context. Mean zero-shot accuracy across a number of tasks is shown to be maintained at a high level of sparsity.

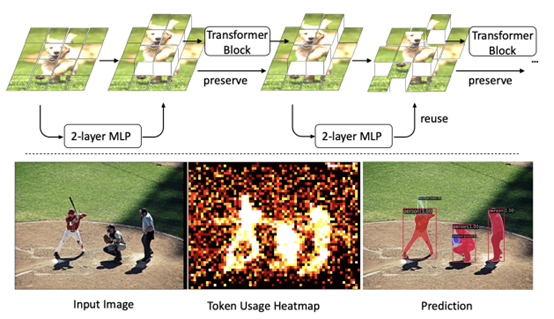

- Revisiting Token Pruning for Object Detection and Instance Segmentation by University of Zurich (https://arxiv.org/pdf/2306.07050.pdf). In this paper, the authors investigate token pruning to accelerate inference for object detection and instance segmentation, extending prior works from image classification. Through the experiments, they offer four insights for dense tasks: (i) tokens should not be completely pruned and discarded, but rather preserved in the feature maps for later use; (ii) reactivating previously pruned tokens can further enhance model performance; (iii) a dynamic pruning rate based on images is better than a fixed pruning rate; (iv) a lightweight, 2-layer MLP can effectively prune tokens, achieving accuracy comparable with complex gating networks with a simpler design. The authors evaluate the impact of these design choices on the COCO dataset and present a method integrating these insights that outperforms prior art token pruning models, significantly reducing the performance drop from ∼1.5 mAP to ∼0.3 mAP for both boxes and masks.

- Pruning Meets Low-Rank Parameter-Efficient Fine-Tuning by Zhejiang University and Monash University (https://arxiv.org/pdf/2305.18403.pdf). The paper introduces a parameter importance criterion for large pre-trained models that works with LoRA. Using the gradients of the low-rank decomposition, it can approximate the importance of pre-trained parameters without the need to compute their gradients. Based on this, the authors introduce LoRAPrune, an approach that unifies PEFT with pruning. Experiments on computer vision and natural language processing tasks demonstrate that LoRAPrune outperforms the compared pruning methods and achieves competitive performance with other (quantization-based) PEFT methods.
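
The outlier isolation behind SpQR above (and the Dense-and-Sparse decomposition in SqueezeLLM) can be illustrated with a toy NumPy experiment. This is a simplified sketch under stated assumptions: outliers are picked by magnitude only (SpQR selects them by their effect on the quantization error), the quantizer is plain per-row round-to-nearest, the sparse part is kept as a dense array of mostly zeros, and all names are hypothetical.

```python
import numpy as np

def quantize_rtn(w, bits=3):
    """Symmetric per-row round-to-nearest quantization, returned dequantized."""
    qmax = 2 ** (bits - 1) - 1
    scale = np.abs(w).max(axis=1, keepdims=True) / qmax
    scale = np.where(scale == 0, 1.0, scale)      # guard against all-zero rows
    return np.clip(np.round(w / scale), -qmax - 1, qmax) * scale

def split_outliers(w, frac=0.01):
    """Split w into a dense part and a sparse part holding the largest values."""
    k = max(1, int(round(frac * w.size)))
    thresh = np.partition(np.abs(w).ravel(), -k)[-k]   # k-th largest magnitude
    is_outlier = np.abs(w) >= thresh
    return np.where(is_outlier, 0.0, w), np.where(is_outlier, w, 0.0)

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))
w.flat[rng.choice(w.size, size=40, replace=False)] = 25.0   # inject outliers

dense, sparse = split_outliers(w, frac=0.01)

w_plain = quantize_rtn(w, bits=3)                # outliers stretch the row scales
w_split = quantize_rtn(dense, bits=3) + sparse   # outliers kept in full precision

err_plain = np.linalg.norm(w - w_plain)
err_split = np.linalg.norm(w - w_split)
```

Removing a handful of large values shrinks the per-row quantization range, so the remaining weights are represented on a much finer grid, which is why `err_split` comes out lower than `err_plain` in this toy setup.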

CVPR 2023 conference

- Integral Neural Networks by Thestage.ai and Huawei (https://openaccess.thecvf.com/content/CVPR2023/papers/Solodskikh_Integral_Neural_Networks_CVPR_2023_paper.pdf). A new family of deep neural networks called Integral Neural Networks (INN) is introduced in this paper. The weights of INNs are represented as continuous N-dimensional functions, and they are applied by continuous integration operation. During inference, continuous layers can be discretized into fixed representation with an arbitrary resolution. This can be used to prune the model to a desired degree without any fine-tuning and suffering only a small performance loss. Authors also suggest how a pre-trained CNN can be converted to INN. Results show that INNs achieve the same accuracy as CNNs while performing much better when pruned without fine-tuning. For example, a 30% pruned Integral ResNet18 has a 2% accuracy drop on ImageNet compared to 65% accuracy drop for a regular ResNet18.

- Joint Token Pruning and Squeezing Towards More Aggressive Compression of Vision Transformers by MEGVII Technology and Tsinghua University (https://openaccess.thecvf.com/content/CVPR2023/papers/Wei_Joint_Token_Pruning_and_Squeezing_Towards_More_Aggressive_Compression_of_CVPR_2023_paper.pdf). The authors attempt to reduce vision transformer computational costs by pruning redundant tokens. Unlike traditional token pruning methods, the proposed Token Pruning & Squeezing (TPS) module also squeezes the pruned tokens into the kept ones according to their similarity. Experiments on various ViTs demonstrate the effectiveness of the method and its higher robustness to the errors of the token pruning policy. In particular, for DeiT-tiny and DeiT-small, TPS shrinks the computational budget by 35% while improving accuracy by 1-6% compared to baselines on ImageNet classification.

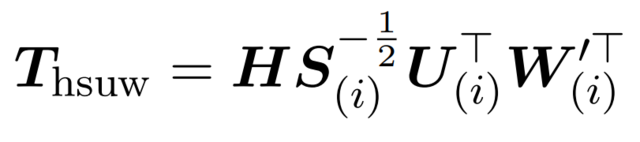

- Global Vision Transformer Pruning with Hessian-Aware Saliency by Nvidia, Berkeley and Duke University (https://arxiv.org/pdf/2110.04869.pdf). The authors propose the first systematic approach to global structural pruning of vision transformers by redistributing the parameters both across transformer blocks and between different structures within a block. Pruning is performed according to a novel Hessian-based criterion that is comparable across all layers and structures. A new ViT architecture called Novel ViT (NViT) is obtained by iterative pruning of DeiT-Base. NViT-Base achieves a 2.5x FLOPs reduction and a 1.9x speedup with almost no accuracy degradation. Based on this and other results, the authors claim to outperform the prior state of the art by a large margin.

Neural Architecture Search

- PreNAS: Preferred One-Shot Learning Towards Efficient Neural Architecture Search by Alibaba Group (https://arxiv.org/pdf/2304.14636.pdf). The authors demonstrate the use of zero-cost proxies to accelerate the training and improve the sample efficiency of weight-sharing NAS. Their method groups Transformer isomers and discards a subset of each group based on each architecture configuration's zero-cost score. The reduced search space is then used during training. PreNAS outperforms alternative state-of-the-art NAS methods for both Vision Transformers and architectures based on convolution operations.

- Mixture-of-Supernets: Improving Weight-Sharing Supernet Training with Architecture-Routed Mixture-of-Experts by Meta and the University of British Columbia (https://arxiv.org/abs/2306.04845). Authors tackle several issues in traditional weight-sharing NAS, i.e., in NLP tasks, there is an observed performance gap between the selected architectures and training the same architectures from scratch, and additional training is required to improve the final accuracy of the Pareto front. The proposed method uses Mixture of Experts (MoE) to improve the underlying weight-sharing mechanisms. The authors demonstrate the approach using machine translation models, which achieve state-of-the-art performance.

- LayerNAS: Neural architecture search in polynomial complexity by Google Research (https://arxiv.org/pdf/2304.11517.pdf). The authors propose LayerNAS, an algorithm that enforces a sequential search process, transforming multi-objective NAS into a combinatorial optimization problem. LayerNAS outperforms several NAS baseline models in top-1 accuracy. However, it often obtains these improvements with models that have a larger number of parameters and MAdds. LayerNAS is not as efficient as One-shot NAS, but future work will attempt to apply LayerNAS insights to One-shot NAS approaches.

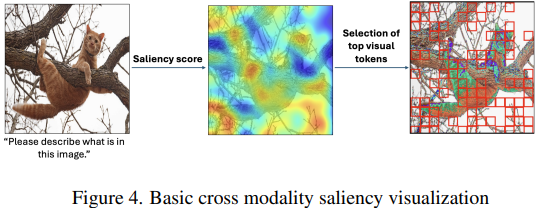

Other

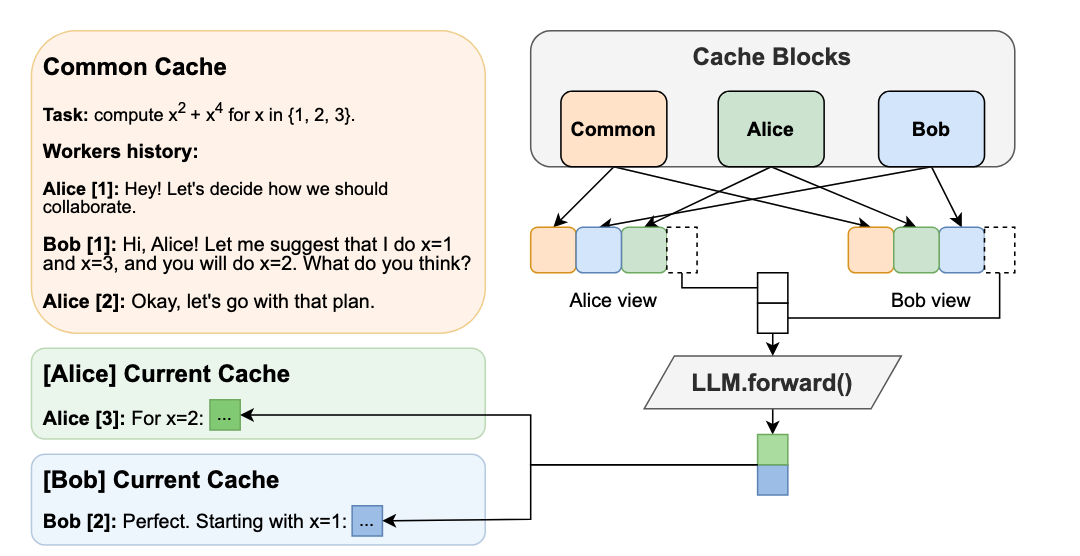

- Inference with Reference: Lossless Acceleration of Large Language Models by Microsoft (https://arxiv.org/pdf/2304.04487.pdf). The authors study accelerating LLM inference by improving the efficiency of autoregressive decoding. They observe that in many real-world applications an LLM’s output tokens often come from its context, and they propose LLMA, an inference-with-reference decoding mechanism that accelerates LLM inference by exploiting the overlap between an LLM’s output and a reference that is available in many practical scenarios. Experiments show that the LLMA method generates results identical to greedy decoding but achieves over 2x speed-up across different model sizes in practical application scenarios like retrieval-augmented and cache-assisted generation.

- Scaling Down to Scale Up: A guide to Parameter-Efficient Fine-Tuning by The University of Massachusetts (https://arxiv.org/pdf/2303.15647.pdf). This survey summarizes over 40 papers on parameter-efficient fine-tuning methods published between February 2019 and February 2023. It provides a taxonomy (see figure 2), highlights key methods in each category with pseudocode, and compares them qualitatively in terms of storage, backprop, and inference efficiency (Table 1). It is a good time-saving paper for keeping up with the space.

- On Architectural Compression of Text-to-Image Diffusion Models by Nota Inc. Korea (https://arxiv.org/pdf/2305.15798.pdf). This work compresses pretrained Stable Diffusion (v1.4) by handpicked removal of multiple residual and attention blocks in the denoising UNet. The derived models are subsequently trained with diffusion loss in conjunction with knowledge distillation to match the teacher’s noise prediction and intermediate feature maps. The authors produce 3 models (base, small, tiny), with each training run utilizing only a single A100 GPU and 0.1% of text-image pairs from LAION Aesthetics V2 6.5+. On Xeon Cascade Lake and RTX3090, the latency of a single 512x512 text-to-image generation (25-step denoising) has been shown to improve by 30-45%. The authors also show the applicability of the distilled models to DreamBooth personalization, demonstrating up to 99% of the performance of DreamBooth with the original Stable Diffusion model.
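
The inference-with-reference idea from the LLMA item above can be sketched with a toy decoder in plain Python. Everything here is a simplified assumption made for illustration: `toy_next_token` is a hypothetical stand-in for a greedy LLM step, the match length and copy length are arbitrary, and the draft tokens are checked one by one while being counted as a single model pass (a real implementation would score all draft positions in one parallel forward pass).

```python
def find_copy_span(output, reference, n_match=2, k_copy=4):
    """Reference tokens that follow the first match of the last n_match outputs."""
    if len(output) < n_match:
        return []
    suffix = output[-n_match:]
    for i in range(len(reference) - n_match + 1):
        if reference[i:i + n_match] == suffix:
            return reference[i + n_match:i + n_match + k_copy]
    return []

def greedy_decode(next_token, prompt, max_new):
    out = list(prompt)
    for _ in range(max_new):
        out.append(next_token(out))
    return out

def decode_with_reference(next_token, prompt, reference, max_new):
    out, passes = list(prompt), 0
    target_len = len(prompt) + max_new
    while len(out) < target_len:
        draft = find_copy_span(out, reference)
        passes += 1                      # one pass verifies the whole draft
        for tok in draft:
            pred = next_token(out)       # the model's own greedy choice
            out.append(pred)             # always keep the model's token
            if pred != tok or len(out) == target_len:
                break                    # draft diverged or output is complete
        else:
            out.append(next_token(out))  # draft accepted (or empty): one more token
    return out, passes

document = "the quick brown fox jumps over the lazy dog".split()

def toy_next_token(context):
    # Hypothetical model that simply recites `document` by position.
    return document[len(context) % len(document)]

prompt = document[:2]
baseline = greedy_decode(toy_next_token, prompt, max_new=7)
fast, passes = decode_with_reference(toy_next_token, prompt, document, max_new=7)
```

Since only tokens the model itself would have produced are ever appended, `fast` matches `baseline`, and whenever the output overlaps with the reference the decoder needs fewer passes than the number of generated tokens.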

Deep Learning Software

- JaxPruner: A Concise Library for Sparsity Research by Google Research (https://arxiv.org/pdf/2304.14082.pdf). Google Research open-sources a weight pruning framework for research on network sparsification and sparse network training in the JAX ecosystem. JaxPruner works seamlessly with the popular JAX optimization library Optax and provides a common abstraction for weight masking, mask update scheduling, pruning regularity, sparse training (straight-through estimator) and sparse model storage format. In the companion paper, JaxPruner implements a set of baseline sparsity algorithms and demonstrates easy integration with JAX frameworks from various domains, such as FedJAX (Federated Learning), t5x (NLP), and Dopamine & Acme (Deep RL). See https://github.com/google-research/jaxpruner for more details.
