OpenVINO™ Enables PaddlePaddle Quantized Models

OpenVINO™ is a toolkit that enables developers to deploy pre-trained deep learning models through a C++ or Python inference engine API. The latest OpenVINO™ release adds support for PaddlePaddle quantized models, which helps accelerate their deployment.

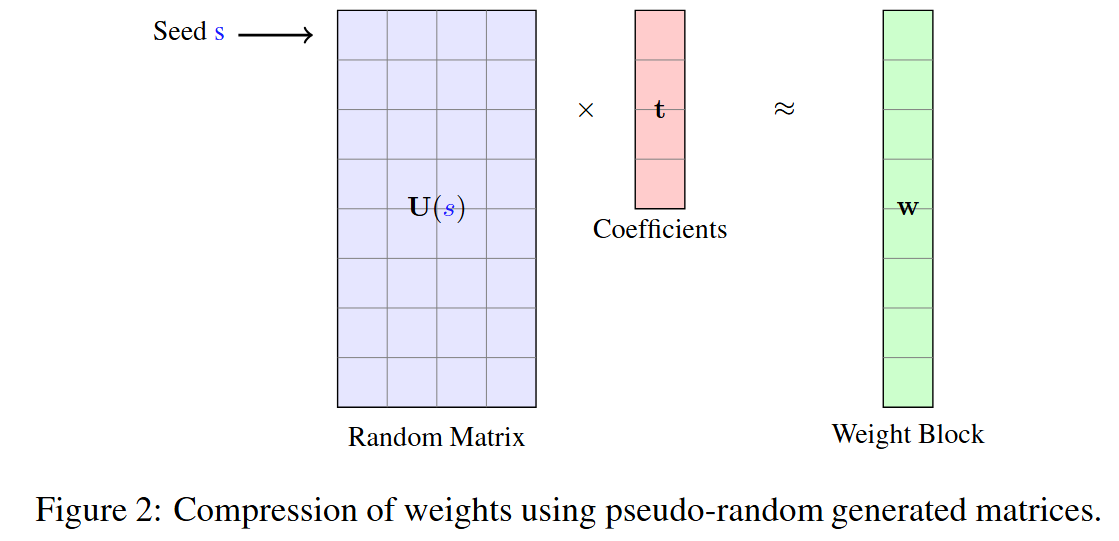

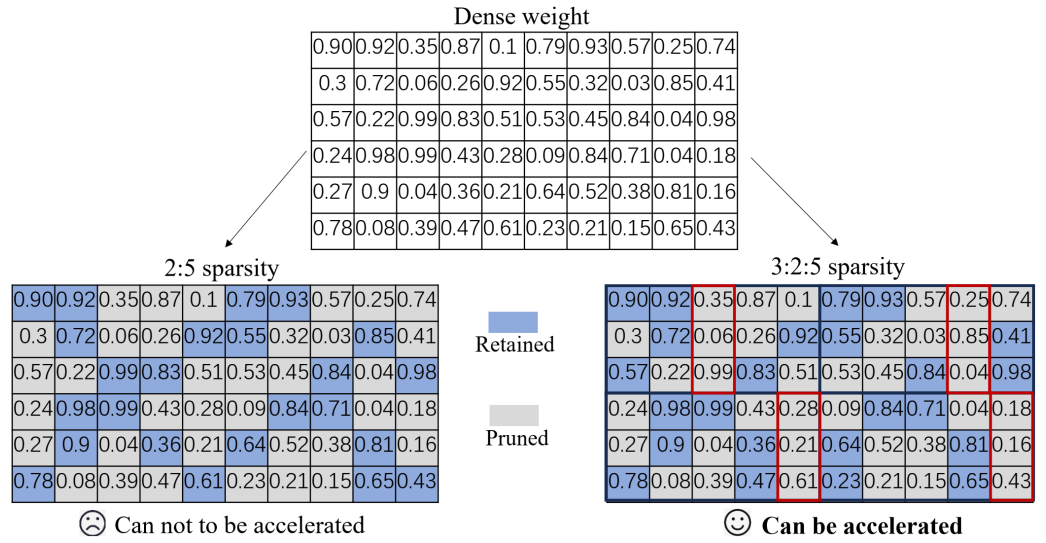

From floating-point model to quantized model in PaddlePaddle

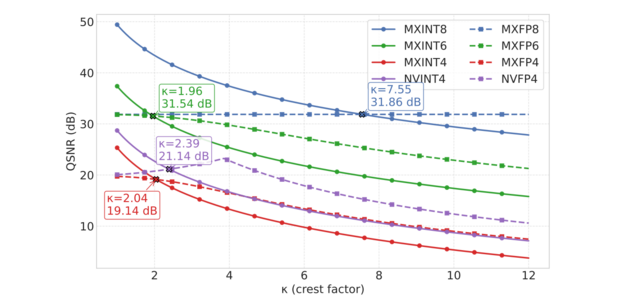

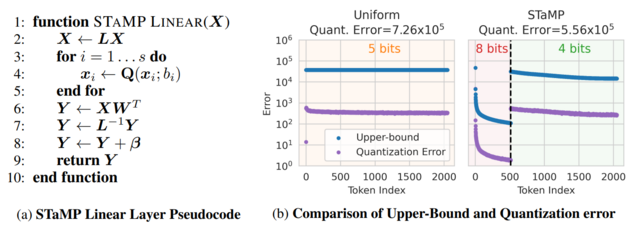

Baidu has released PaddleSlim, a toolkit for compressing PaddlePaddle models. Quantization, one of the techniques in PaddleSlim, reduces redundancy by converting full-precision floating-point data to fixed-point integers, which lowers computational complexity and improves inference performance. PaddleSlim quantizes a model in the following steps:

- Insert the quantize_linear and dequantize_linear nodes into the floating-point model.

- Calculate the scale and zero_point in each layer during the calibration process.

- Convert the floating-point model to a quantized model according to the quantization parameters, and export it.
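The calibration and export steps can be sketched in plain Python. This is a minimal sketch under stated assumptions: symmetric per-tensor INT8 quantization with abs-max calibration, so zero_point is fixed at 0. PaddleSlim may use other calibration algorithms and per-channel scales, and the function names `abs_max_scale` and `export_int8` are hypothetical, not PaddleSlim APIs.

```python
def abs_max_scale(batches, num_bits=8):
    """Calibrate a symmetric per-tensor scale from the largest |value| seen.

    Assumption: abs-max calibration with zero_point = 0 (symmetric INT8).
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    abs_max = max((abs(v) for batch in batches for v in batch), default=0.0)
    scale = abs_max / qmax if abs_max > 0 else 1.0
    return scale, 0  # (scale, zero_point)


def export_int8(weights, scale, zero_point=0, num_bits=8):
    """Store weights as clamped integers, the form kept in the exported model.

    Python's round() rounds ties to even; real kernels may round differently.
    """
    qmax = 2 ** (num_bits - 1) - 1
    qmin = -qmax - 1
    return [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]


if __name__ == "__main__":
    # Hypothetical activation batches observed during calibration.
    act_scale, act_zp = abs_max_scale([[0.5, -1.27], [1.0, 0.3]])
    print(act_scale, act_zp)
    # Weights can be calibrated from the tensor itself, then exported.
    weights = [0.8, -0.4, 0.05]
    w_scale, w_zp = abs_max_scale([weights])
    print(export_int8(weights, w_scale, w_zp))
```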

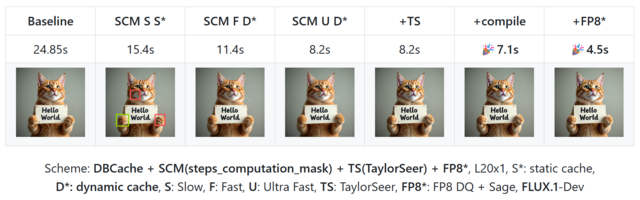

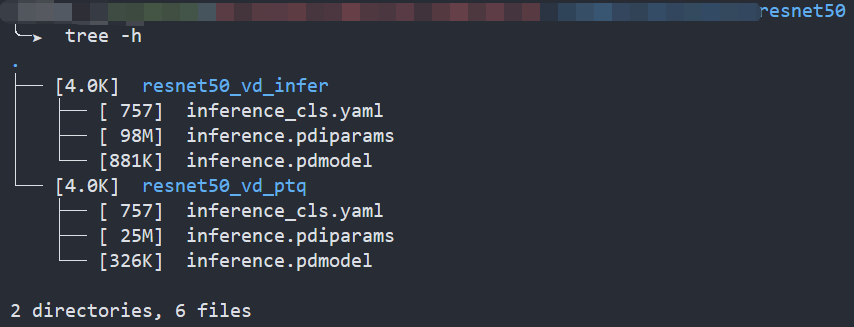

As Figure 1 shows, the quantized model is about 75% smaller than the floating-point model.
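The figure of about 75% follows from storage width: an FP32 value takes 4 bytes and an INT8 value takes 1 byte. The sketch below shows why the saving lands slightly under 75% rather than exactly on it, assuming that some values (for example biases or quantization scales) stay in FP32; the split used in the usage lines is purely hypothetical, not measured from the resnet50 model.

```python
FP32_BYTES = 4  # bytes per float32 value
INT8_BYTES = 1  # bytes per int8 value


def size_reduction(num_quantized, num_kept_fp32=0):
    """Fraction of storage saved when num_quantized values move FP32 -> INT8.

    num_kept_fp32 counts values assumed to remain in FP32; it is an
    illustrative knob, not data from the article.
    """
    fp32_bytes = (num_quantized + num_kept_fp32) * FP32_BYTES
    int8_bytes = num_quantized * INT8_BYTES + num_kept_fp32 * FP32_BYTES
    return 1 - int8_bytes / fp32_bytes


if __name__ == "__main__":
    print(f"{size_reduction(1_000_000):.2%}")            # every value quantized
    print(f"{size_reduction(25_000_000, 100_000):.2%}")  # hypothetical split
```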

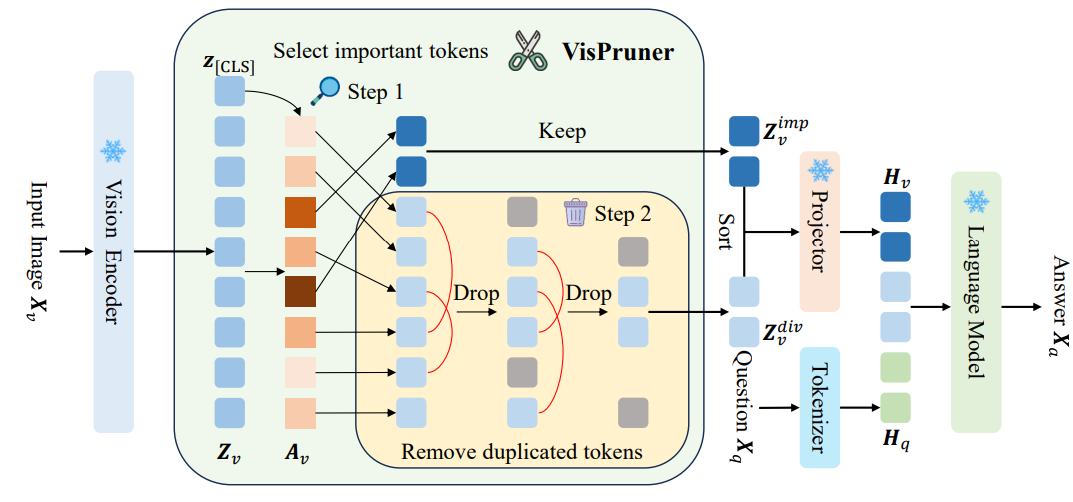

Enable PaddlePaddle quantized model in OpenVINO™

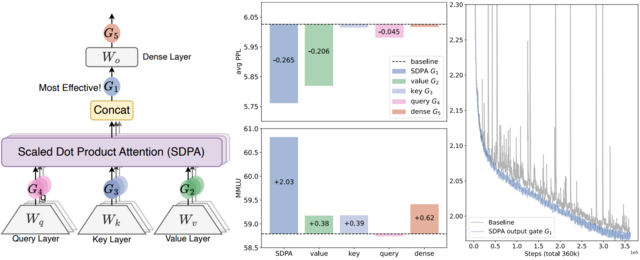

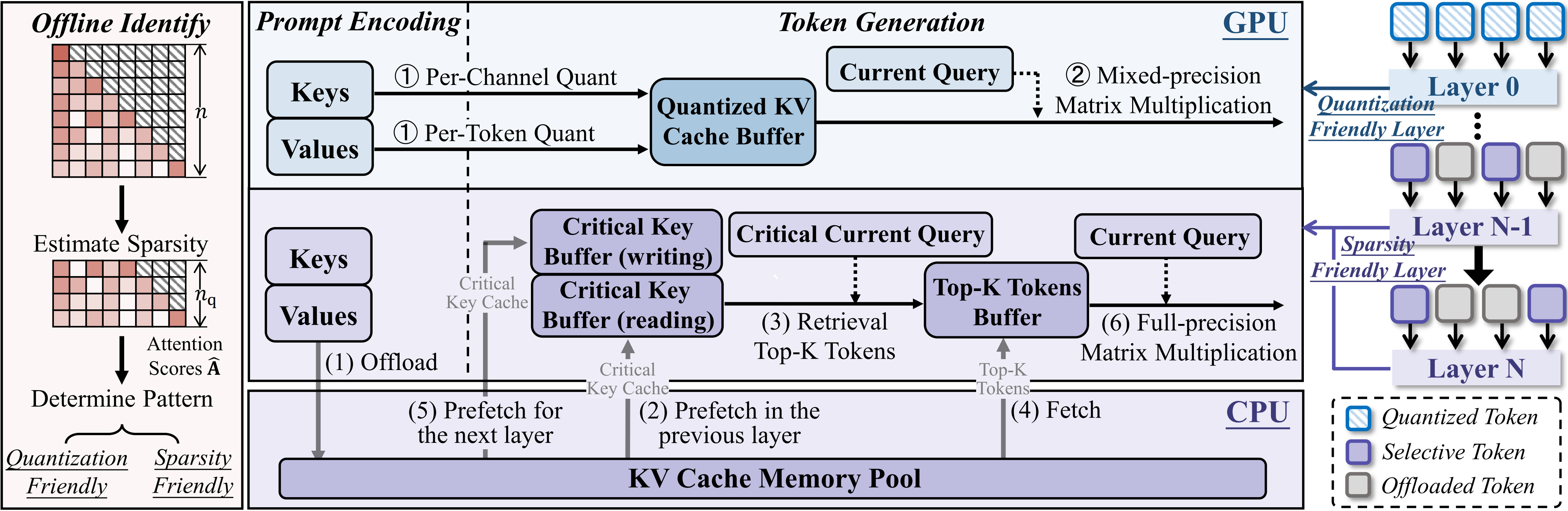

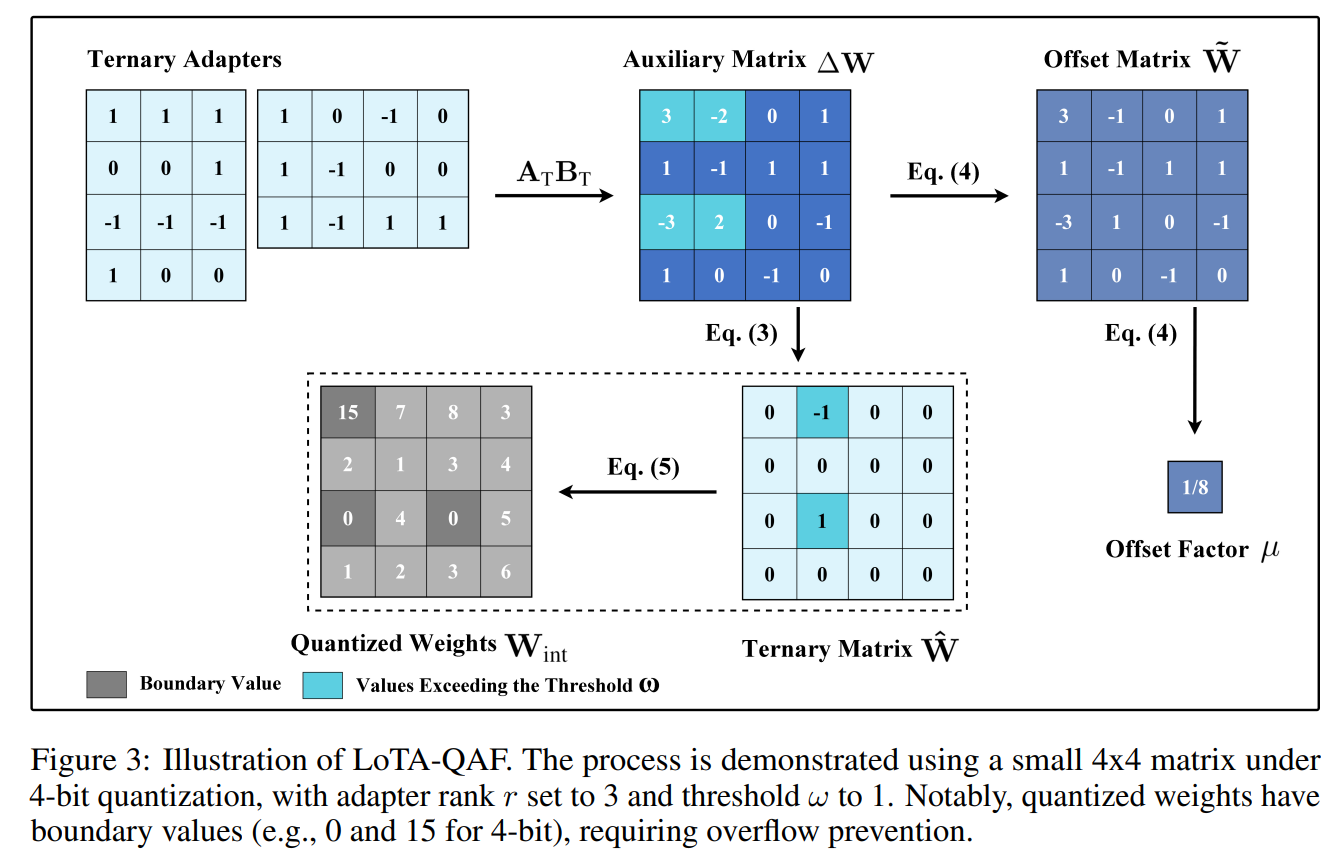

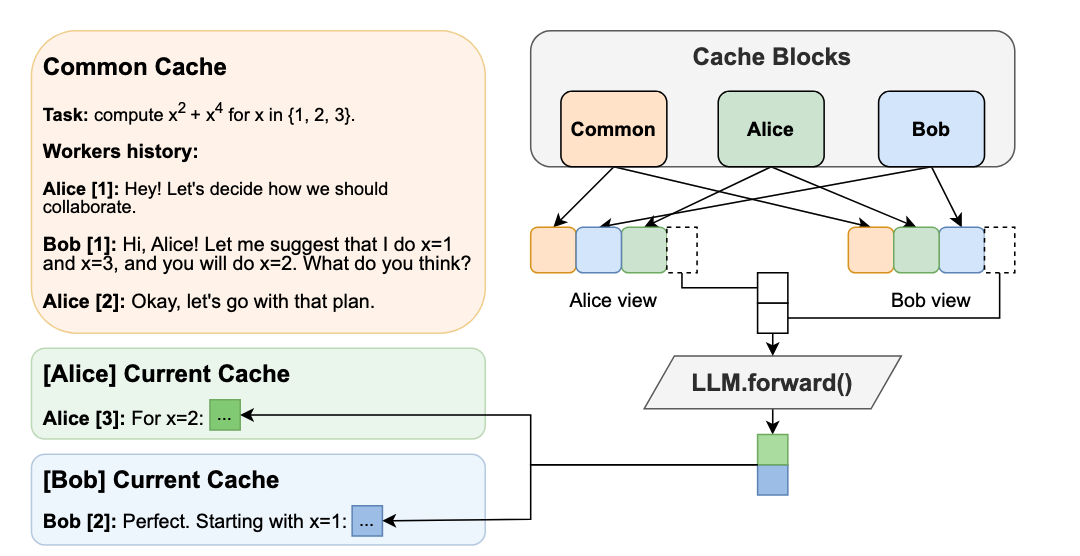

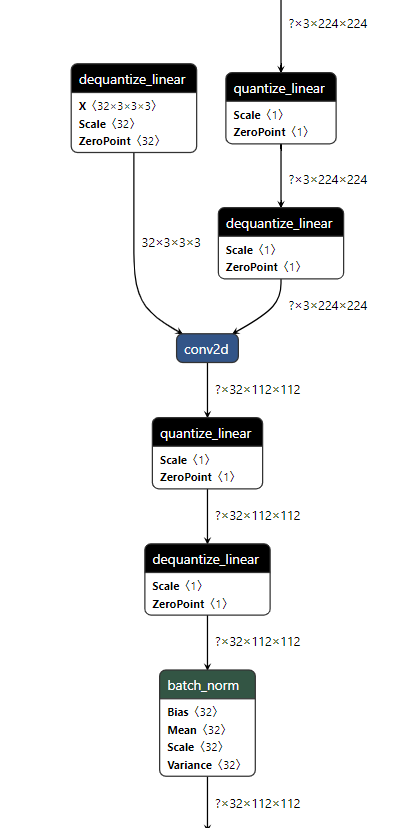

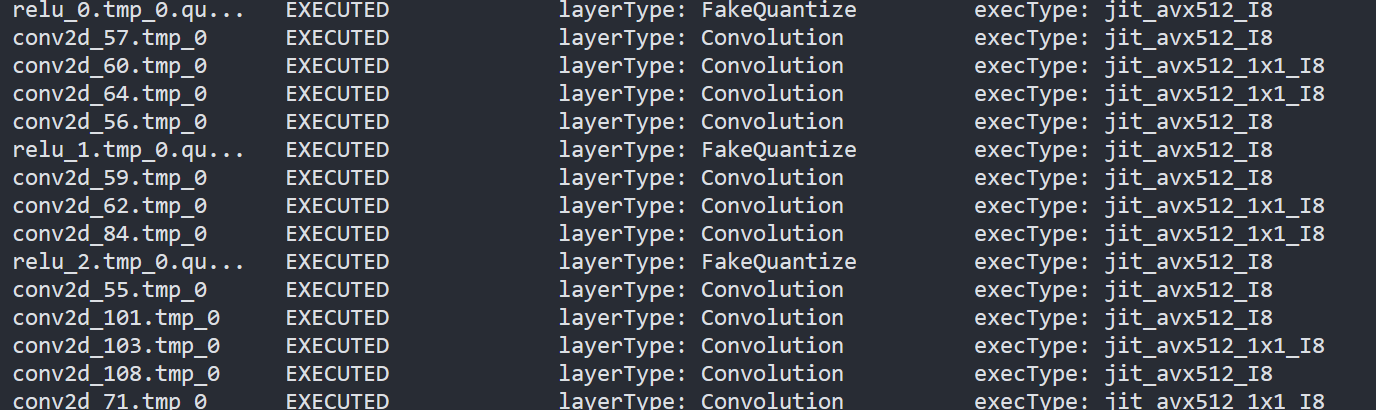

As Figure 2.1 shows, paired quantize_linear and dequantize_linear nodes appear at intervals throughout the model.
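To make the paired structure concrete, here is a toy pattern scan. It assumes a hypothetical, strictly sequential graph (a Python list of `Node` records, with scale and zero_point stored in `attrs`) and collapses each adjacent quantize_linear → dequantize_linear pair that shares the same parameters into one FakeQuantize placeholder. OpenVINO™ performs this kind of matching on its own graph representation; the `Node` class and `fuse_qdq_pairs` function here are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    op: str                                    # operator type, e.g. "conv2d"
    attrs: dict = field(default_factory=dict)  # e.g. scale / zero_point


def fuse_qdq_pairs(nodes):
    """Replace each adjacent quantize_linear -> dequantize_linear pair
    with matching attrs by a single FakeQuantize node.

    The toy graph is a straight chain (list order == data flow), so the
    pattern match only needs to look one node ahead.
    """
    fused, i = [], 0
    while i < len(nodes):
        cur = nodes[i]
        nxt = nodes[i + 1] if i + 1 < len(nodes) else None
        if (cur.op == "quantize_linear" and nxt is not None
                and nxt.op == "dequantize_linear" and cur.attrs == nxt.attrs):
            fused.append(Node("FakeQuantize", dict(cur.attrs)))
            i += 2  # both nodes of the pair are consumed
        else:
            fused.append(cur)
            i += 1
    return fused


if __name__ == "__main__":
    qp = {"scale": 0.02, "zero_point": 0}  # hypothetical parameters
    graph = [Node("feed"), Node("quantize_linear", qp), Node("dequantize_linear", qp),
             Node("conv2d"), Node("relu"),
             Node("quantize_linear", qp), Node("dequantize_linear", qp), Node("matmul")]
    print([n.op for n in fuse_qdq_pairs(graph)])
```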

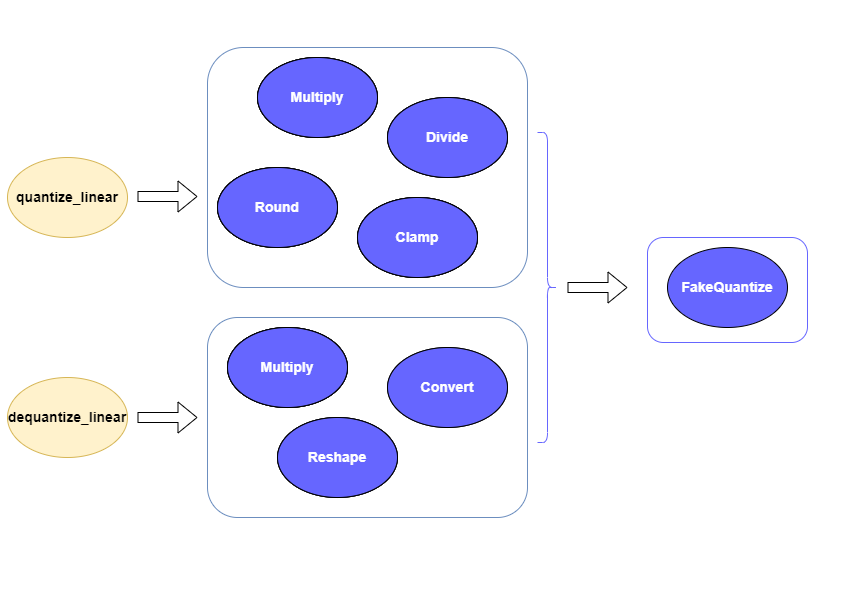

To enable PaddlePaddle quantized models, OpenVINO™ first maps both the quantize_linear and dequantize_linear nodes. Each quantize_linear/dequantize_linear pattern can then be fused into a FakeQuantize node, after which the OpenVINO™ transformation mechanism simplifies and optimizes the model graph for quantized execution.
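Why a quantize_linear/dequantize_linear pair can be replaced by one FakeQuantize node can be checked numerically. The sketch assumes ONNX-style semantics, q = clamp(round(x / scale) + zero_point), with INT8 bounds qmin = -128 and qmax = 127, and implements the element-wise FakeQuantize formula from the OpenVINO™ operation specification with round-half-up as an assumed rounding mode. Choosing input_low = output_low = (qmin - zero_point)·scale, input_high = output_high = (qmax - zero_point)·scale and levels = qmax - qmin + 1 makes FakeQuantize reproduce dequantize(quantize(x)). All function names are illustrative Python, not OpenVINO™ or PaddlePaddle APIs.

```python
import math


def round_half_up(v):
    """Round to the nearest integer, ties upward (assumed rounding mode)."""
    return math.floor(v + 0.5)


def quantize_linear(x, scale, zero_point, qmin=-128, qmax=127):
    """ONNX-style quantization: clamp(round(x / scale) + zero_point)."""
    q = round_half_up(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize_linear(q, scale, zero_point):
    """ONNX-style dequantization: (q - zero_point) * scale."""
    return (q - zero_point) * scale


def fake_quantize(x, input_low, input_high, output_low, output_high, levels):
    """Scalar version of the element-wise FakeQuantize formula."""
    if x <= min(input_low, input_high):
        return output_low
    if x > max(input_low, input_high):
        return output_high
    k = round_half_up((x - input_low) / (input_high - input_low) * (levels - 1))
    return k / (levels - 1) * (output_high - output_low) + output_low


def qdq_to_fake_quantize_params(scale, zero_point, qmin=-128, qmax=127):
    """FakeQuantize bounds that mimic one quantize/dequantize pair."""
    low = (qmin - zero_point) * scale
    high = (qmax - zero_point) * scale
    return low, high, low, high, qmax - qmin + 1


if __name__ == "__main__":
    scale, zp = 0.5, 3  # hypothetical quantization parameters
    params = qdq_to_fake_quantize_params(scale, zp)
    for x in (-100.0, -3.3, 0.0, 1.2, 100.0):
        qdq = dequantize_linear(quantize_linear(x, scale, zp), scale, zp)
        print(f"x={x:7.2f}  qdq={qdq:6.2f}  fake_quantize={fake_quantize(x, *params):6.2f}")
```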

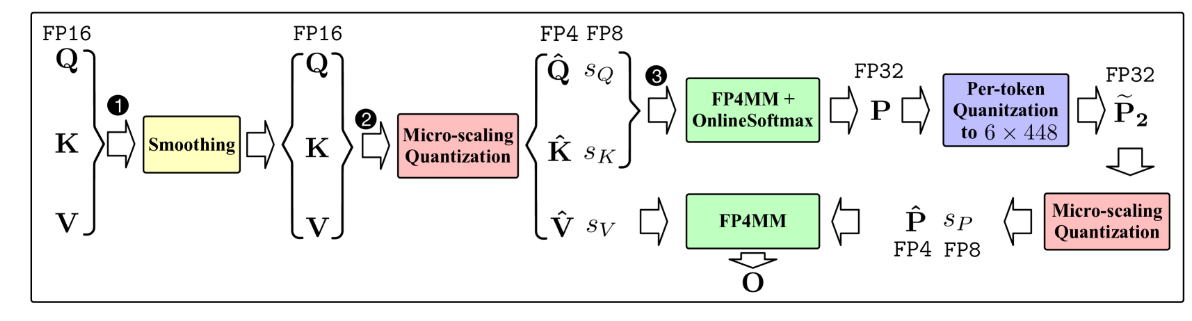

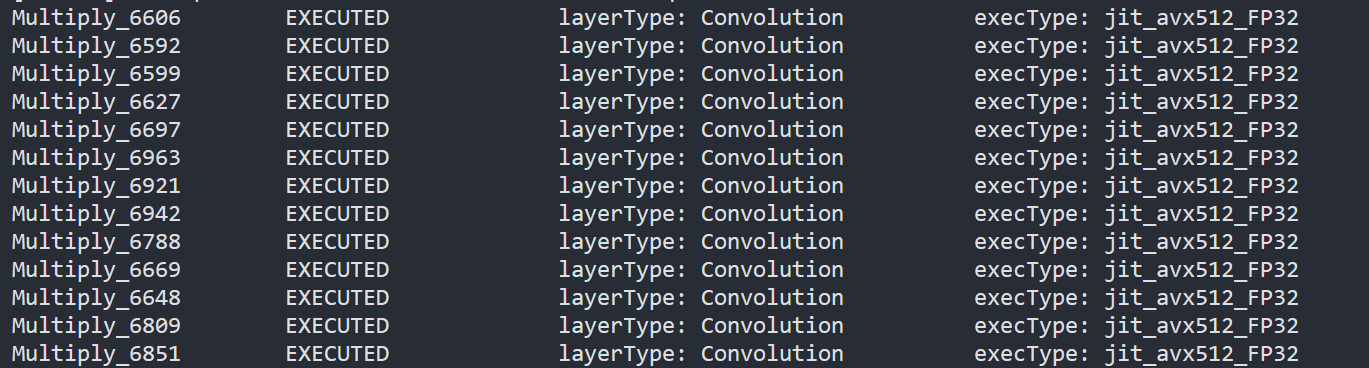

To check which kernels are executed, profile the inference and dump the per-layer execution information; benchmark_app can be used for this. It provides the option "-pc", which reports performance counters.

- To report the performance counters of the PaddlePaddle resnet50 floating-point model, we can run benchmark_app on that model with the "-pc" option.

- To report the performance counters of the PaddlePaddle resnet50 quantized model, we can run benchmark_app on the quantized model with the same option.
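For repeatable runs, the two invocations can be scripted. The helper below only assembles a benchmark_app argument list from the standard -m and -d options, the -pc option described above, and the -hint and -data_shape settings listed under Notices & Disclaimers. The model paths are hypothetical placeholders, and `run_benchmark` assumes benchmark_app is installed and on PATH.

```python
import shlex
import subprocess


def benchmark_app_cmd(model_path, device="CPU", perf_counters=True,
                      hint=None, data_shape=None):
    """Assemble a benchmark_app argument list (no shell involved)."""
    cmd = ["benchmark_app", "-m", model_path, "-d", device]
    if hint is not None:
        cmd += ["-hint", hint]              # e.g. "throughput" or "latency"
    if data_shape is not None:
        cmd += ["-data_shape", data_shape]  # e.g. "[1,3,224,224]"
    if perf_counters:
        cmd.append("-pc")                   # report per-layer performance counters
    return cmd


def run_benchmark(model_path, **options):
    """Run benchmark_app (assumed to be on PATH) and return its stdout."""
    cmd = benchmark_app_cmd(model_path, **options)
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


if __name__ == "__main__":
    # Hypothetical paths to the floating-point and quantized resnet50 models.
    for model in ("resnet50_fp32/inference.pdmodel",
                  "resnet50_ptq/inference.pdmodel"):
        print(shlex.join(benchmark_app_cmd(model)))
```

Passing a list to `subprocess.run` avoids shell quoting issues with values such as "[1,3,224,224]".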

Comparing Figure 2.3 and Figure 2.4 shows that the hotspot layers of the PaddlePaddle quantized model are dispatched to integer ISA implementations, which accelerates execution.
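Spotting this shift can be automated once the per-layer counters are exported as (layer, exec_type, real_time) rows. This is a minimal sketch, assuming the kernel precision appears as the last underscore-separated token of exec_type (for example `_I8` or `_FP32`). That naming convention and the sample rows below are illustrative assumptions, not values read from Figure 2.3 or Figure 2.4.

```python
from collections import defaultdict


def time_by_precision(rows, top_n=None):
    """Sum layer time per kernel precision, optionally over the top_n hottest layers.

    rows: iterable of (layer_name, exec_type, real_time_us) tuples.
    Assumes the precision is the token after the last '_' in exec_type.
    """
    hottest = sorted(rows, key=lambda r: r[2], reverse=True)
    if top_n is not None:
        hottest = hottest[:top_n]
    totals = defaultdict(float)
    for _layer, exec_type, real_time_us in hottest:
        totals[exec_type.rsplit("_", 1)[-1]] += real_time_us
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical rows; real values would come from benchmark_app -pc output.
    sample = [
        ("conv1", "jit_avx512_I8", 120.0),
        ("conv2", "brgconv_avx512_I8", 300.0),
        ("fc", "jit_gemm_FP32", 50.0),
        ("pool", "ref_any_FP32", 5.0),
    ]
    print(time_by_precision(sample))           # all layers
    print(time_by_precision(sample, top_n=2))  # two hottest layers only
```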

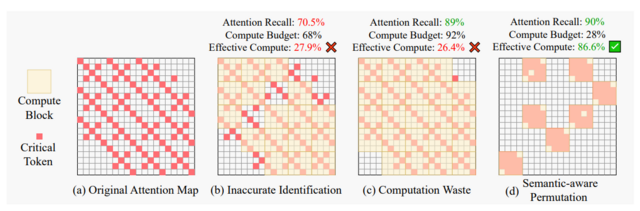

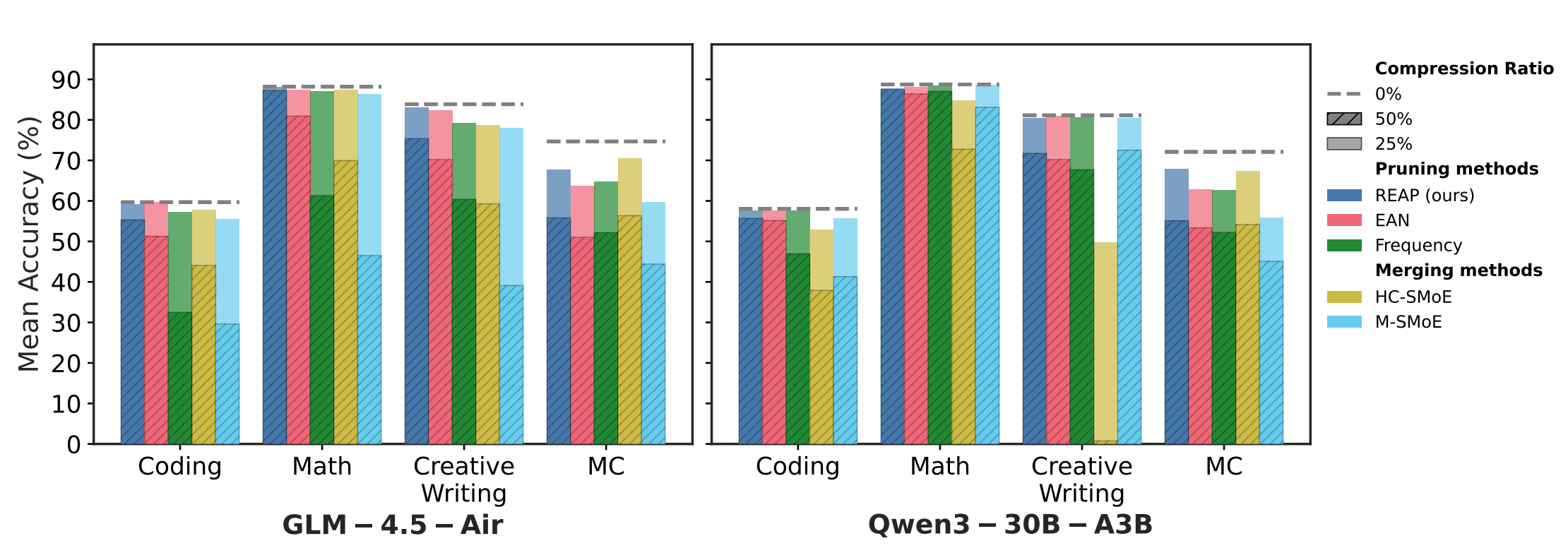

Accuracy

We compare the accuracy of the resnet50 floating-point model with that of the post-training quantization (PaddleSlim PTQ) model. As expected, the accuracy of the PaddlePaddle quantized model decreases only slightly.
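The comparison amounts to evaluating the same metric on both models over the same labeled images. The sketch assumes top-k classification accuracy computed from per-image score lists; the function names are hypothetical, and the toy scores in the usage lines are made up solely to exercise the code.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose label is among the k highest scores."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and aligned")
    hits = 0
    for row, label in zip(scores, labels):
        top_k = sorted(range(len(row)), key=row.__getitem__, reverse=True)[:k]
        hits += label in top_k  # bool counts as 0 or 1
    return hits / len(labels)


def accuracy_drop(fp32_scores, int8_scores, labels, k=1):
    """Percentage-point drop of the quantized model relative to FP32."""
    return 100 * (top_k_accuracy(fp32_scores, labels, k)
                  - top_k_accuracy(int8_scores, labels, k))


if __name__ == "__main__":
    labels = [1, 1, 2]  # toy ground truth
    fp32 = [[0.1, 0.7, 0.2], [0.2, 0.6, 0.2], [0.2, 0.3, 0.5]]
    int8 = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
    print(top_k_accuracy(int8, labels), accuracy_drop(fp32, int8, labels))
```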

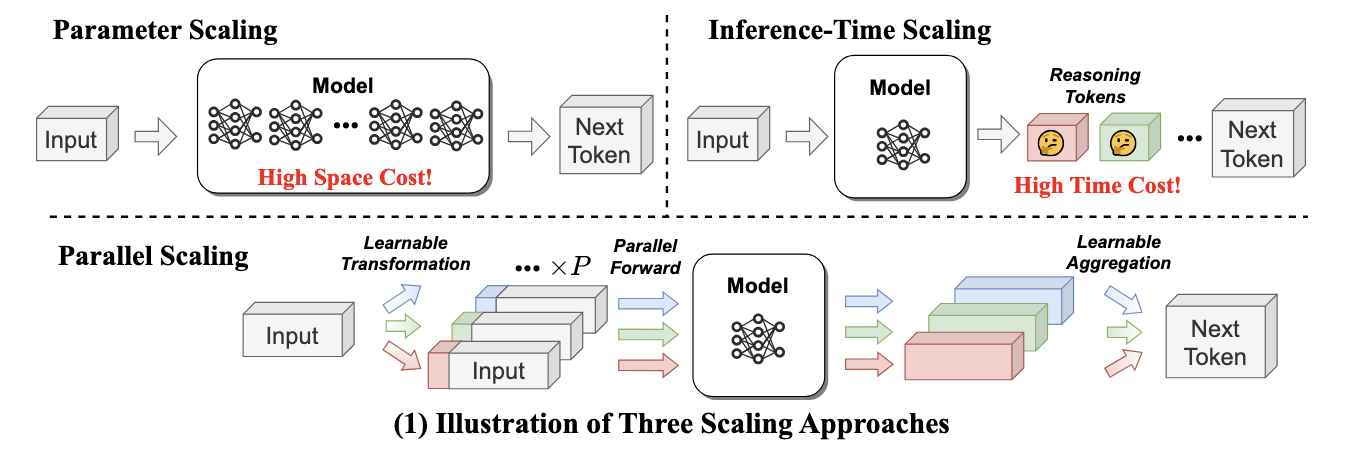

Performance

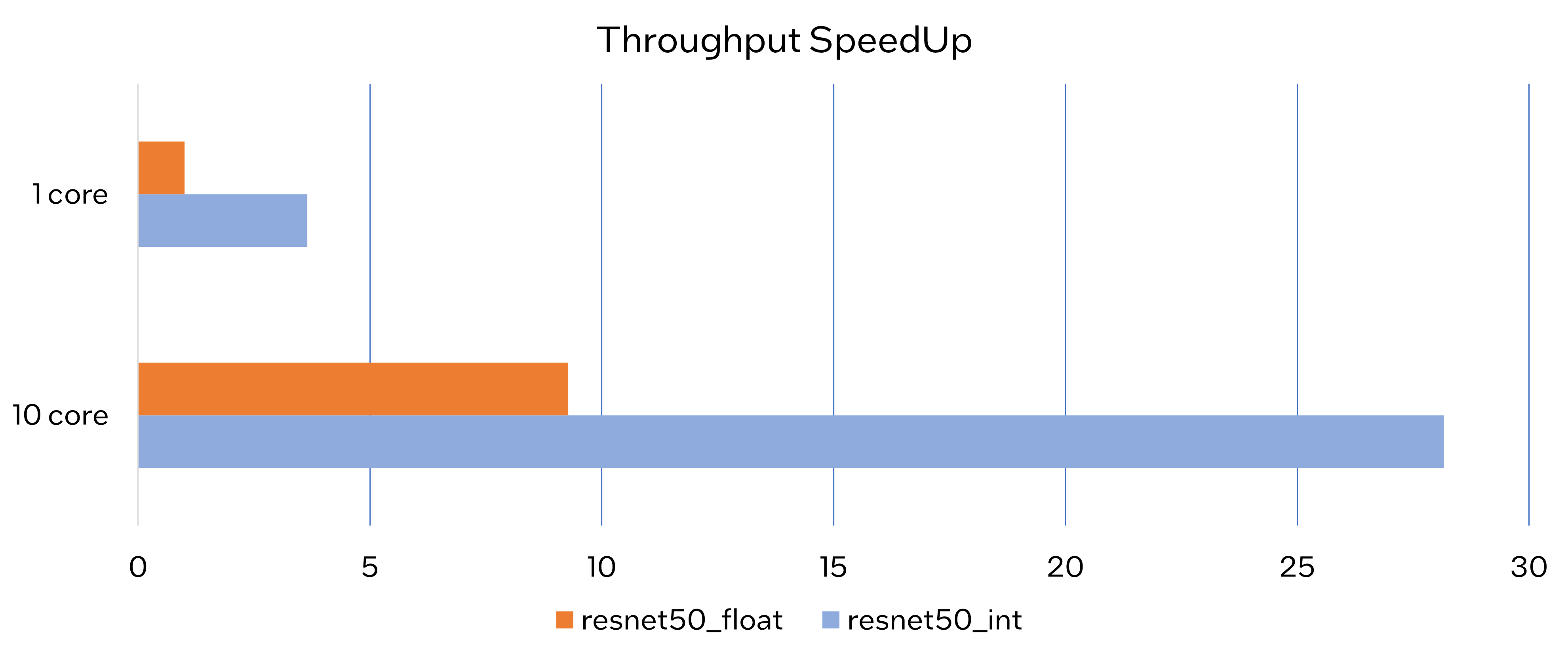

Throughput Speedup

The throughput of the PaddlePaddle quantized resnet50 model improves by more than 3x.

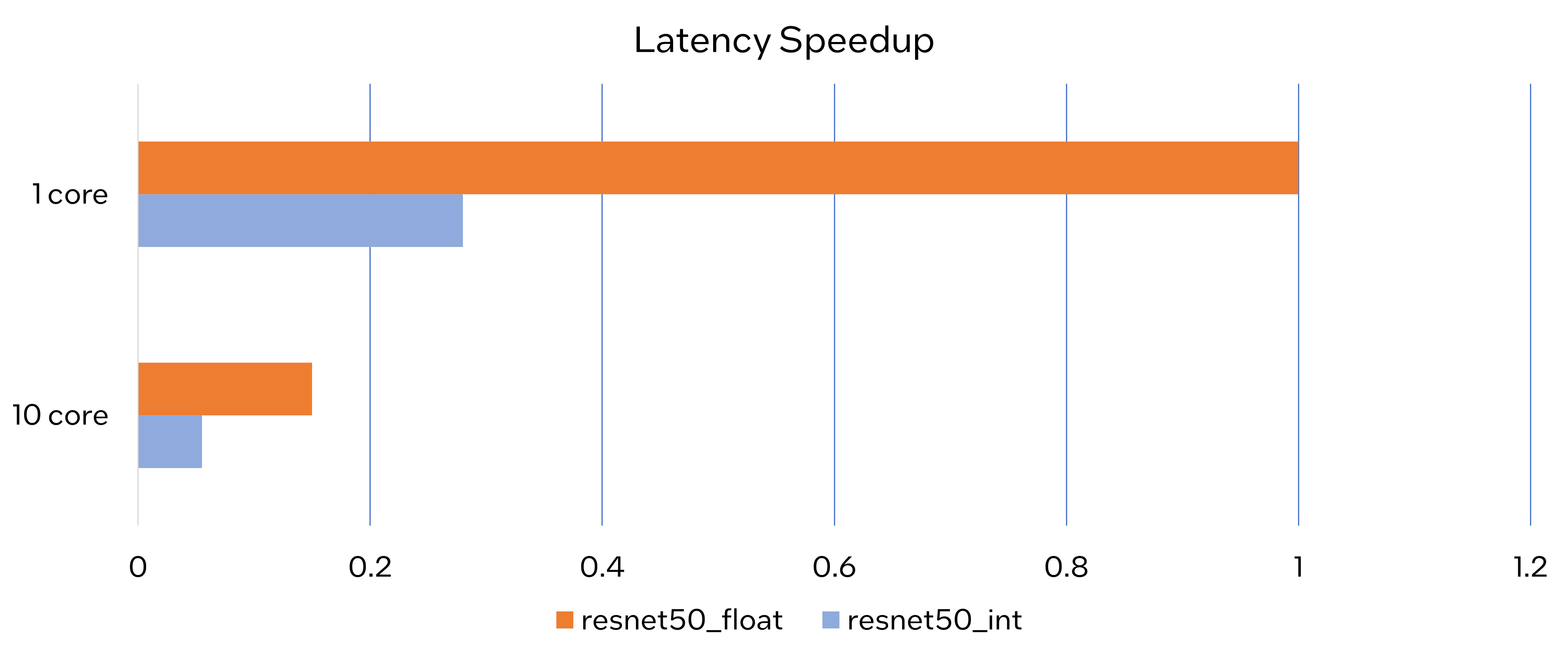

Latency Speedup

The latency of the PaddlePaddle quantized resnet50 model is reduced by about 70%.
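The two headline numbers can be cross-checked with a short derivation. Writing $T$ for throughput and $t$ for latency, with subscripts $f$ (floating-point model) and $q$ (quantized model), a latency reduction $r$ corresponds to a per-request speedup of $1/(1-r)$:

```latex
S_{\text{thr}} = \frac{T_q}{T_f} > 3,
\qquad
r = 1 - \frac{t_q}{t_f} \approx 0.70
\;\Longrightarrow\;
\frac{t_f}{t_q} = \frac{1}{1 - r} \approx \frac{1}{0.30} \approx 3.3
```

So a roughly 70% latency cut and a more than 3x throughput gain describe speedups of similar size, although the two were measured with different benchmark_app hints (throughput and latency) and need not match exactly.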

Conclusion

In this article, we described how OpenVINO™ enables PaddlePaddle quantized models and profiled their accuracy and performance. With PaddlePaddle quantized models enabled in OpenVINO™, customers can easily improve both the throughput and the latency of their deployments.

Notices & Disclaimers

- The accuracy data is collected on the 50,000 images of the ILSVRC2012 validation set.

- The throughput performance data is collected with benchmark_app using data_shape "[1,3,224,224]" and the throughput hint.

- The latency performance data is collected with benchmark_app using data_shape "[1,3,224,224]" and the latency hint.

- The test machine uses an Intel® Xeon® Gold 6346 CPU @ 3.10GHz.

- The PaddlePaddle quantized model can be obtained from https://github.com/PaddlePaddle/FastDeploy/blob/develop/docs/en/quantize.md.
