OpenVINO.GenAI Delivers C API for Seamless Language Interop with Practical Examples in .NET

Authors: Tong Qiu, Xiake Sun

OpenVINO.GenAI 2025.1 introduces a C API, primarily to improve interoperability with other programming languages, so that developers can use OpenVINO-based generative AI from a wider range of coding environments.

C's ABI is more stable than that of C++, so C often serves as an interface layer or bridge language for cross-language interoperability and integration. This allows developers to keep the performance benefits of C++ in the backend while using other high-level languages for easier implementation and integration.

As a first milestone, we have delivered only the LLMPipeline and its associated C API. If you have other requirements or encounter any issues during usage, please submit an issue to OpenVINO.GenAI.

The C API is already used in a Go application, Ollama (please refer to https://blog.openvino.ai/blog-posts/ollama-integrated-with-openvino-accelerating-deepseek-inference), which includes more comprehensive features, such as performance benchmarking, for developers' reference.

Now, let's dive into the design logic of the C API, using a .NET C# example as a case study, based on the Windows platform with .NET 8.0.

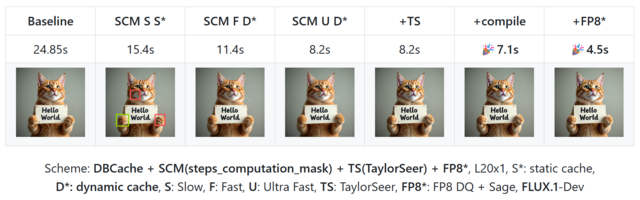

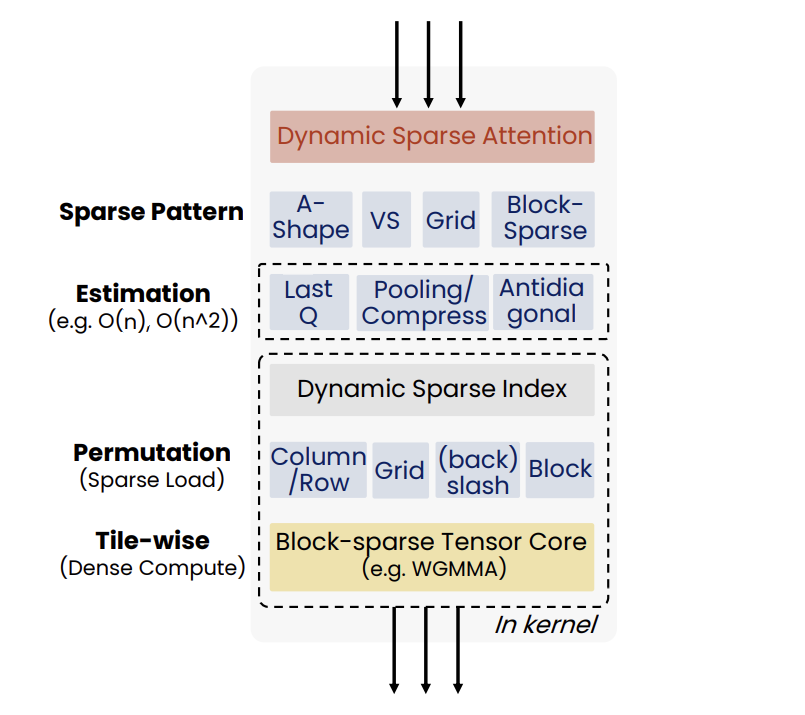

Live Demo

Before we dive into the details, let's take a look at the final C# version of the ChatSample, which supports multi-turn conversations. Below is a live demo.

How to Build a Chat Sample in C#

P/Invoke: Wrapping Unmanaged Code in .NET

First, the official GenAI C API can be found in this folder https://github.com/openvinotoolkit/openvino.genai/tree/master/src/c/include/openvino/genai/c . We also provide several pure C samples https://github.com/openvinotoolkit/openvino.genai/tree/master/samples/c/text_generation . Now, we will build our own C# Chat Sample based on the chat_sample_c. This sample can facilitate multi-turn conversations with the LLM.

C# can access structures, functions and callbacks in the unmanaged library openvino_genai_c.dll through P/Invoke. This example demonstrates how to invoke unmanaged functions from managed code.

    using System;
    using System.Runtime.InteropServices;

    public static class NativeMethods
    {
        // Creates a native pipeline and returns its handle through `pipe`.
        [DllImport("openvino_genai_c.dll", CallingConvention = CallingConvention.Cdecl)]
        public static extern ov_status_e ov_genai_llm_pipeline_create(
            [MarshalAs(UnmanagedType.LPStr)] string models_path,
            [MarshalAs(UnmanagedType.LPStr)] string device,
            out IntPtr pipe);

        // Releases a handle obtained from ov_genai_llm_pipeline_create.
        [DllImport("openvino_genai_c.dll", CallingConvention = CallingConvention.Cdecl)]
        public static extern void ov_genai_llm_pipeline_free(IntPtr pipeline);

        // Other methods
    }

The code imports the dynamic library openvino_genai_c.dll, which in turn depends on openvino_genai.dll. CallingConvention = CallingConvention.Cdecl corresponds to __cdecl, the default calling convention in C, which defines the argument-passing order, the stack-maintenance responsibility, and the name-decoration convention. For more details, refer to Argument Passing and Naming Conventions.

Additionally, the return type ov_status_e reuses an enum type from openvino_c.dll to indicate the execution status of the function. We need to define a corresponding enum type in C# with the same integer values, for example:

    public enum ov_status_e
    {
        OK = 0,
        GENERAL_ERROR = -1,
        NOT_IMPLEMENTED = -2,
        //...
    }
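On the C side the status type is an ordinary enum, so the C# mirror only has to reproduce the same integer values. The sketch below is a rough illustration: demo_status_e, demo_status_name and demo_check are hypothetical names, and the enum copies only the three values quoted above rather than the real ov_status_e. It shows one way a caller might turn a status code into a readable name for logging.

```c
#include <stdio.h>

/* Local stand-in for illustration only: it copies the three values shown
   above and is not the real ov_status_e from the OpenVINO headers. */
typedef enum {
    DEMO_OK = 0,
    DEMO_GENERAL_ERROR = -1,
    DEMO_NOT_IMPLEMENTED = -2
} demo_status_e;

/* Map a status code to a printable name; unknown codes get a fallback. */
const char* demo_status_name(demo_status_e status) {
    switch (status) {
    case DEMO_OK:              return "OK";
    case DEMO_GENERAL_ERROR:   return "GENERAL_ERROR";
    case DEMO_NOT_IMPLEMENTED: return "NOT_IMPLEMENTED";
    default:                   return "UNKNOWN";
    }
}

/* Log a failure to stderr; returns 1 when the call succeeded, 0 otherwise. */
int demo_check(demo_status_e status, const char* what) {
    if (status != DEMO_OK) {
        fprintf(stderr, "%s failed: %s (%d)\n",
                what, demo_status_name(status), (int)status);
        return 0;
    }
    return 1;
}
```

A caller would wrap each API call, for instance demo_check(status, "create pipeline"), and bail out when it returns 0. The C# wrapper in this article does the equivalent by comparing against ov_status_e.OK and throwing.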

Next, we implement our C# LlmPipeline class, which implements the IDisposable interface. This means that its instances must be disposed after use to release the unmanaged resources they hold. The native object behind the pointer is allocated and released only through the C interface provided by OpenVINO.GenAI (ov_genai_llm_pipeline_create and ov_genai_llm_pipeline_free), so the C# side never allocates or frees that memory itself. It only has to make sure the free function is called once, which Dispose does, and this reduces the risk of manual memory errors.

    public class LlmPipeline : IDisposable
    {
        private IntPtr _nativePtr;

        public LlmPipeline(string modelPath, string device)
        {
            var status = NativeMethods.ov_genai_llm_pipeline_create(modelPath, device, out _nativePtr);
            if (_nativePtr == IntPtr.Zero || status != ov_status_e.OK)
            {
                Console.WriteLine($"Error: {status} when creating LLM pipeline.");
                throw new Exception("Failed to create LLM pipeline.");
            }
            Console.WriteLine("LLM pipeline created successfully!");
        }

        public void Dispose()
        {
            if (_nativePtr != IntPtr.Zero)
            {
                NativeMethods.ov_genai_llm_pipeline_free(_nativePtr);
                _nativePtr = IntPtr.Zero;
            }
            GC.SuppressFinalize(this);
        }

        // Other Methods
    }
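Seen from the native side, the create/free pair that such a wrapper calls follows the usual opaque-handle pattern: the library allocates the object, hands back a pointer through an out-parameter, and expects the same pointer to be passed to its own free function. The sketch below is a self-contained miniature of that pattern under stated assumptions; demo_pipeline and its functions are hypothetical and do not reproduce the OpenVINO.GenAI implementation.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical miniature of an opaque-handle C API. Callers only ever hold
   a pointer to demo_pipeline; a real library would keep the struct body in
   its .c file rather than next to the public declarations. */
typedef struct demo_pipeline demo_pipeline;

struct demo_pipeline {
    char device[16];
};

/* Returns 0 on success and stores the new handle in *out. Returns -1 and
   leaves *out NULL on bad arguments or allocation failure. */
int demo_pipeline_create(const char* device, demo_pipeline** out) {
    if (out == NULL) return -1;
    *out = NULL;
    if (device == NULL) return -1;
    demo_pipeline* p = (demo_pipeline*)calloc(1, sizeof(*p));
    if (p == NULL) return -1;
    /* calloc zeroed the buffer, so the last byte stays a terminator. */
    strncpy(p->device, device, sizeof(p->device) - 1);
    *out = p;
    return 0;
}

/* Releases a handle obtained from demo_pipeline_create; NULL is a no-op. */
void demo_pipeline_free(demo_pipeline* p) {
    free(p);
}

/* Accessor, so callers never need to know the struct layout. */
const char* demo_pipeline_device(const demo_pipeline* p) {
    return p->device;
}
```

The managed wrapper then reduces to bookkeeping: store the pointer returned through the out-parameter, pass it back unchanged, and call the free function exactly once.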

Callback Implementation

Next, let's implement the most complex method of LlmPipeline, the GenerateStream method, which encapsulates the LLM inference process. Let's first take a look at the original C code. The result can be retrieved either via ov_genai_decoded_results or via streamer_callback: ov_genai_decoded_results provides the inference result all at once, while streamer_callback delivers it as a stream. At least one of the two must be non-NULL; they cannot both be NULL. For more information, please refer to the comments in https://github.com/openvinotoolkit/openvino.genai/blob/master/src/c/include/openvino/genai/c/llm_pipeline.h

    // Code snippets from
    // https://github.com/openvinotoolkit/openvino.genai/blob/master/src/c/include/openvino/genai/c/llm_pipeline.h

    typedef enum {
        OV_GENAI_STREAMMING_STATUS_RUNNING = 0,  // Continue to run inference
        OV_GENAI_STREAMMING_STATUS_STOP = 1,     // Stop generation, keep history as is, KV cache includes last request and generated tokens
        OV_GENAI_STREAMMING_STATUS_CANCEL = 2    // Stop generate, drop last prompt and all generated tokens from history, KV cache includes history but last step
    } ov_genai_streamming_status_e;

    // ...

    typedef struct {
        ov_genai_streamming_status_e(OPENVINO_C_API_CALLBACK* callback_func)(const char* str, void* args);  //!< Pointer to the callback function
        void* args;  //!< Pointer to the arguments passed to the callback function
    } streamer_callback;

    // ...

    OPENVINO_GENAI_C_EXPORTS ov_status_e ov_genai_llm_pipeline_generate(ov_genai_llm_pipeline* pipe,
                                                                        const char* inputs,
                                                                        const ov_genai_generation_config* config,
                                                                        const streamer_callback* streamer,
                                                                        ov_genai_decoded_results** results);
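To make the two delivery modes concrete, here is a minimal sketch of a function that accepts an optional per-chunk callback and an optional whole-result buffer, and rejects the call when both are missing. Everything in it (demo_generate_modes, demo_result, demo_chunk_fn, demo_count_chunk, and the -1 error value) is hypothetical; it only mimics the "at least one destination" rule described above and makes no claim about how the real function behaves internally or which error code it returns.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-ins; none of these names come from OpenVINO.GenAI. */
typedef void (*demo_chunk_fn)(const char* chunk, void* args);

typedef struct {
    char text[64];  /* whole result, filled in only when requested */
} demo_result;

/* Delivers `count` chunks one by one through on_chunk, joined into *result,
   or both (a choice made by this sketch). Returns 0 on success and -1 when
   there is no input or the caller supplied neither destination. */
int demo_generate_modes(const char* const* chunks, size_t count,
                        demo_chunk_fn on_chunk, void* args,
                        demo_result* result) {
    if (chunks == NULL) return -1;
    if (on_chunk == NULL && result == NULL) return -1;
    if (result != NULL) result->text[0] = '\0';
    for (size_t i = 0; i < count; ++i) {
        if (on_chunk != NULL) on_chunk(chunks[i], args);
        if (result != NULL) {
            /* Append without overflowing; extra text is silently dropped. */
            size_t room = sizeof(result->text) - 1 - strlen(result->text);
            strncat(result->text, chunks[i], room);
        }
    }
    return 0;
}

/* Example streaming consumer: counts how many chunks it has seen. */
void demo_count_chunk(const char* chunk, void* args) {
    (void)chunk;
    ++*(int*)args;
}
```

Passing only a demo_result gives the all-at-once behavior, passing only a callback gives streaming, and passing neither is reported as an error before any work starts.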

The streamer_callback structure includes not only the callback function itself, but also an additional void* args for enhanced flexibility. This design allows developers to pass custom context or state information to the callback.

For example, in C++ it's common to pass a this pointer through args, enabling the callback function to access class members or methods when invoked.

    // args carries the this pointer
    ov_genai_streamming_status_e callback_func(const char* str, void* args) {
        MyClass* self = static_cast<MyClass*>(args);
        self->DoSomething();
        return OV_GENAI_STREAMMING_STATUS_RUNNING;  // keep generating
    }
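The same idea works in plain C, where args points at a caller-owned struct instead of a C++ object. The following sketch uses local stand-in types (demo_stream_status, demo_streamer, demo_collector) and a hypothetical producer, demo_generate, in place of the real pipeline. It shows a callback that accumulates streamed chunks into its context and asks the producer to stop after a configurable number of chunks.

```c
#include <stddef.h>
#include <string.h>

/* Local stand-ins that mirror the shape of the header excerpt above.
   They are NOT the OpenVINO.GenAI types. */
typedef enum { DEMO_RUNNING = 0, DEMO_STOP = 1, DEMO_CANCEL = 2 } demo_stream_status;

typedef struct {
    demo_stream_status (*callback_func)(const char* str, void* args);
    void* args;  /* opaque to the producer, meaningful only to the callback */
} demo_streamer;

/* Caller-owned context reached through void* args. */
typedef struct {
    char text[128];     /* chunks received so far, concatenated */
    size_t max_chunks;  /* request a stop once this many chunks arrived */
    size_t chunks;      /* number of chunks received */
} demo_collector;

/* Callback: recover the context from args, append the chunk, decide
   whether generation should continue. */
demo_stream_status collect_chunk(const char* str, void* args) {
    demo_collector* self = (demo_collector*)args;
    size_t room = sizeof(self->text) - 1 - strlen(self->text);
    strncat(self->text, str, room);
    self->chunks++;
    return self->chunks >= self->max_chunks ? DEMO_STOP : DEMO_RUNNING;
}

/* Hypothetical producer standing in for the generate call. Returns the
   number of chunks delivered, or -1 if no usable streamer was given. */
int demo_generate(const char* const* chunks, size_t count,
                  const demo_streamer* streamer) {
    if (streamer == NULL || streamer->callback_func == NULL) return -1;
    int delivered = 0;
    for (size_t i = 0; i < count; ++i) {
        demo_stream_status s = streamer->callback_func(chunks[i], streamer->args);
        ++delivered;
        if (s != DEMO_RUNNING) break;  /* STOP and CANCEL both end the loop */
    }
    return delivered;
}
```

With a demo_collector whose max_chunks is 2 and the chunks "Hello", ", " and "world", the producer delivers two chunks and the collector ends up holding "Hello, ". The producer never inspects args, which is what lets a managed runtime pass an opaque handle through it.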

The following C# code defines a class StreamerCallback that connects the C callback function with a C# method. It wraps a C function pointer (MyCallbackDelegate) and a void* args into a struct.

- The ToNativePtr method constructs the streamer_callback structure, allocates a block of unmanaged memory, and copies the structure's data into it, so that it can be passed to a native C function. The caller must release this block with Marshal.FreeHGlobal after the native call returns.

- GCHandle keeps the C# object alive and, via GCHandle.ToIntPtr, provides an opaque handle that can travel through void* args and be turned back into the object inside the callback. The object is not pinned, so native code must not dereference this value.

- The CallbackWrapper method is the actual function that C code will call.

    [UnmanagedFunctionPointer(CallingConvention.Cdecl)]
    public delegate ov_genai_streamming_status_e MyCallbackDelegate(IntPtr str, IntPtr args);

    [StructLayout(LayoutKind.Sequential)]
    public struct streamer_callback
    {
        public MyCallbackDelegate callback_func;
        public IntPtr args;
    }

    public class StreamerCallback : IDisposable
    {
        public Action<string> OnStream;
        public MyCallbackDelegate Delegate;
        private GCHandle _selfHandle;

        public StreamerCallback(Action<string> onStream)
        {
            OnStream = onStream;
            Delegate = new MyCallbackDelegate(CallbackWrapper);
            _selfHandle = GCHandle.Alloc(this);
        }

        public IntPtr ToNativePtr()
        {
            var native = new streamer_callback
            {
                callback_func = Delegate,
                args = GCHandle.ToIntPtr(_selfHandle)
            };
            IntPtr ptr = Marshal.AllocHGlobal(Marshal.SizeOf<streamer_callback>());
            Marshal.StructureToPtr(native, ptr, false);
            return ptr;
        }

        public void Dispose()
        {
            if (_selfHandle.IsAllocated)
                _selfHandle.Free();
        }

        private ov_genai_streamming_status_e CallbackWrapper(IntPtr str, IntPtr args)
        {
            string content = Marshal.PtrToStringAnsi(str) ?? string.Empty;
            if (args != IntPtr.Zero)
            {
                var handle = GCHandle.FromIntPtr(args);
                if (handle.Target is StreamerCallback self)
                {
                    self.OnStream?.Invoke(content);
                }
            }
            return ov_genai_streamming_status_e.OV_GENAI_STREAMMING_STATUS_RUNNING;
        }
    }
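LayoutKind.Sequential matters because native code reads the two fields at fixed offsets. As a rough cross-check, the sketch below prints nothing but exposes the offset and size that a C compiler assigns to a struct of the same shape. demo_streamer_layout and the two helper functions are hypothetical stand-ins, and the enum return type is simplified to int.

```c
#include <stddef.h>

/* Local stand-in with the same two-field shape as streamer_callback in the
   header excerpt; the enum return type is simplified to int here. */
typedef struct {
    int (*callback_func)(const char* str, void* args);
    void* args;
} demo_streamer_layout;

/* Byte offset of args, as the native compiler lays the struct out. */
size_t demo_args_offset(void) {
    return offsetof(demo_streamer_layout, args);
}

/* Total size of the struct, the number of bytes a caller must allocate. */
size_t demo_struct_size(void) {
    return sizeof(demo_streamer_layout);
}
```

On mainstream desktop targets, where function pointers and data pointers have the same size, args sits one pointer width into the struct and the struct is two pointers wide. That is the size the managed side is expected to obtain from Marshal.SizeOf<streamer_callback>() before copying the structure.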

Then we implement the GenerateStream method in the LlmPipeline class.

    public void GenerateStream(string input, GenerationConfig config, StreamerCallback? callback = null)
    {
        IntPtr configPtr = config.GetNativePointer();
        IntPtr decodedPtr; // placeholder
        IntPtr streamerPtr = IntPtr.Zero;

        if (callback != null)
        {
            streamerPtr = callback.ToNativePtr();
        }

        var status = NativeMethods.ov_genai_llm_pipeline_generate(
            _nativePtr,
            input,
            configPtr,
            streamerPtr,
            out decodedPtr
        );

        if (streamerPtr != IntPtr.Zero)
            Marshal.FreeHGlobal(streamerPtr);

        callback?.Dispose();

        if (status != ov_status_e.OK)
        {
            Console.WriteLine($"Error: {status} during generation.");
            throw new Exception("Failed to generate results.");
        }
        return;
    }

We use the following code to invoke our callback and GenerateStream.

    pipeline.StartChat(); // Start a chat session, keeping the history in the KV cache.

    Console.WriteLine("question:");
    while (true)
    {
        string? input = Console.ReadLine();
        if (string.IsNullOrWhiteSpace(input)) break;

        // Print each streamed chunk as soon as it arrives.
        using var streamerCallback = new StreamerCallback((string chunk) =>
        {
            Console.Write(chunk);
        });

        pipeline.GenerateStream(input, generationConfig, streamerCallback);
        input = null;
        Console.WriteLine("\n----------\nquestion:");
    }

    pipeline.FinishChat(); // Finish the chat session and clear the history in the KV cache.

About Deployment

The OpenVINO IR of the LLM, officially released by OpenVINO, can be downloaded directly from Hugging Face:

    git clone https://huggingface.co/OpenVINO/Phi-3.5-mini-instruct-int8-ov

The OpenVINO.GenAI 2025.1 package can be downloaded via this link.

The C# project directly depends on openvino_genai_c.dll, which in turn has transitive dependencies on other toolkit-related DLLs, including Intel TBB libraries.

To ensure proper runtime behavior, all the DLLs delivered with OpenVINO.GenAI, including openvino_genai_c.dll and its dependencies, are bundled and treated as part of the C# project's runtime dependencies.

We use the following cmd commands to download the GenAI package and copy all the required dependent DLLs to the directory containing the *.csproj file.

    curl -O https://storage.openvinotoolkit.org/repositories/openvino_genai/packages/2025.1/windows/openvino_genai_windows_2025.1.0.0_x86_64.zip
    tar -xzvf openvino_genai_windows_2025.1.0.0_x86_64.zip
    xcopy /y openvino_genai_windows_2025.1.0.0_x86_64\runtime\bin\intel64\Release\*.dll "C:\path\to\ChatSample\"
    xcopy /y openvino_genai_windows_2025.1.0.0_x86_64\runtime\3rdparty\tbb\bin\*.dll "C:\path\to\ChatSample\"

Full Implementation

For the full implementation, please refer to https://github.com/apinge/openvino_ai_practice/tree/main/ov_genai_interop/ov_genai_interop_net
