AI-pipeline

Ollama Integrated with OpenVINO, Accelerating DeepSeek Inference

Authors: Hongbo Zhao, Fiona Zhao, Tong Qiu

Why Choose the Ollama + OpenVINO Combination?

Dual-Engine Driven Technical Advantages

The integration of Ollama and OpenVINO delivers a powerful dual-engine solution for the management and inference of large language models (LLMs). Ollama offers a streamlined model management toolchain, while OpenVINO provides efficient acceleration capabilities for model inference across Intel hardware (CPU/GPU/NPU). This combination not only simplifies the deployment and invocation of models but also significantly enhances inference performance, making it particularly suitable for scenarios demanding high performance and ease of use.

You can find more information in the GitHub repository:

https://github.com/openvinotoolkit/openvino_contrib/tree/master/modules/ollama_openvino

Core Value of Ollama

1. Streamlined LLM Management Toolchain: Ollama provides a user-friendly command-line interface, enabling users to effortlessly download, manage, and run various LLM models.

2. One-Click Model Deployment: With simple commands, users can quickly deploy and invoke models without complex configurations.

3. Unified API Interface: Ollama offers a unified API interface, making it easy for developers to integrate into various applications.

4. Active Open-Source Community: Ollama boasts a vibrant open-source community, providing users with abundant resources and support.

Limitations of Ollama

Currently, Ollama only supports llama.cpp as its backend, which presents some inconveniences:

1. Limited Hardware Compatibility: llama.cpp is primarily optimized for CPUs and NVIDIA GPUs, and cannot fully leverage the acceleration capabilities of Intel GPUs or NPUs, resulting in suboptimal performance in high-performance computing scenarios.

2. Performance Bottlenecks: For large-scale models or high-concurrency scenarios, the performance of llama.cpp may fall short, especially when handling complex tasks, leading to slower inference speeds.

Breakthrough Capabilities of OpenVINO

1. Deep Optimization for Intel Hardware (CPU/iGPU/Arc dGPU/NPU): OpenVINO is deeply optimized for Intel hardware, fully leveraging the performance potential of CPUs, iGPUs, dGPUs, and NPUs.

2. Cross-Platform Heterogeneous Computing Support: OpenVINO supports cross-platform heterogeneous computing, enabling efficient model inference across different hardware platforms.

3. Model Quantization and Compression Toolchain: OpenVINO provides a comprehensive toolchain for model quantization and compression, significantly reducing model size and improving inference speed.

4. Significant Inference Performance Improvement: Through OpenVINO's optimizations, model inference performance can be significantly enhanced, especially for large-scale models and high-concurrency scenarios.

5. Extensibility and Flexibility Support: OpenVINO GenAI offers robust extensibility and flexibility for Ollama-OV, supporting pipeline optimization techniques such as speculative decoding, prompt-lookup decoding, pipeline parallelization, and continuous batching, laying a solid foundation for future pipeline serving optimizations.

Developer Benefits of Integration

1. Simplified Development Experience: Retains Ollama's CLI interaction features, allowing developers to continue using familiar command-line tools for model management and invocation.

2. Performance Leap: Achieves hardware-level acceleration through OpenVINO, significantly boosting model inference performance, especially for large-scale models and high-concurrency scenarios.

3. Multi-Hardware Adaptation and Ecosystem Expansion: OpenVINO's support enables Ollama to adapt to multiple hardware platforms, expanding its application ecosystem and providing developers with more choices and flexibility.

Three Steps to Enable Acceleration

1. Download Precompiled Executables

Please refer to: https://github.com/zhaohb/ollama_ov/tree/main?tab=readme-ov-file#google-driver

2. Configure OpenVINO GenAI Environment

For Windows systems, first extract the downloaded OpenVINO GenAI package to the directory openvino_genai_windows_2025.2.0.0.dev20250320_x86_64, then execute the following commands:

cd openvino_genai_windows_2025.2.0.0.dev20250320_x86_64

setupvars.bat

3. Set Up cgocheck

Windows:

set GODEBUG=cgocheck=0

Linux:

export GODEBUG=cgocheck=0

At this point, the executable files have been downloaded, and the OpenVINO GenAI, OpenVINO, and CGO environments have been successfully configured.
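When Ollama is launched from a script rather than an interactive shell, the same GODEBUG setting can be applied to the child process environment. The following is a minimal sketch; the helper name `with_cgocheck_disabled` is hypothetical and is not part of Ollama or OpenVINO.

```python
import os


def with_cgocheck_disabled(base_env=None):
    """Return a copy of the environment with cgocheck=0 merged into GODEBUG.

    GODEBUG is a comma-separated list of name=value pairs, so settings
    that are already present are preserved.
    """
    env = dict(os.environ if base_env is None else base_env)
    settings = [s for s in env.get("GODEBUG", "").split(",") if s]
    # Drop any previous cgocheck entry, then append the one we want.
    settings = [s for s in settings if not s.startswith("cgocheck=")]
    settings.append("cgocheck=0")
    env["GODEBUG"] = ",".join(settings)
    return env
```

The returned mapping can be passed as the `env` argument of `subprocess.run` when starting the Ollama executable.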

Custom Model Deployment Guide

Since the Ollama Model Library does not support uploading non-GGUF format IR models, we will create an OCI image locally using OpenVINO IR that is compatible with Ollama. Here, we use the DeepSeek-R1-Distill-Qwen-7B model as an example:

1. Download the OpenVINO IR Model

Download the model from ModelScope:

pip install modelscope

modelscope download --model zhaohb/DeepSeek-R1-Distill-Qwen-7B-int4-ov --local_dir ./DeepSeek-R1-Distill-Qwen-7B-int4-ov

2. Package the Downloaded OpenVINO IR Directory

Compress the directory into a *.tar.gz file:

tar -zcvf DeepSeek-R1-Distill-Qwen-7B-int4-ov.tar.gz DeepSeek-R1-Distill-Qwen-7B-int4-ov

3. Create a Modelfile

Define the model configuration in a Modelfile:

FROM DeepSeek-R1-Distill-Qwen-7B-int4-ov.tar.gz

ModelType "OpenVINO"

InferDevice "GPU"

PARAMETER stop ""

PARAMETER stop "```"

PARAMETER stop "</User|>"

PARAMETER stop "<|end_of_sentence|>"

PARAMETER stop "</|"

PARAMETER max_new_token 4096

PARAMETER stop_id 151643

PARAMETER stop_id 151647

PARAMETER repeat_penalty 1.5

PARAMETER top_p 0.95

PARAMETER top_k 50

PARAMETER temperature 0.8

4. Create an Ollama-Compatible Model

Use the Modelfile to create a model supported by Ollama:

ollama create DeepSeek-R1-Distill-Qwen-7B-int4-ov:v1 -f Modelfile

With these steps, we have successfully created the DeepSeek-R1-Distill-Qwen-7B-int4-ov:v1 model, which is now ready for use with the Ollama OpenVINO backend.
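The packaging and Modelfile steps above can also be scripted. The sketch below is only an illustration using the Python standard library: the function names are hypothetical, and only the `FROM`, `ModelType`, `InferDevice`, and `PARAMETER` lines shown in the guide are assumed to be valid Modelfile entries for the OpenVINO backend.

```python
import tarfile
from pathlib import Path


def package_ir_dir(ir_dir, archive_path=None):
    """Compress an OpenVINO IR directory into <dir>.tar.gz (like `tar -zcvf`)."""
    ir_dir = Path(ir_dir)
    archive_path = Path(archive_path or f"{ir_dir}.tar.gz")
    with tarfile.open(archive_path, "w:gz") as tar:
        # Store entries under the directory name, as the tar command does.
        tar.add(ir_dir, arcname=ir_dir.name)
    return archive_path


def render_modelfile(archive_name, device="GPU", parameters=()):
    """Build Modelfile text; `parameters` is a sequence of (name, value) pairs."""
    lines = [
        f"FROM {archive_name}",
        'ModelType "OpenVINO"',
        f'InferDevice "{device}"',
    ]
    lines += [f"PARAMETER {name} {value}" for name, value in parameters]
    return "\n".join(lines) + "\n"
```

Writing the rendered text to a file named Modelfile and running the `ollama create` command from step 4 completes the flow.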

DeepSeek Janus-Pro Model Enabling with OpenVINO

1. Introduction

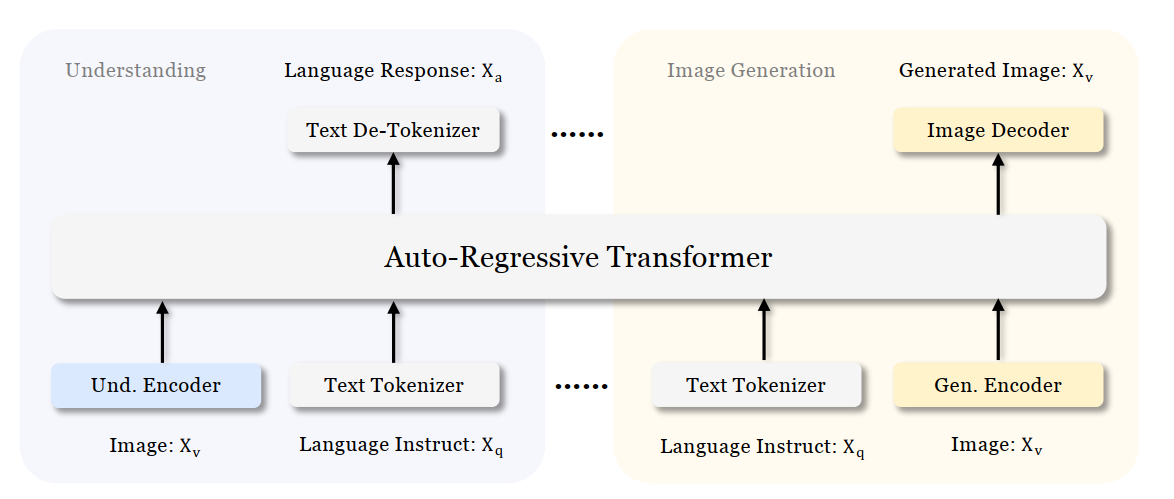

Janus is a unified multimodal understanding and generation model developed by DeepSeek. Janus proposed decoupling visual encoding to alleviate the conflict between multimodal understanding and generation tasks. Janus-Pro further scales up the Janus model to larger model size (deepseek-ai/Janus-Pro-1B & deepseek-ai/Janus-Pro-7B) with optimized training strategy and training data, achieving significant advancements in both multimodal understanding and text-to-image tasks.

Figure 1 shows the architecture of Janus-Pro, which decouples visual encoding for multimodal understanding and visual generation. “Und. Encoder” and “Gen. Encoder” are abbreviations for “Understanding Encoder” and “Generation Encoder”. For the multimodal understanding task, the SigLIP vision encoder is used to extract high-dimensional semantic features from the image, while for the vision generation task, the VQ tokenizer is used to map images to discrete IDs. Both the understanding adaptor and the generation adaptor are two-layer MLPs that map the embeddings to the input space of the LLM.

In this blog, we introduce how to deploy the Janus-Pro model with the OpenVINO™ runtime on Intel platforms.

2. Janus-Pro PyTorch Model to OpenVINO™ Model Conversion

2.1. Setup Python Environment

2.2. Download Janus PyTorch Model (Optional)

2.3. Convert PyTorch Model to OpenVINO™ INT4 Model

The converted OpenVINO™ model will be saved in the Janus-Pro-1B-OV directory for deployment.

3. Janus-Pro Inference with OpenVINO™ Demo

In this section, we provide several examples to show Janus-Pro for multimodal understanding and vision generation tasks.

3.1. Multimodal Understanding Task – Image Caption with OpenVINO™

Prompt: Describe image in details

Input image:

Generated Output:

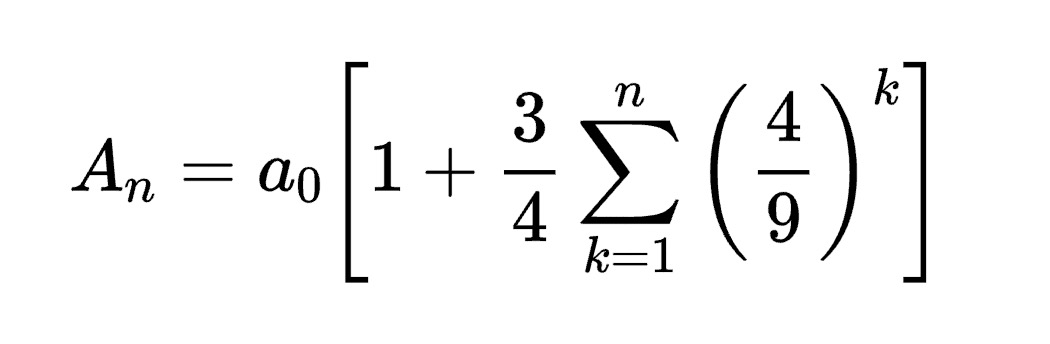

3.2. Multimodal Understanding Task – Equation Description with OpenVINO™

Prompt: Generate the latex code of this formula

Input Image:

Generated Output:

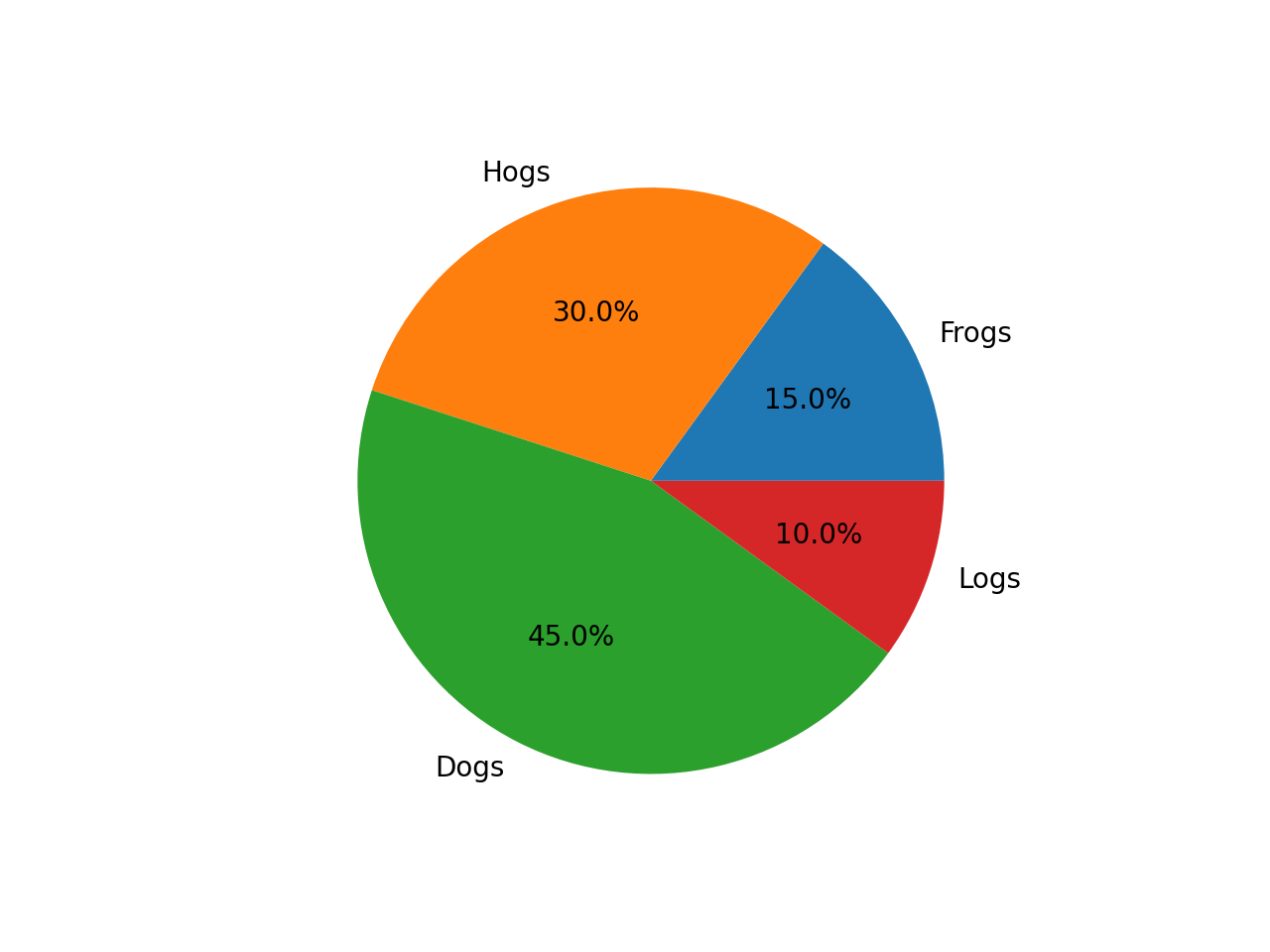

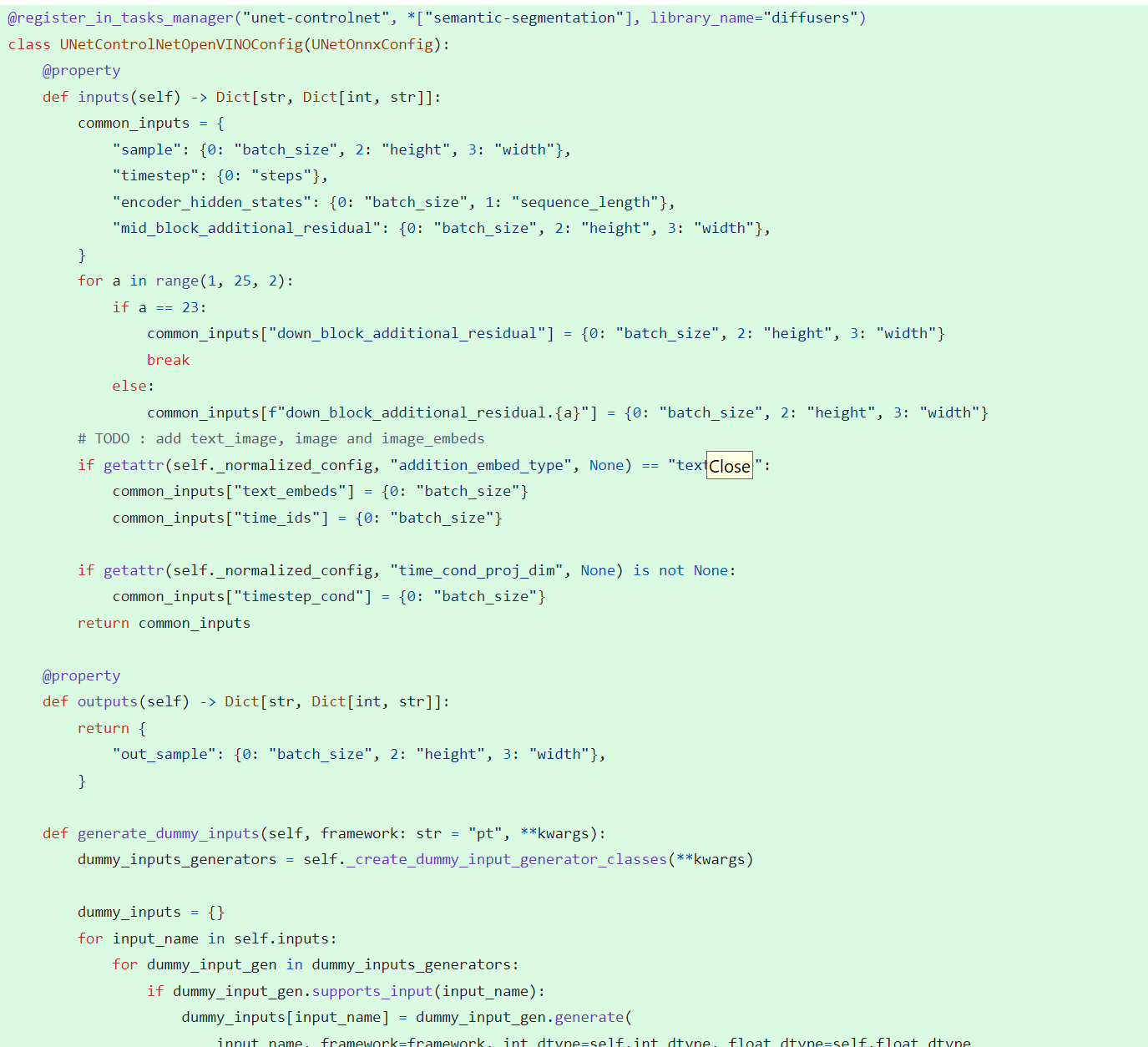

3.3. Multimodal Understanding Task – Code Generation with OpenVINO™

Prompt: Generate the matplotlib pyplot code for this plot

Input Image:

Generated Output:

3.4. Vision Generation Task with OpenVINO™

Input Prompt: A cute and adorable baby fox with big brown eyes, autumn leaves in the background enchanting, immortal, fluffy, shiny mane, Petals, fairyism, unreal engine 5 and Octane Render, highly detailed, photorealistic, cinematic, natural colors.

Generated image:

4. Performance Evaluation & Memory Usage Analysis

We also provide benchmark scripts to evaluate Janus-Pro performance and memory usage with OpenVINO™ inference. You can specify the model name and device for your target platform.

4.1. Benchmark Janus-Pro for Multimodal Understanding Task with OpenVINO™

Here are some arguments for the benchmark script for the Multimodal Understanding Task:

--model_id: specify the Janus OpenVINO™ model directory

--prompt: specify input prompt for multimodal understanding task

--image_path: specify input image for multimodal understanding task

--niter: specify the number of test iterations, default is 5

--device: specify which device to run inference

--max_new_tokens: specify max number of generated tokens

By default, the benchmark script runs 5 rounds of the multimodal understanding task on the target device, then reports the pipeline initialization time, average first-token latency (including preprocessing), 2nd+ token throughput, and max RSS memory usage.
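To make the reported metrics concrete, here is a minimal sketch of how first-token latency and 2nd+ token throughput can be derived from per-token timestamps. It is not the benchmark script itself; `summarize_run` and `benchmark` are hypothetical names, and `generate` stands for any callable that yields at least one token.

```python
import time


def summarize_run(start, token_times):
    """Return (first-token latency in s, 2nd+ token throughput in tokens/s).

    `start` is the timestamp taken just before preprocessing begins and
    `token_times` are the timestamps at which each token was produced.
    """
    first_latency = token_times[0] - start
    later = len(token_times) - 1
    elapsed = token_times[-1] - token_times[0]
    throughput = later / elapsed if later > 0 and elapsed > 0 else 0.0
    return first_latency, throughput


def benchmark(generate, niter=5):
    """Average the metrics over `niter` runs of a token-yielding callable."""
    results = []
    for _ in range(niter):
        start = time.perf_counter()
        # Record a timestamp as each token arrives from the generator.
        stamps = [time.perf_counter() for _token in generate()]
        results.append(summarize_run(start, stamps))
    avg_latency = sum(r[0] for r in results) / niter
    avg_throughput = sum(r[1] for r in results) / niter
    return avg_latency, avg_throughput
```

Memory usage is not covered here; it would need a separate process-level measurement.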

4.2. Benchmark Janus-Pro for Text-to-Image Task with OpenVINO™

Here are some arguments for the benchmark script for the Text-to-Image Task:

--model_id: specify the Janus OpenVINO™ model directory

--prompt: specify input prompt for text-to-image generation task

--niter: specify the number of test iterations

--device: specify which device to run inference

By default, the benchmark script runs 5 rounds of the image generation task on the target device, then reports the pipeline initialization time, average image generation latency, and max RSS memory usage.

5. Conclusion

In this blog, we introduced how to enable the Janus-Pro model with the OpenVINO™ runtime and demonstrated its capability on various multimodal understanding and image generation tasks. Finally, we provided Python scripts for evaluating performance and memory usage for both multimodal understanding and image generation tasks on the target platform.

OpenVINO GenAI Serving (OGS) update

Authors: Xiake Sun, Su Yang, Tianmeng Chen, Tong Qiu

OpenVINO GenAI Serving (OGS) Update:

- Update LLM: stream generation, reset handle, multi-round chat, model cache config

- Support VLM

- Support Reranker for RAG sample

- Support BLIP image embedding for photo search with DB

- Support C++ GUI with imgui for photo search

We now extend the RAG sample from text embedding to image embedding and support a Multi-Vector Retriever for RAG.

- Multi-Vector Retriever for RAG on text: QA over Document

- Multi-Vector Retriever for RAG on image: Photo search with DB retrieval
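The idea behind a multi-vector retriever can be sketched in a few lines: each parent item (a document or a photo) owns several embedding vectors, and a parent is ranked by its best-matching vector. The code below is a generic illustration in pure Python, not the OGS implementation; all names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def multi_vector_search(query, index, top_k=3):
    """Rank parent items by their best-matching vector.

    `index` is a list of (parent_id, vector) pairs; one parent may own
    several vectors (for example, one per chunk).
    """
    best = {}
    for parent_id, vector in index:
        score = cosine(query, vector)
        if score > best.get(parent_id, -2.0):
            best[parent_id] = score
    ranked = sorted(best.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top_k]
```

In a real deployment the similarity search would run inside the vector database rather than in Python.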

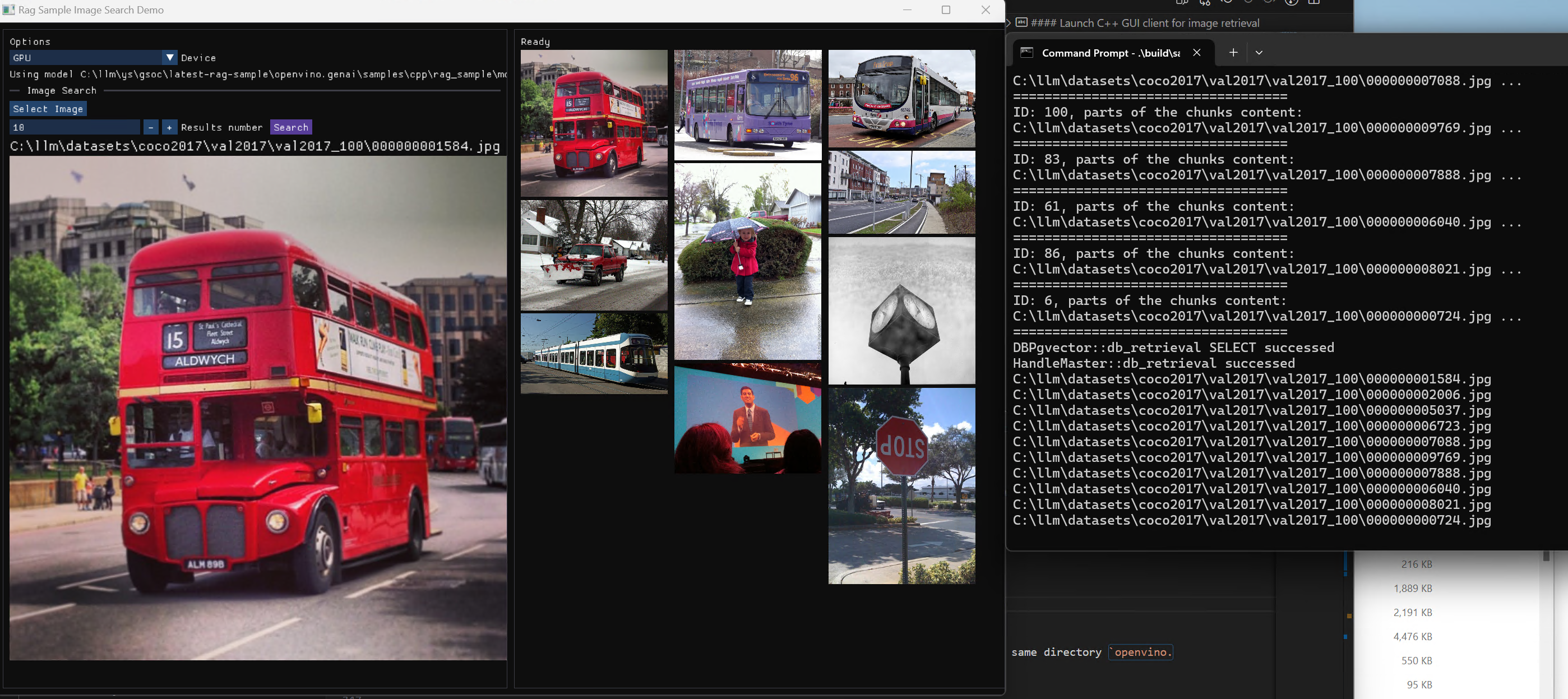

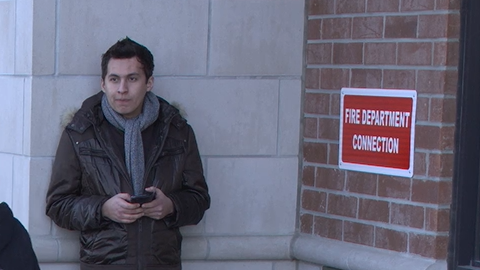

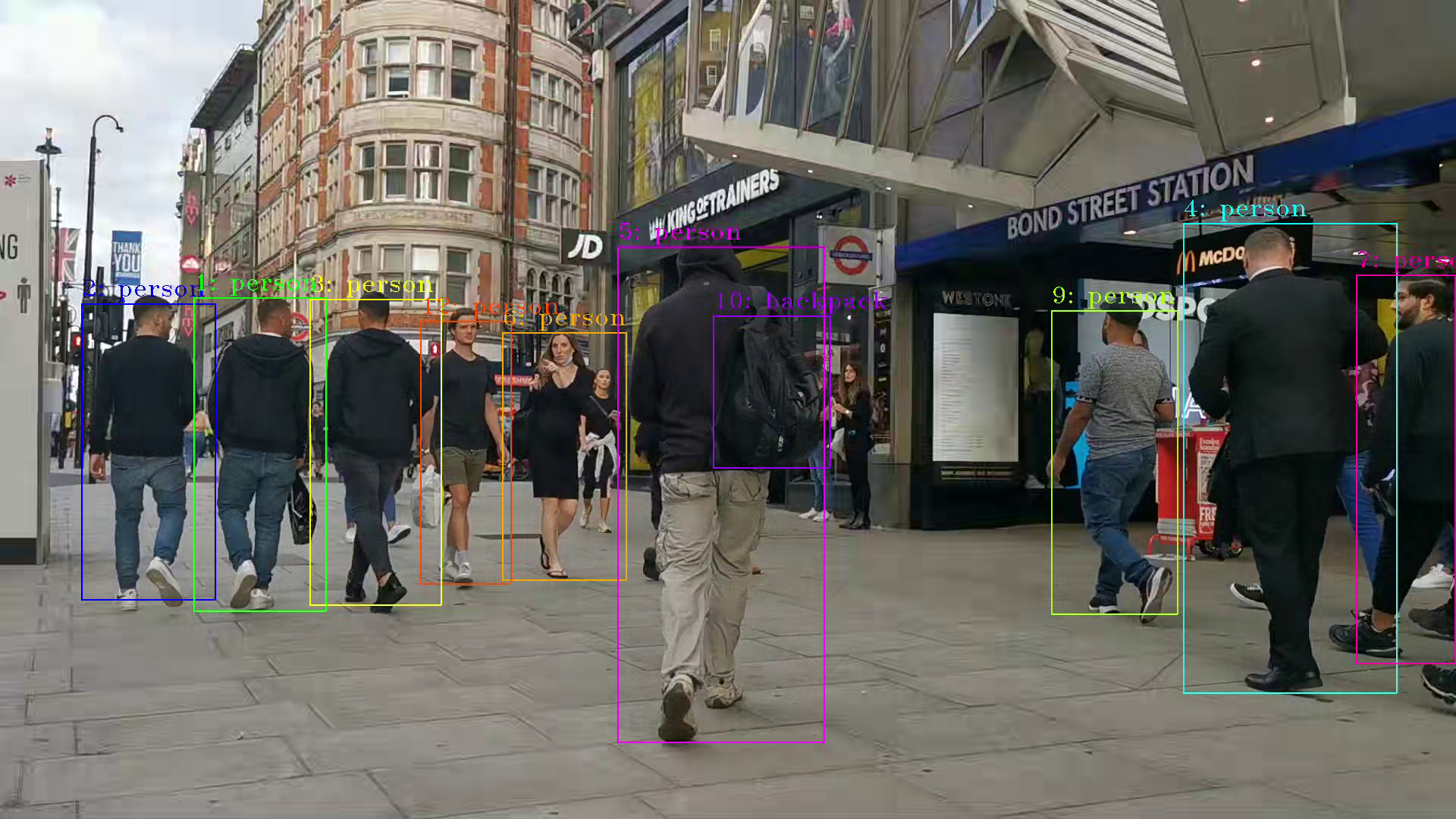

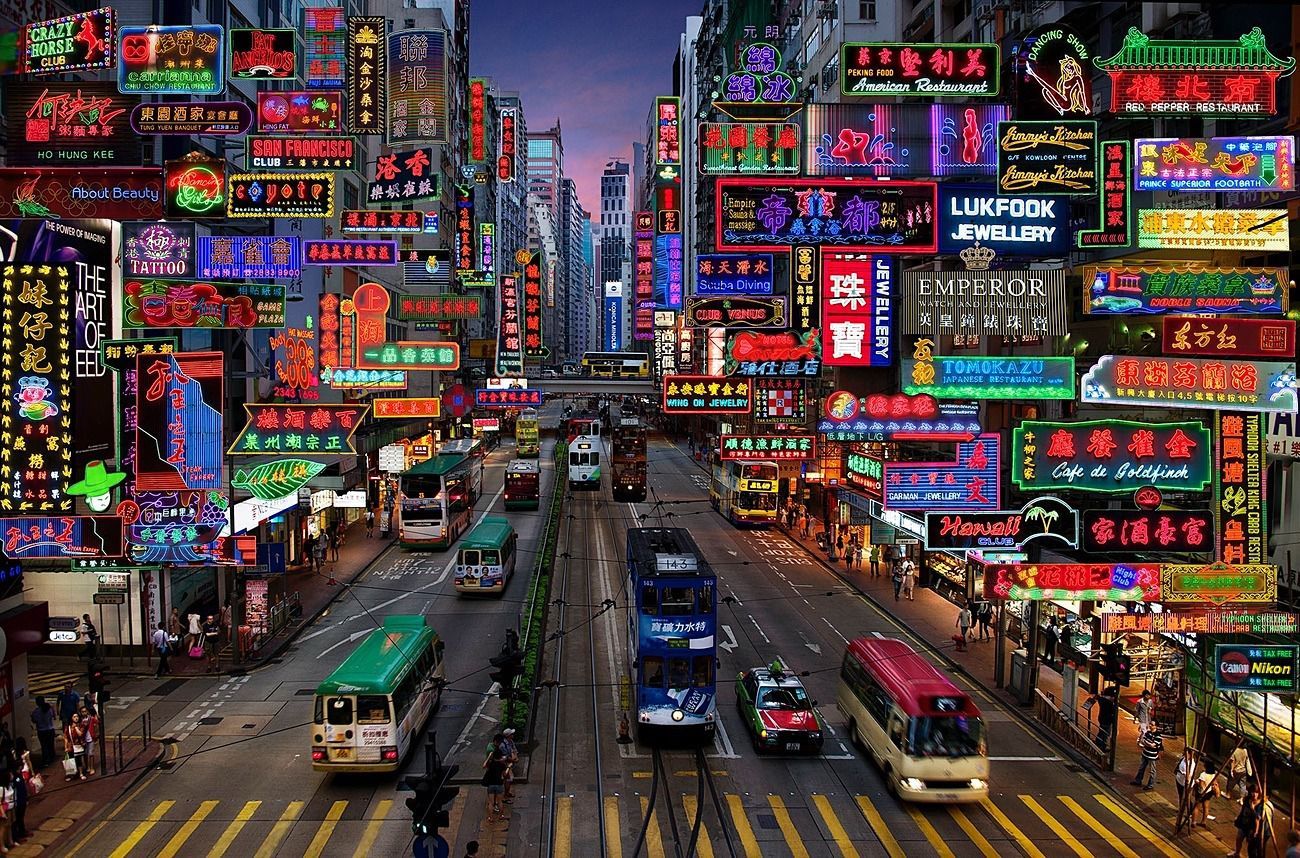

Here is a photo search sample with image embedding.

Usage 2: Photo Search with DB retrieval

Steps:

1. Use the Python client to create the image vector DB (PostgreSQL)

2. Use the GUI to search for images

Here is a sample image that demonstrates GUI usage on a client platform. We search for bus photos and retrieve the top 10 most similar images from the 100 images embedded in the vector DB.

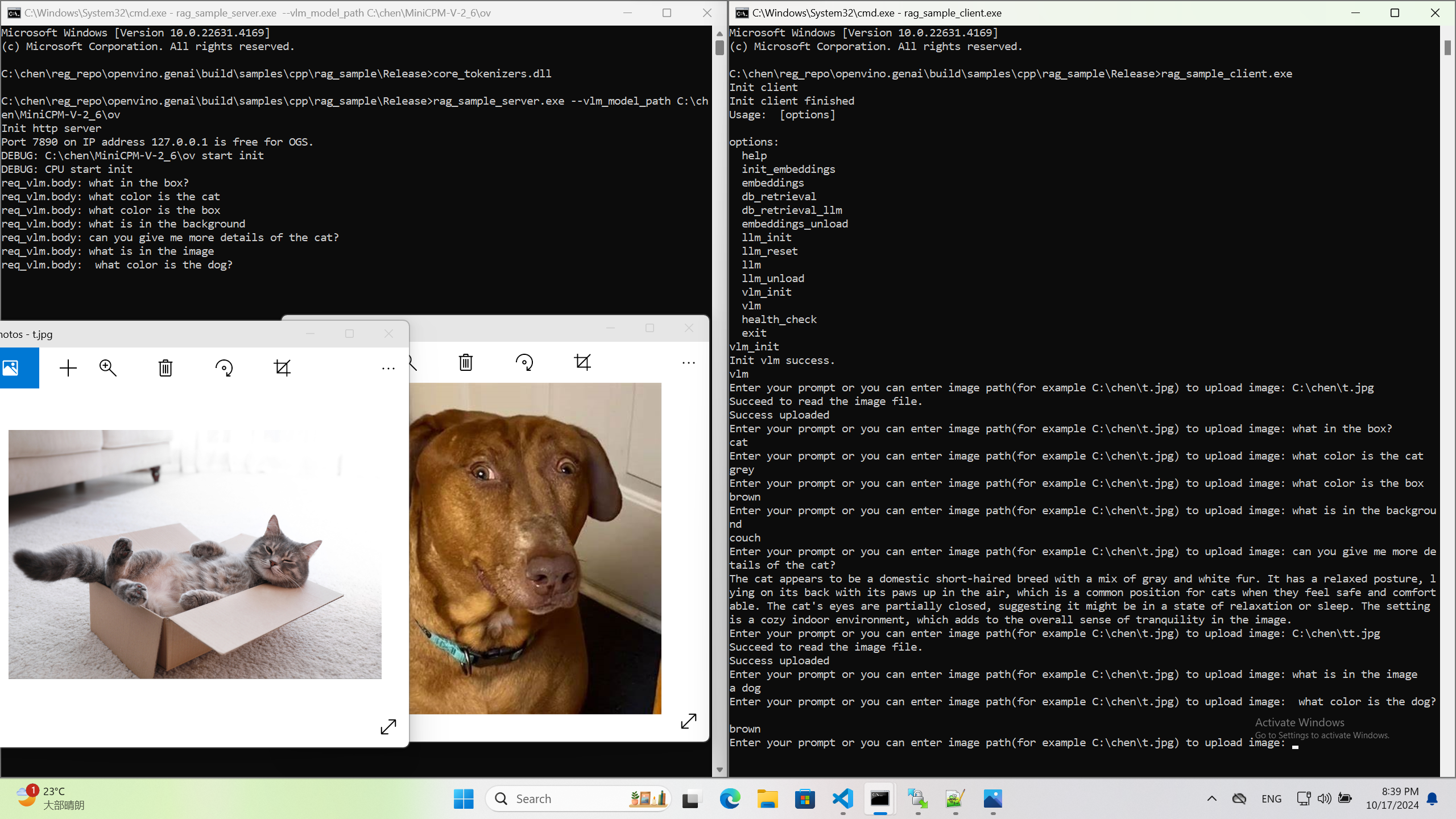

Usage 3: Chat with images via MiniCPM-V

Once we have created a multimodal vector DB through image embedding, we can go further and chat with the images through a VLM.

We integrate the C++ GenAI sample visual_language_chat with openbmb/MiniCPM-V-2_6.

Here is the demo image on client platform.

InternVL2-4B model enabling with OpenVINO

Authors: Hongbo Zhao, Fiona Zhao

Introduction

InternVL2.0 is a series of multimodal large language models available in various sizes. The InternVL2-4B model comprises InternViT-300M-448px, an MLP projector, and Phi-3-mini-128k-instruct. It delivers competitive performance comparable to proprietary commercial models across a range of capabilities, including document and chart comprehension, infographics question answering, scene text understanding and OCR tasks, scientific and mathematical problem solving, as well as cultural understanding and integrated multimodal functionalities.

You can find more information in the GitHub repository: https://github.com/zhaohb/InternVL2-4B-OV

OpenVINO™ backend on InternVL2-4B

Step 1: Install system dependency and setup environment

Create and enable python virtual environment

conda create -n ov_py310 python=3.10 -y

conda activate ov_py310

Clone the InternVL2-4B-OV repository from GitHub

git clone https://github.com/zhaohb/InternVL2-4B-OV

cd InternVL2-4B-OV

Install python dependency

pip install -r requirement.txt

pip install --pre -U openvino openvino-tokenizers --extra-index-url https://storage.openvinotoolkit.org/simple/wheels/nightly

Step 2: Get HuggingFace model

huggingface-cli download --resume-download OpenGVLab/InternVL2-4B --local-dir InternVL2-4B --local-dir-use-symlinks False

cp modeling_phi3.py InternVL2-4B/modeling_phi3.py

cp modeling_intern_vit.py InternVL2-4B/modeling_intern_vit.py

Step 3: Export to OpenVINO™ model

python test_ov_internvl2.py -m ./InternVL2-4B -ov ./internvl2_ov_model -llm_int4_com -vision_int8 -llm_int8_quan -convert_model_only

Step 4: Simple inference test with OpenVINO™

python test_ov_internvl2.py -m ./InternVL2-4B -ov ./internvl2_ov_model -llm_int4_com -vision_int8 -llm_int8_quan

Question: Please describe the image shortly.

Answer:

The image features a close-up view of a red panda resting on a wooden platform. The panda is characterized by its distinctive red fur, white face, and ears. The background shows a natural setting with green foliage and a wooden structure.

Here are the parameters with descriptions:

python test_ov_internvl2.py --help

usage: Export InternVL2 Model to IR [-h] [-m MODEL_ID] -ov OV_IR_DIR [-d DEVICE] [-pic PICTURE] [-p PROMPT] [-max MAX_NEW_TOKENS] [-llm_int4_com] [-vision_int8] [-llm_int8_quant] [-convert_model_only]

options:

-h, --help show this help message and exit

-m MODEL_ID, --model_id MODEL_ID model_id or directory for loading

-ov OV_IR_DIR, --ov_ir_dir OV_IR_DIR output directory for saving model

-d DEVICE, --device DEVICE inference device

-pic PICTURE, --picture PICTURE picture file

-p PROMPT, --prompt PROMPT prompt

-max MAX_NEW_TOKENS, --max_new_tokens MAX_NEW_TOKENS max_new_tokens

-llm_int4_com, --llm_int4_compress llm int4 weights compress

-vision_int8, --vision_int8_quant vision int8 weights quantize

-llm_int8_quant, --llm_int8_quant llm int8 weights dynamic quantize

-convert_model_only, --convert_model_only convert model to ov only, do not do inference test

Supported optimizations

1. Vision model INT8 quantization and SDPA optimization enabled

2. LLM model INT4 compression

3. LLM model INT8 dynamic quantization

4. LLM model with SDPA optimization enabled
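As background for the quantization items above, the following pure-Python sketch shows what symmetric INT8 weight quantization does to a list of weights. It is a conceptual illustration only and does not reproduce how OpenVINO or its compression tooling implements quantization; the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: returns (int values, scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    # Round to the nearest integer step and clamp to the INT8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]
```

Each weight is stored as a small integer plus one shared scale, which is where the size reduction comes from; the reconstruction error per weight is at most half a quantization step.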

Summary

This blog introduces how to use the OpenVINO™ Python API to run the InternVL2-4B pipeline and applies several acceleration methods to improve inference speed.

moondream2 model enabling with OpenVINO

Introduction

moondream2 is a small vision language model designed to run efficiently on edge devices. Although the model has a small number of parameters, it provides high-performance visual processing capabilities. It can quickly understand and process input images and respond to user queries. The model was developed by VikhyatK and is released under the permissive Apache 2.0 license, allowing for commercial use.

You can find more information in the GitHub repository: https://github.com/zhaohb/moondream2-ov

OpenVINO™ backend on moondream2

Step 1: Install system dependency and setup environment

Create and enable python virtual environment

conda create -n ov_py310 python=3.10 -y

conda activate ov_py310

Clone the moondream2-ov repository from GitHub

git clone https://github.com/zhaohb/moondream2-ov

cd moondream2-ov

Install python dependency

pip install -r requirement.txt

pip install --pre -U openvino openvino-tokenizers --extra-index-url https://storage.openvinotoolkit.org/simple/wheels/nightly

Step 2: Get HuggingFace model

git lfs install

git clone https://hf-mirror.com/vikhyatk/moondream2

git -C moondream2 checkout 48be9138e0faaec8802519b1b828350e33525d46

Step 3: Export OpenVINO™ models and simple inference test with OpenVINO™

python3 test_ov_moondream2.py -m /path/to/moondream2 -o /path/to/moondream2_ov

Question: Describe this image.

Answer:

The image shows a modern white desk with a laptop, a lamp, and a notebook on it, set against a gray wall and a wooden floor.

Optimizing MeloTTS for AIPC Deployment with OpenVINO: A Lightweight Text-to-Speech Solution

Authors: Qiu Tong, Zhao Hongbo

MeloTTS, released by MyShell.ai, is a high-quality, multilingual Text-to-Speech (TTS) library that supports English, Chinese (mixed English), and various other languages. The strengths of the model lie in its lightweight design, which is well-suited for applications on AIPC systems, coupled with its impressive performance. In this article, I will guide you through the process of converting the model to be compatible with the OpenVINO toolkit, enabling it to run on various devices such as CPUs, GPUs and NPUs. Additionally, I will provide a concise overview of the model's inference procedure.

Overview of Model Inference Procedure and Pipeline

For each language type, the pipeline requires only two models (two inference procedures). For instance, English language generation necessitates just the 'bert-base-uncased' model and its corresponding MeloTTS-English. Similarly, for Chinese language generation (which includes mixed English), the pipeline needs only the 'bert-base-multilingual-uncased' and MeloTTS-Chinese. This greatly streamlines the pipeline compared to other TTS frameworks, and the compact size of the models makes them suitable for deployment on edge devices.

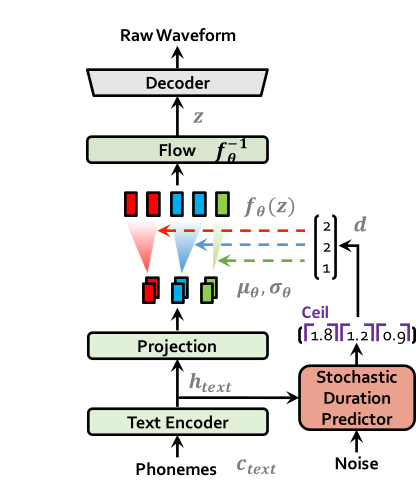

MeloTTS is based on Variational Inference with adversarial learning for end-to-end Text-to-Speech (VITS). The inference process is illustrated in the figure. It encompasses a text encoder, a stochastic duration predictor, and a decoder.

The text encoder accepts phones, tones and a hidden layer from a BERT model as input. It then produces the text encoder's output along with its mean value and a logarithmic variance. To align the input texts with the target speech, the outputs from the encoders are processed using a stochastic duration predictor, which generates an alignment matrix. This matrix is then used to expand the mean value and the logarithmic variance (assuming a Gaussian distribution) to obtain the results for the latent variables. Subsequently, the inverse flow transformation is applied to obtain the distribution of the final latent variable z, which represents the spectrogram. In the decoder, the spectrogram is upsampled to obtain the final audio waveform.
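The expansion and sampling step described above can be sketched as follows. This is a simplified scalar illustration, not the MeloTTS code: real models operate on vectors per frame, the function names are hypothetical, and the second statistic is treated here as the log of the standard deviation, which is an assumption.

```python
import math
import random


def expand_by_duration(values, durations):
    """Repeat each per-phone value for the number of frames it lasts."""
    frames = []
    for value, duration in zip(values, durations):
        frames.extend([value] * duration)
    return frames


def sample_latent(means, log_scales, durations, noise_scale=0.6, rng=random):
    """Expand per-phone statistics to frames and draw one Gaussian sample each.

    Each frame is sampled as mean + eps * exp(log_scale) * noise_scale,
    with eps drawn from a standard normal distribution.
    """
    frame_means = expand_by_duration(means, durations)
    frame_log_scales = expand_by_duration(log_scales, durations)
    return [
        m + rng.gauss(0.0, 1.0) * math.exp(s) * noise_scale
        for m, s in zip(frame_means, frame_log_scales)
    ]
```

Setting `noise_scale` to 0 returns the expanded means unchanged, which shows that the parameter only controls how much randomness is injected.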

def ov_infer(self, phones=None, phones_length=None, speaker_id=None, tones=None, lang_ids=None, bert=None, ja_bert=None, sdp_ratio=0.2, noise_scale=0.6, noise_scale_w=0.8, speed=1.0):

The inference entry point is the ov_infer function shown above. In practical inference, phone refers to the distinct speech sounds, while tone refers to the vocal pitch contour. For Chinese, a phone corresponds to pinyin, and a tone corresponds to one of the four tones. In English, phones are the consonants and vowels, and tones relate to stress patterns. Here, noise_scale and noise_scale_w do not refer to actual noise. Both are components within the Stochastic Duration Predictor, used to introduce randomness in order to enhance the expressiveness of the model.

Note that MeloTTS does not include a voice cloning component, unlike the majority of other TTS models, which makes it more lightweight. If voice cloning is required, please refer to OpenVoice.

Enable Model for OpenVINO

As previously mentioned, the pipeline requires just two models for each language. Taking English as an example, we must first convert both 'bert-base-uncased' and 'MeloTTS-English' into the OpenVINO IR format.

example_input = {
    "x": x_tst,
    "x_lengths": x_tst_lengths,
    "sid": speakers,
    "tone": tones,
    "language": lang_ids,
    "bert": bert,
    "ja_bert": ja_bert,
    "noise_scale": noise_scale,
    "length_scale": length_scale,
    "noise_scale_w": noise_scale_w,
    "sdp_ratio": sdp_ratio,
}

ov_model = ov.convert_model(
    self.model,
    example_input=example_input,
)

# Rename the input tensors to facilitate subsequent development.
input_names = ["phones", "phones_length", "speakers",
               "tones", "lang_ids", "bert", "ja_bert",
               "noise_scale", "length_scale", "noise_scale_w", "sdp_ratio"]
for input_port, input_name in zip(ov_model.inputs, input_names):
    input_port.get_tensor().set_names({input_name})

# Rename the output tensor.
output_names = ['audio']
for output_port, output_name in zip(ov_model.outputs, output_names):
    output_port.get_tensor().set_names({output_name})

# Reshape the model: set the batch size of all input tensors to 1.
shapes = {}
for input_layer in ov_model.inputs:
    shapes[input_layer] = input_layer.partial_shape
    shapes[input_layer][0] = 1
ov_model.reshape(shapes)

ov.save_model(ov_model, Path(ov_model_path))

For instance, we convert the MeloTTS-English model directly from the PyTorch format by using the openvino.convert_model API along with pseudo input data.

Note that the input and output layers are renamed to facilitate subsequent development (this is optional). Furthermore, the batch dimension for all inputs is fixed at 1, as multiple batches are not required here (this is also optional).

We further quantized both the BERT and TTS models to int8 using pseudo data. We observed that our method of quantizing the TTS model introduces a slight distortion to the current sound. To suppress this, we implemented DeepFilterNet, which is also very lightweight.

For more about model conversion and int8 quantization, please refer to MeloTTS-OV.

Run BERT part on NPU

To enhance performance and reduce the load on the CPU, we can shift the execution of the BERT model to the NPU on Meteor Lake.

To adapt the model for the NPU, we converted it to accept static-shape inputs and pad each input during inference.

def reshape_for_npu(model, bert_static_shape = 32):
    # change dynamic shape to static shape
    shapes = dict()
    for input_layer in model.inputs:
        shapes[input_layer] = bert_static_shape
    model.reshape(shapes)
    ov.save_model(model, Path(ov_model_save_path))
    print(f"save static model in {Path(ov_model_save_path)}")

def main():
    core = Core()
    model = core.read_model(ov_model_path)
    reshape_for_npu(model, bert_static_shape=bert_static_shape)
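The padding applied at inference time, mentioned above, can be sketched as follows. This is an illustrative helper, not code from the MeloTTS-OV project; the name `pad_to_static` and the padding id of 0 are assumptions.

```python
def pad_to_static(token_ids, static_len=32, pad_id=0):
    """Pad (or reject) a token sequence so it matches the static shape.

    Returns the padded ids and an attention mask (1 = real token, 0 = padding).
    """
    if len(token_ids) > static_len:
        raise ValueError(f"sequence of {len(token_ids)} tokens exceeds {static_len}")
    padding = static_len - len(token_ids)
    input_ids = list(token_ids) + [pad_id] * padding
    attention_mask = [1] * len(token_ids) + [0] * padding
    return input_ids, attention_mask
```

Inputs longer than the static length have to be split or truncated before calling the model; this sketch simply raises an error in that case.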

Simple Demo

Here are the audio files generated by the int8 quantized model from OpenVINO.

https://github.com/zhaohb/MeloTTS-OV/tree/speech-enhancement-and-npu/demo

Enable Personalized Text-to-Speech Pipeline with SAMBERT-HifiGAN via OpenVINO Python API

Authors: Tianmeng Chen, Xiake Sun, Fiona Zhao, Su Yang

Introduction

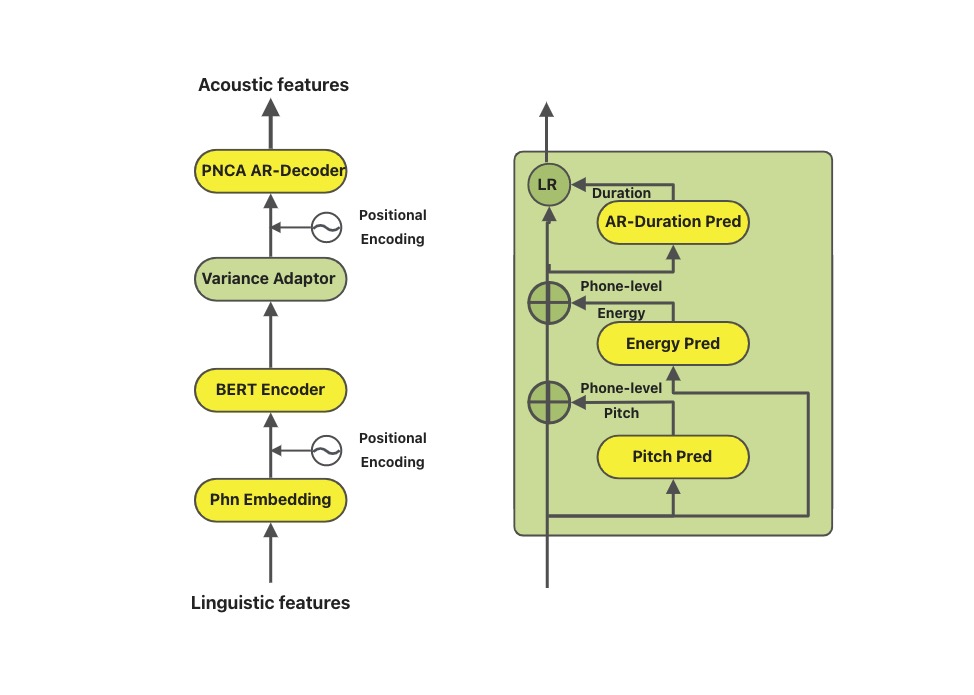

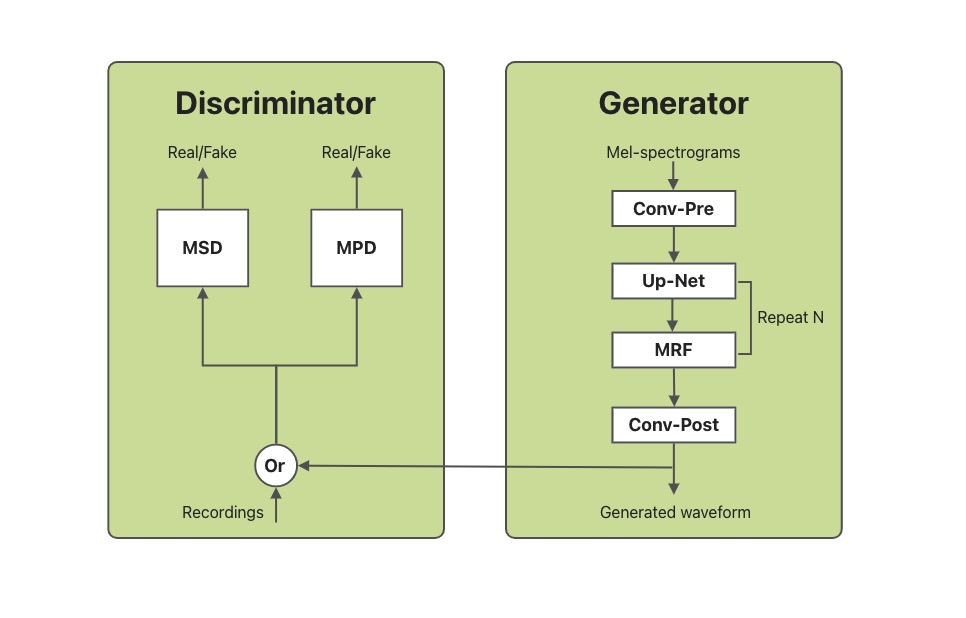

Personalized Speech Synthesis is the process of recording voice clips of a particular person with everyday recording devices and then using Text-To-Speech (TTS) technology to synthesize that person's voice, manner of speaking, and emotion. SAMBERT-HifiGAN is a complete personalized TTS solution designed by Alibaba DAMO Academy, consisting of the SAMBERT acoustic model as the first stage and the HifiGAN vocoder as the second stage.

In this blog, we will introduce how to use the OpenVINO™ Python API to enable the SAMBERT-HifiGAN pipeline. All the project code can be found here.

KAN-TTS by Ali provides a tutorial for training SAMBERT-HifiGAN. A PyTorch-based pipeline for personalized speech synthesis is provided on ModelScope; what we do here is replace the PyTorch-based part of it with OpenVINO™. It is worth noting that, because of some of the operators in the model, some modules cannot be replaced with the OpenVINO™ Python API.

Pre-requisite

Since we need to modify a pipeline built on the PyTorch backend, the first step is to download the KAN-TTS source code and run the pipeline successfully to obtain the model's inputs and outputs as well as the state of the intermediate layers. We also need the OpenVINO™ environment.

- Get the KAN-TTS source code and create a conda environment.

- Then install openvino in the same environment. If you want a specific version of OpenVINO™, you can install it yourself by following Install OpenVINO™.

- Follow the official KAN-TTS practice tutorial and the README on ModelScope.

After you finish running the KAN-TTS pipeline, you will have the res folder and the ckpt files speech_personal_sambert-hifigan_nsf_tts_zh-cn_pretrain_16k.

- Get the OpenVINO™ backend project source code and copy the res folder to the project folder.

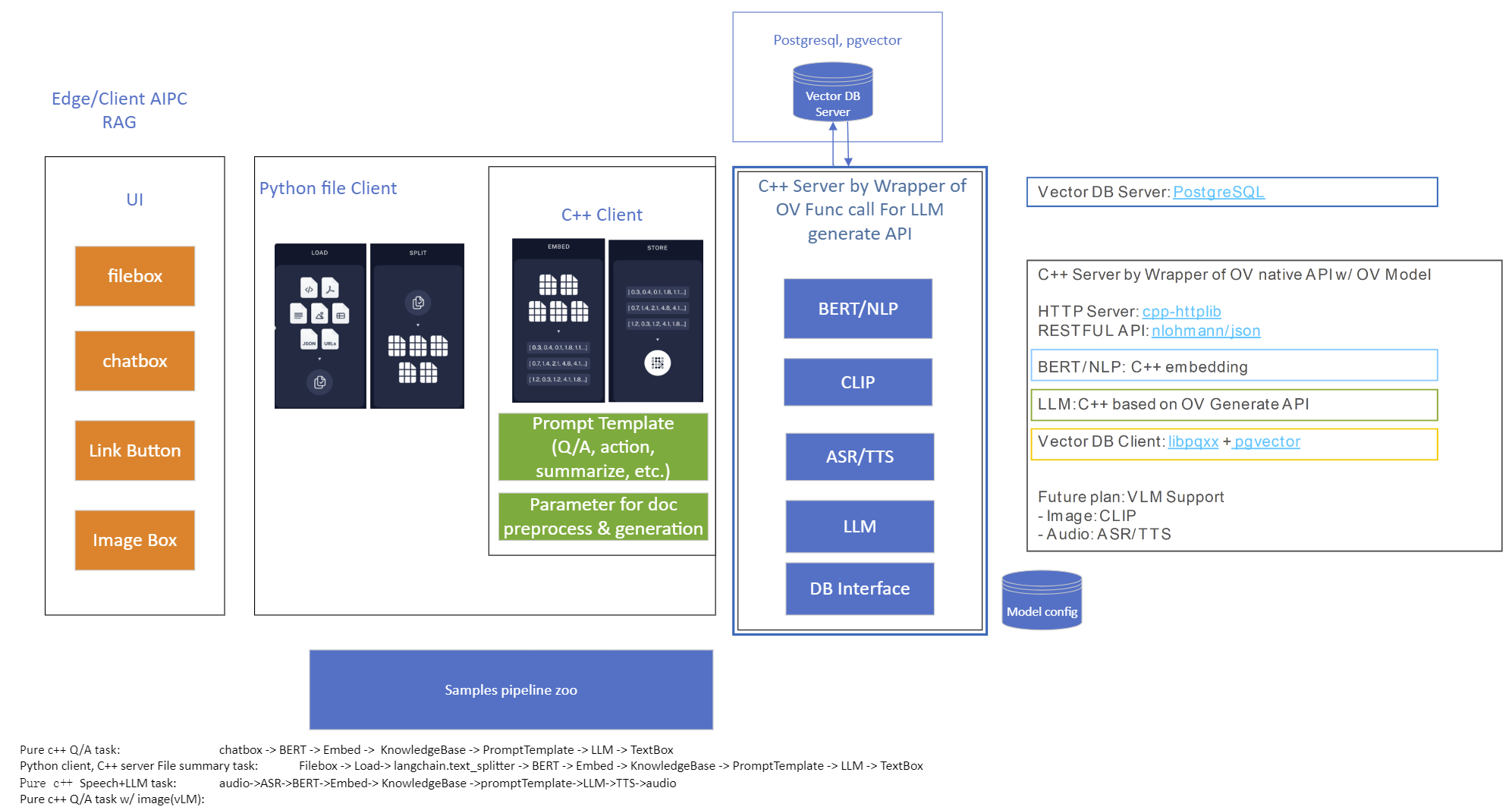

Convert torch model to OpenVINO™ model

Converting a torch model to OpenVINO™ requires model inputs, so we use test.txt as the input of SAMBERT and the res folder as the input of HifiGAN.

After a few minutes, you will get two converted OpenVINO™ models: sambert_encoder.xml with sambert_encoder.bin, and hifigan_t.xml with hifigan_t.bin.

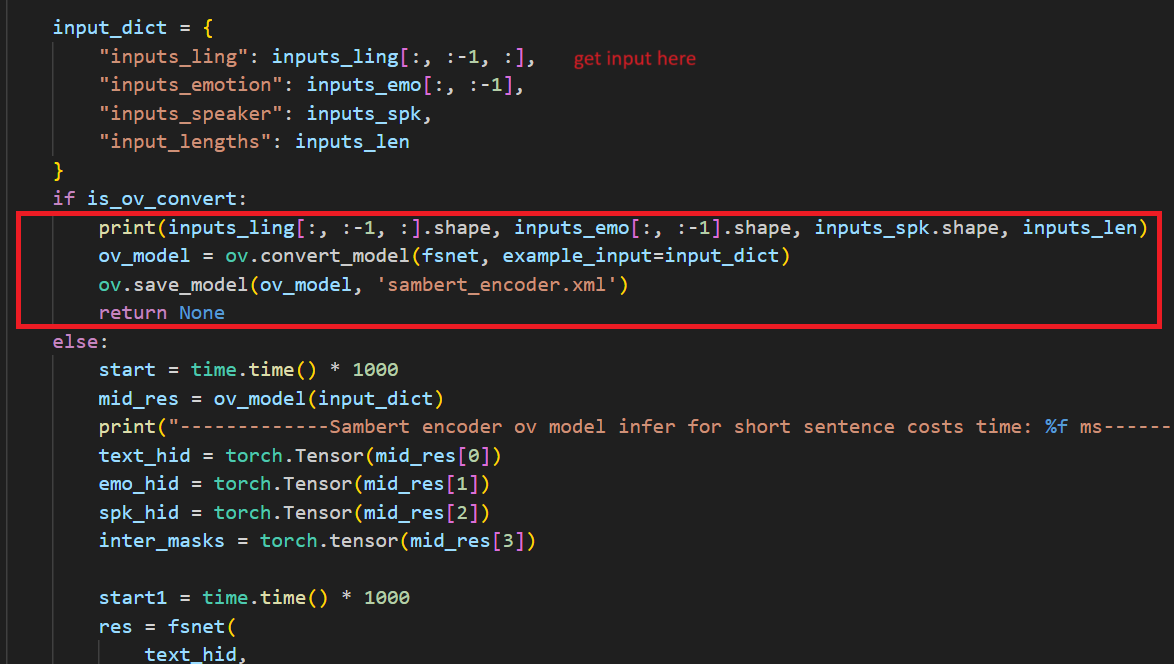

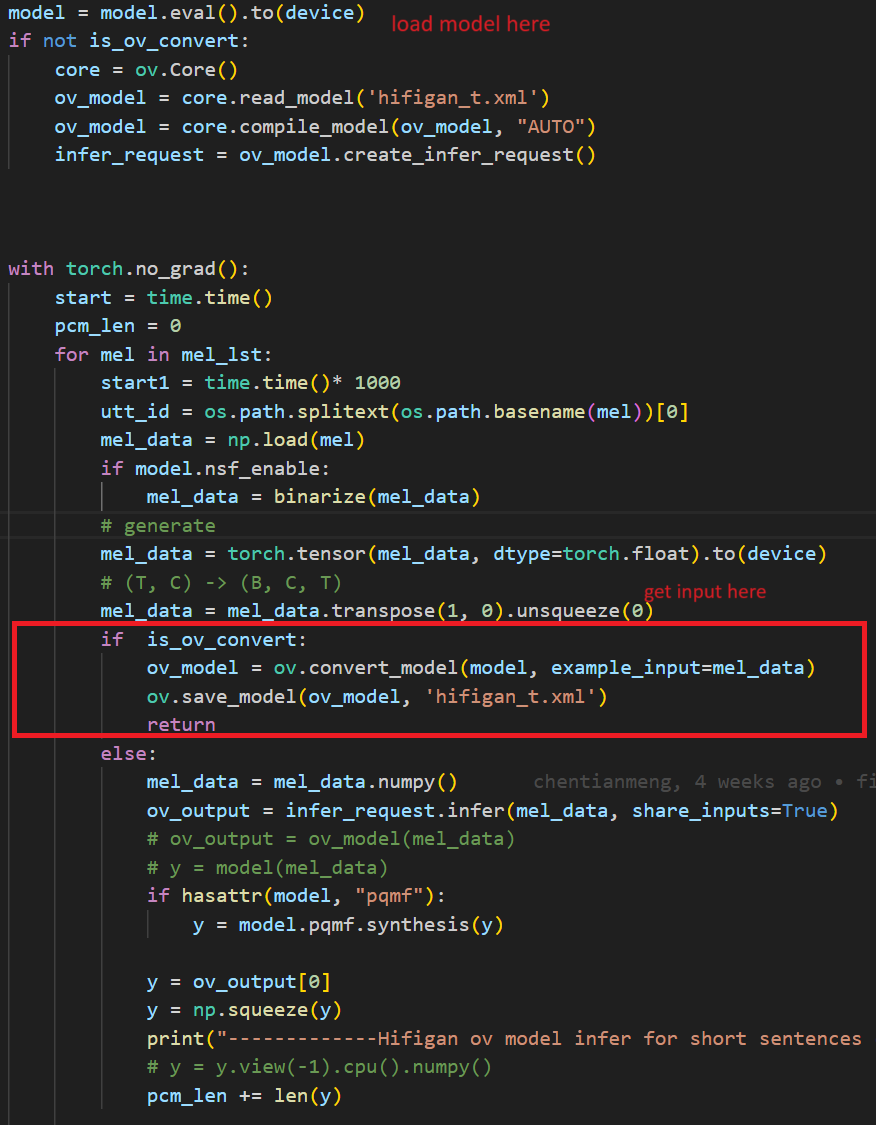

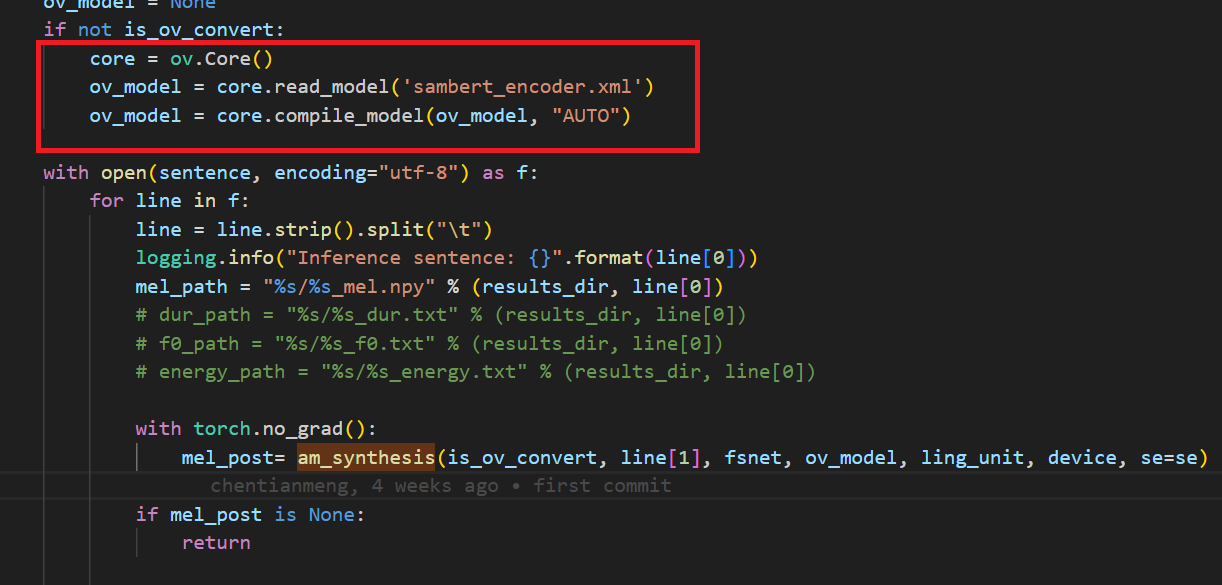

In the code, after we load the model and get the inputs, we add the following code to convert the loaded PyTorch backend model to an OpenVINO™ backend model and save it.

Run the inference with OpenVINO™ model

Before running the inference, the res folder should be renamed to allow for comparisons later.

Then run the command below.

After a few minutes, you will get the wav file in res/test_male_ptts_syn. For example in test.txt we write a random sentence:

After running the pipeline, we get a 7-second wav file under the res folder:

In the code, we modified the original PyTorch backend inference code so that the pipeline uses the OpenVINO™ backend for inference.

Summary

This blog describes how to run the SAMBERT-HifiGAN pipeline using the OpenVINO™ Python API. Please see the source code for more details and modifications.

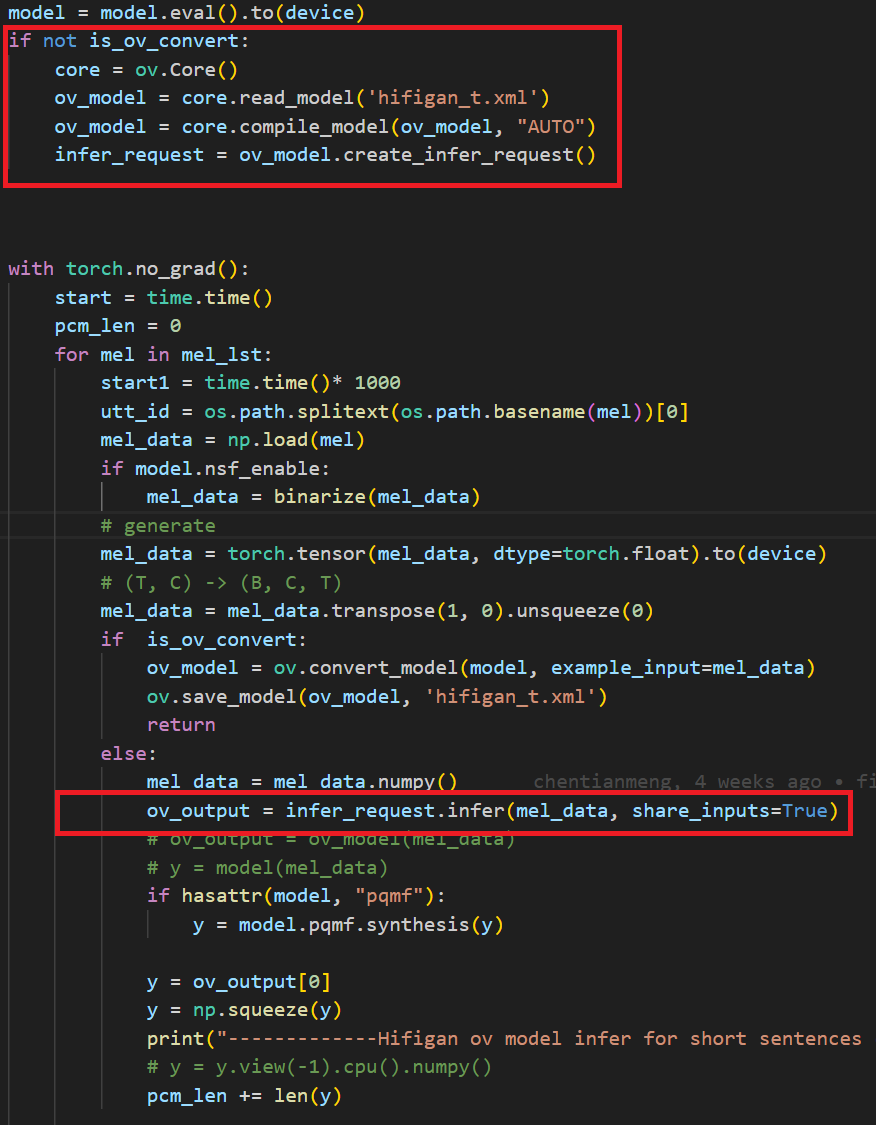

OpenVINO GenAI Serving (OGS)

Authors: Fiona Zhao, Xiake Sun, Wenyi Zou, Su Yang, Tianmeng Chen

Model Server reference implementation based on OpenVINO GenAI Package for Edge/Client AI PC Use Case.

Use Case 1: C++ RAG Sample that supports most popular models like LLaMA 2

This example showcases Retrieval-Augmented Generation based on text-generation Large Language Models (LLMs): chatglm, LLaMA, Qwen and other models with the same signature, plus a BERT model for embedding feature extraction. The sample features ov::genai::LLMPipeline and configures it for the chat scenario. There is also a Jupyter notebook which provides an example of LLM-powered RAG in Python.

Download and convert the model and tokenizers

The --upgrade-strategy eager option is needed to ensure optimum-intel is upgraded to the latest version.

Setup of PostgreSQL, Libpqxx and Pgvector

LangChain's Document Loader and Splitter

- Load: document_loaders is used to load document data.

- Split: text_splitter breaks large Documents into smaller chunks. This is useful both for indexing data and for passing it in to a model, since large chunks are harder to search over and won’t fit in a model’s finite context window.
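The splitting step can be illustrated with a small character-based splitter. This is a simplified stand-in for the idea rather than LangChain's text_splitter; the function name and default sizes are hypothetical.

```python
def split_text(text, chunk_size=200, overlap=20):
    """Split text into chunks of at most `chunk_size` characters.

    Consecutive chunks share `overlap` characters, so text near a chunk
    boundary appears in both neighboring chunks.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Each chunk is then embedded and stored, so the chunk size directly bounds how much text one retrieved hit adds to the model's context.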

PostgreSQL

Download PostgreSQL from enterprisedb (postgresql-16.2-1-windows-x64.exe is tested).

Install PostgreSQL with postgresqltutorial.

Setup of PostgreSQL:

1. Open pgAdmin 4 from Windows Search Bar.

2. Click Browser (left side) > Servers > PostgreSQL 10.

3. Create the user postgres with password openvino (or your own setting)

4. Open SQL Shell from Windows Search Bar to check this setup. 'Enter' to set Server, Database, Port, Username as default and type Password.

libpqxx

'Official' C++ client library (language binding), built on top of C library

Update the source code from https://github.com/jtv/libpqxx in deps\libpqxx

The pipeline connects with DB based on Libpqxx.

pgvector

Open-source vector similarity search for Postgres.

By default, pgvector performs exact nearest neighbor search, which provides perfect recall. It also supports approximate nearest neighbor search (HNSW), which trades some recall for speed.

For Windows, ensure that C++ support in Visual Studio 2022 is installed, then use nmake to build in the Command Prompt for VS 2022 (run as Administrator). Please follow the pgvector instructions.

To enable the extension (do this once in each database where you want to use it), run SQL Shell from the Windows Search Bar and execute "CREATE EXTENSION vector;".

If CREATE EXTENSION is printed, pgvector has been set up successfully.
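Once the extension is enabled, exact and approximate searches differ only in whether an index exists. The sketch below builds the relevant SQL statements as strings; the table and column names are hypothetical, and the `%s` placeholder assumes a client library that uses that parameter style.

```python
def to_vector_literal(values):
    """Format a list of numbers as a pgvector text literal such as '[0.1,0.2]'."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


def create_table_sql(table, dim):
    """DDL for a table with one embedding column of fixed dimension."""
    return (
        f"CREATE TABLE IF NOT EXISTS {table} "
        f"(id bigserial PRIMARY KEY, content text, embedding vector({dim}));"
    )


def create_hnsw_index_sql(table):
    """Optional approximate index; without it the search is exact."""
    return f"CREATE INDEX ON {table} USING hnsw (embedding vector_cosine_ops);"


def nearest_sql(table, top_k):
    """Query ordered by cosine distance (the <=> operator)."""
    return (
        f"SELECT id, content FROM {table} "
        f"ORDER BY embedding <=> %s::vector LIMIT {int(top_k)};"
    )
```

The query vector is passed as the literal produced by `to_vector_literal`, and the same query text works with or without the HNSW index.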

pgvector-cpp

pgvector support for C++ (supports libpqxx). The headers (pqxx.hpp, vector.hpp, halfvec.hpp) are copied into the local folder rag_sample\include. Our pipeline does the vector similarity search for the chunks embeddings in PostgreSQL, based on pgvector-cpp.

Install OpenVINO, VS2022 and Build this pipeline

Download the 2024.2 release from the OpenVINO™ archives. This prebuilt OpenVINO package is for the C++ OpenVINO pipeline, so there is no need to build from source. Install the latest Visual Studio 2022 Community for the C++ dependencies and LLM C++ pipeline editing.

Extract the zip file to any location and set the environment variables by dragging setupvars.bat into the Command Prompt terminal (use setupvars.ps1 for PowerShell). <INSTALL_DIR> below refers to the extraction location. Run the following commands in the Command Prompt terminal.

Notice:

- Install on Windows: Copy all the DLL files of PostgreSQL, OpenVINO, tbb and openvino-genai into the release folder. The SQL DLL files are located in the installed PostgreSQL path, such as "C:\Program Files\PostgreSQL\16\bin".

- If cmake is not available in the Command Prompt terminal, please use the Developer Command Prompt for VS 2022 instead.

- The openvino tokenizer in the third-party folder needs several minutes to build. Set the -j option to 8 to specify the number of parallel jobs.

- Once cmake finishes, check for rag_sample_client.exe and rag_sample_server.exe in the relative path .\build\samples\cpp\rag_sample\Release.

- If cmake completed without errors but the exe files cannot be found, please open .\build\OpenVINOGenAI.sln in VS2022, set the solution configuration to Release instead of Debug, then build the llm project within VS2022 again.

Run

Launch RAG Server

rag_sample_server.exe --llm_model_path TinyLlama-1.1B-Chat-v1.0 --llm_device CPU --embedding_model_path bge-large-zh-v1.5 --embedding_device CPU --db_connection "user=postgres host=localhost password=openvino port=5432 dbname=postgres"
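When the server is started from a script, the command line above can be assembled from structured settings. The helper below is a hypothetical convenience sketch; it uses only the flags shown in the command above and does not assume any others.

```python
def server_command(llm_model, embedding_model, db, llm_device="CPU",
                   embedding_device="CPU", exe="rag_sample_server.exe"):
    """Build the argv list for the RAG server from a dict of DB settings."""
    # Connection string: space-separated key=value pairs, as in the command above.
    connection = " ".join(f"{key}={value}" for key, value in db.items())
    return [
        exe,
        "--llm_model_path", llm_model,
        "--llm_device", llm_device,
        "--embedding_model_path", embedding_model,
        "--embedding_device", embedding_device,
        "--db_connection", connection,
    ]
```

Passing the list to `subprocess.run` avoids manual quoting of the connection string, which contains spaces.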

Launch RAG Client

rag_sample_client.exe

Launch python Client

Use the python client to send the DB init message and to send the document chunks to the DB for embedding and storage.

python client_get_chunks_embeddings.py --docs test_document_README.md

Creating AI Pipeline for Cell Image Analysis: Insights, Challenges, and CHO Use Case (Part 1 of 2, Intel Edge AI in the Realm of Biopharma and Drug Development)

In the ever-evolving landscape of biopharmaceutical technology and drug development, a recent effort in the field of Cell Analytics for Monoclonal Antibody Production has shed light on the crucial role of Edge AI Technology in navigating complex challenges of scaling and producing solutions.

In this 2-part blog series, we will explore the use of Intel Edge AI Technology in biopharma and drug development, addressing challenges and providing insights into the development of AI pipelines for cell segmentation and analysis.

Intel has been involved in this process with a variety of partners. One of Intel’s contributions to the cell image project centers around processing brightfield images using an AI pipeline containing multiple deep learning models. The pipeline's purpose is to identify cells and other biological components and provide feedback on dynamic biological characteristics such as cell morphology, viability, and phenotypic changes, among others. Throughout this process, working on cell-AI projects usually brings a unique set of challenges to the forefront.

First, it is an interdisciplinary field, and the knowledge gap between data scientists and biopharma experts calls for frequent, clear back-and-forth communication for planning and validity checks. When implementing AI solutions in the laboratory, data scientists and bench scientists frequently struggle to fully grasp the nature and needs of each other’s role. This lack of mutual understanding can also hinder the usability and scalability of an AI solution that needs to be integrated into diverse lab environments.

The second challenge is instrument variability. Different plate reader [2] microscopes have different hardware, optics, and apertures, so the images they produce are not consistent. Assessing and addressing these inconsistencies along the way (for example, through regular tracked calibration and adjustment) adds an extra layer of work. Additionally, equipment vendor-to-vendor differences, culture temperature, medium conditions, and genetic modifications can all affect the variability of the data and the inherent transferability of the deep learning pipeline. This drives the need to monitor the performance of DL models at the edge and in cloud MLOps components.

The third challenge is obtaining peer-reviewed labels: the process is based on supervised machine learning, and obtaining clean, accurate labels is very costly and time-consuming.

The last challenge is model deployment. In most cases, cloud deployment is not an option due to data size and data privacy. Images produced by plate reader microscopes are huge, and transferring them to the cloud and sending the results back would create high latency because a large amount of data must be streamed (30Gb per hour). More importantly, laboratories are usually not willing to share their data. Because of these two constraints, the pipeline must be deployed at the edge.

Now, let’s talk about a specific application of this technology: the CHO Cell Segmentation Use Case.

CHO Cell Segmentation Use Case

CHO cells, or Chinese Hamster Ovary cells, are a cornerstone in the production of complex protein molecules such as monoclonal antibodies, fusion proteins, hormones, and coagulation factors. Unlike stem cells or CAR-T cells, where the cells themselves are the therapeutic product, in CHO cells, it is the proteins they produce that are of paramount importance. Monitoring the health, viability, and production capability of these cells is a critical step in commercial protein production.

Traditionally, assessing the condition of CHO cells involves a multi-step process that is not only time-consuming but also requires the use of expensive reagents and chemicals. Depending on the process, the workflow can look like the following.

- Culture cells

- Fix cells – wash in expensive reagents to remove the culture medium.

- Permeabilization – wash in more expensive chemicals to permeabilize the cell membrane (to stain for intracellular proteins).

- Blocking – incubate cells in another expensive reagent to prevent binding of non-specific antibodies.

- Primary Antibody Incubation – incubate with an antibody that binds specifically to the protein being produced.

- Washing – remove unbound primary antibodies using more expensive chemicals.

- Nuclear staining – use a nuclear stain such as DAPI to visualize cell nuclei, then wash with the same chemicals as in the washing step.

- Mounting – prepare the sample to be read in the microscope (plate reader [2]).

- Imaging – image the stained cells, count them, and determine the state in the protein production cycle and relative cell health (eventually the cells peter out and stop producing, and the batch needs to be flushed). The output is the cell count, viability number, etc., not the image.

From culturing to imaging, each step plays a vital role in ensuring the quality of the protein product. However, with the advent of AI and deep learning, there is an opportunity to streamline this workflow significantly. Using an AI pipeline that includes multiple deep learning models and data pre- and post-processing, we can go from Step 1 directly to Step 9, removing the majority of the labor and latency in getting actionable results out of a staining workflow and bypassing the need for expensive specialty chemicals. Intel has put together a reference implementation for deploying this pipeline and running inference on these images at the edge as part of the Cell Image project https://www.cellimage.ie/. OpenVINO Toolkit, OpenVINO Model Server, and AI Connect for Scientific Data are used in this design. We will briefly discuss each of these software packages in part 2 of this article series. Stay tuned!

Conclusion

In conclusion, the integration of Intel Edge AI Technology into the biopharmaceutical sector represents a transformative step towards more efficient and scalable drug development processes. As we have seen in this first installment of our blog series, the deployment of AI pipelines for cell segmentation and analysis in monoclonal antibody production is not without its challenges. These include bridging the interdisciplinary knowledge gap, managing instrument variability, acquiring peer-reviewed labels, and overcoming the hurdles associated with model deployment.

Despite these challenges, the potential benefits of Edge AI in biopharma are substantial. By leveraging Intel's advanced AI technologies, we can significantly reduce the time and cost associated with traditional cell analysis methods, while also enhancing the accuracy and reliability of the results. The use of edge computing addresses the concerns of data size and privacy, allowing for real-time processing and analysis without the need for cloud transfer.

As we move forward in this blog series, we will delve deeper into the specifics of Intel's Edge AI solutions, including the OpenVINO toolkit, OpenVINO Model Server, and AI Connect for Scientific Data. We will explore how these tools are being applied in real-world scenarios to drive innovation and improve outcomes in the realm of biopharma and drug development in the next part of this series.

Reach out to Intel's Health and Life Sciences team at health.lifesciences@intel.com or learn more about what we do at https://www.intel.com/health.

We'd like to hear from you! Let us know in the comments or discuss – which AI use cases in health and life sciences do you think will have the greatest impact on global health?

If you enjoyed hearing from the Health and Life Sciences team and want to hear more, give this post a like and ensure you subscribe to get the latest updates from the team.

About the Author

Nooshin Nabizadeh has a Ph.D. in Electrical and Computer Engineering from the University of Miami and works at Intel Corporation as an AI Solutions Architect. She enjoys photography, writing poetry, reading about psychology and philosophy, and optimizing solutions to run as fast as possible on a given piece of hardware. Connect with her on LinkedIn https://www.linkedin.com/in/nooshin-nabizadeh/ by mentioning this blog.

[1] Brightfield microscopy is a widely used technique for observing the morphology of cells and tissues.

[2] A plate reader is a laboratory instrument used to obtain images from samples in microtiter plates. The reader shines a specific calibrated frequency of light (UV, visible, fluorescence, etc.) through the samples in the wells of the plate. Plate reader microscopy data sets have inherent variability, which drives the requirement for regular tracked calibration and adjustment.

Optimizing Whisper and Distil-Whisper for Speech Recognition with OpenVINO and NNCF

Authors: Nikita Savelyev, Alexander Kozlov, Ekaterina Aidova, Maxim Proshin

Introduction

Whisper is a general-purpose speech recognition model from OpenAI. The model can transcribe speech across dozens of languages and even handle poor audio quality or excessive background noise. You can find more information about this model in the research paper, OpenAI blog, model card and GitHub repository.

Recently, a distilled variant of the model called Distil-Whisper has been proposed in the paper Robust Knowledge Distillation via Large-Scale Pseudo Labelling. Compared to Whisper, Distil-Whisper runs several times faster with 50% fewer parameters, while performing to within 1% word error rate (WER) on out-of-distribution evaluation data.

Whisper is a Transformer-based encoder-decoder model, also referred to as a sequence-to-sequence model. It maps a sequence of audio spectrogram features to a sequence of text tokens. First, the feature extractor converts the raw audio inputs to a log-Mel spectrogram. Then, the Transformer encoder encodes the spectrogram to form a sequence of encoder hidden states. Finally, the decoder autoregressively predicts text tokens, conditioned on both the previous tokens and the encoder's hidden states.

You can see the model architecture in the diagram below:

In this article, we would like to demonstrate how to improve Whisper and Distil-Whisper inference speed with OpenVINO for Intel hardware. Additionally, we show how to make models even faster by applying 8-bit Post-training Quantization with Neural Network Compression Framework (NNCF). In the end we present evaluation results from accuracy and performance standpoints on a large-scale dataset.

All code snippets presented in this article are from the Automatic speech recognition using Distil-Whisper and OpenVINO Jupyter notebook, so you can follow along.

Converting Model to OpenVINO format

We are going to load models from the Hugging Face Hub with the help of the Optimum Intel library, which makes it easier to load and run OpenVINO-optimized models. For more details, please refer to the Hugging Face Optimum documentation.

For example, the following code loads the Distil-Whisper large-v2 model ready for inference with OpenVINO.
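A minimal sketch of this loading step is given below. It assumes the Hub id `distil-whisper/distil-large-v2` and an installed `optimum[openvino]`. The helper name `load_distil_whisper` is introduced here only for illustration, and the imports are deferred into the function so the snippet can be read without the heavy dependencies installed.

```python
def load_distil_whisper(model_id="distil-whisper/distil-large-v2"):
    """Return (processor, OpenVINO model) for a Whisper-family checkpoint.

    The default model id is an assumption; any Whisper or Distil-Whisper
    checkpoint id from the Hugging Face Hub can be passed instead.
    """
    # Deferred imports: transformers and optimum-intel are only needed on call.
    from transformers import AutoProcessor
    from optimum.intel import OVModelForSpeechSeq2Seq

    processor = AutoProcessor.from_pretrained(model_id)
    # export=True converts the PyTorch checkpoint to OpenVINO IR on the fly;
    # compile=False postpones compilation until a target device is chosen.
    ov_model = OVModelForSpeechSeq2Seq.from_pretrained(
        model_id, export=True, compile=False
    )
    return processor, ov_model
```

Calling `load_distil_whisper()` downloads the checkpoint on first use, so it needs network access and several gigabytes of disk space.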

Models from the Distil-Whisper family are available at Distil-Whisper Models collection and Whisper models are available at OpenAI Hugging Face page.

To transcribe an input audio sample with the loaded model, we first compile the model for the device of choice and then call the generate() method on the input features prepared by the corresponding processor.
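The two steps described above can be sketched as a small helper. The function name `transcribe` and its parameters are illustrative assumptions. It expects a processor and model object with the interfaces of the Hugging Face processor and the Optimum Intel speech-to-text model (`to()`, `compile()`, `generate()`, `batch_decode()`).

```python
def transcribe(processor, ov_model, audio_array, sampling_rate, device="CPU"):
    """Compile the model for `device` and transcribe one audio sample."""
    # Select the device first, then compile the OpenVINO model for it.
    ov_model.to(device)
    ov_model.compile()

    # The processor turns raw audio samples into log-Mel input features.
    input_features = processor(
        audio_array, sampling_rate=sampling_rate, return_tensors="pt"
    ).input_features

    # generate() runs the encoder once and the decoder autoregressively.
    predicted_ids = ov_model.generate(input_features)

    # Decode token ids back to text; one string is returned per input sample.
    return processor.batch_decode(predicted_ids, skip_special_tokens=True)[0]
```

In practice the model only needs to be compiled once. A loop over many samples would move the `to()` and `compile()` calls outside the per-sample function.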

The output is the following. As you can see the transcription equals the reference text.

Running Post-Training Quantization with NNCF

NNCF enables post-training quantization by adding quantization layers into the model graph and then using a subset of the training dataset to initialize parameters of these additional quantization layers. During quantization, some layers (e.g., MatMuls, Convolutions) are transformed to be executed in INT8 instead of FP16/FP32. If a quantized operation is parameterized then its corresponding weight variable is also converted to INT8.
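To make the FP32-to-INT8 idea concrete, here is a toy sketch of symmetric per-tensor quantization in plain Python. This is not NNCF's implementation. NNCF chooses quantization schemes, granularity, and ranges from calibration statistics, whereas this example simply derives one scale from the largest absolute value.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: returns (int8 codes, scale)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; avoid a zero scale for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-128, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floating-point values from int8 codes."""
    return [c * scale for c in codes]


weights = [0.02, -1.27, 0.5, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
# The rounding error of each value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each value is stored in one byte instead of four, and the error introduced is at most `scale / 2` per element.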

In general, the optimization process contains the following steps:

- Create a calibration dataset for quantization.

- Run nncf.quantize() to obtain quantized encoder and decoder models.

- Serialize the INT8 models using openvino.save_model() function.

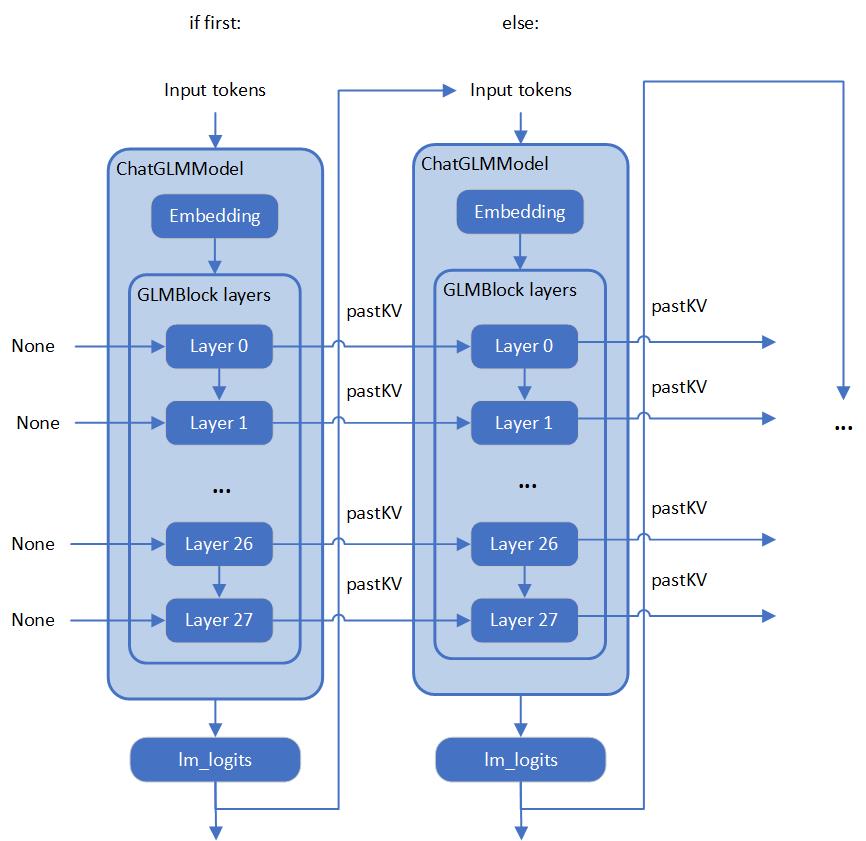

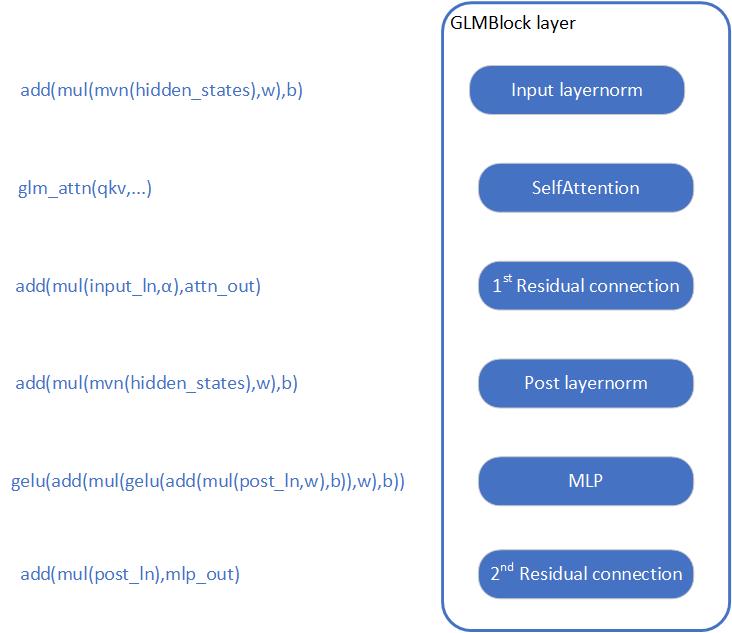

The Whisper model consists of encoder and decoder submodels. Furthermore, the forward() signature of the decoder differs between the first call and all subsequent calls. During the first call, the key-value cache is empty and is not needed for decoder inference. Starting from the second call, the key-value cache is fed to the decoder. Because of this, these two cases are represented by two separate OpenVINO models: openvino_decoder_model.xml and openvino_decoder_with_past_model.xml. Since the first decoder model is inferred only once, it does not make much sense to quantize it. So, we apply quantization to the encoder and the decoder-with-past models.

The first step towards quantization is collecting calibration data. For that, we need to collect some number of model inputs for both models. To do that, we patch the OpenVINO model request objects with an InferRequestWrapper class instance that intercepts model inputs during inference and stores them in a list. We infer the model on about 50 samples from the validation split of the librispeech_asr dataset.
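The interception pattern can be illustrated with a small stand-in written in plain Python. `RecordingRequest` and `fake_encoder_request` are hypothetical names used only for this sketch. The notebook itself uses the InferRequestWrapper class mentioned above.

```python
class RecordingRequest:
    """Hypothetical stand-in: wraps a callable request and records its inputs."""

    def __init__(self, request, collected, enabled=True):
        self.request = request      # the original inference callable
        self.collected = collected  # list that receives the intercepted inputs
        self.enabled = enabled      # allows switching collection off later

    def __call__(self, inputs):
        if self.enabled:
            # Store a shallow copy so later mutation of `inputs` is harmless.
            self.collected.append(dict(inputs))
        # Always forward to the wrapped request so inference is unchanged.
        return self.request(inputs)


def fake_encoder_request(inputs):
    """Toy 'model' used only to make the sketch runnable."""
    return {"last_hidden_state": [2 * x for x in inputs["input_features"]]}


calibration_data = []
wrapped = RecordingRequest(fake_encoder_request, calibration_data)
for sample in ([0.1, 0.2], [0.3, 0.4], [0.5, 0.6]):
    output = wrapped({"input_features": sample})
```

After the loop, `calibration_data` holds one input dictionary per inference call, which is the shape of data a calibration dataset is built from.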

With the collected calibration data for encoder and decoder models we can proceed to quantization itself. Let's examine the quantization call for the encoder model. For the decoder model, it is similar.
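A hedged sketch of the encoder quantization call follows. The function name `quantize_encoder` and its parameter names are illustrative. The notebook may pass additional advanced parameters that are not reproduced here.

```python
def quantize_encoder(ov_encoder_model, encoder_calibration_data, output_xml_path):
    """Quantize an OpenVINO encoder model to INT8 and save it to disk.

    `ov_encoder_model` is an openvino.Model; `encoder_calibration_data` is the
    list of intercepted encoder inputs collected during calibration inference.
    """
    # Deferred imports: nncf and openvino are only required when called.
    import nncf
    import openvino as ov

    quantized_model = nncf.quantize(
        ov_encoder_model,
        calibration_dataset=nncf.Dataset(encoder_calibration_data),
        # Use every collected sample for range estimation.
        subset_size=len(encoder_calibration_data),
        # Enables transformer-specific quantization handling.
        model_type=nncf.ModelType.TRANSFORMER,
    )
    # Serialize the INT8 model as OpenVINO IR (.xml plus .bin).
    ov.save_model(quantized_model, output_xml_path)
    return quantized_model
```

The same helper can be reused for the decoder-with-past model by passing that model and its own calibration list.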

After both models are quantized and saved, the quantized Whisper model can be loaded and run the same way as shown previously. Comparing the transcriptions produced by original and quantized models results in the following.

As you can see, the transcription produced by the quantized distil-whisper-large-v2 model is the same.

Evaluating on Common Voice Dataset

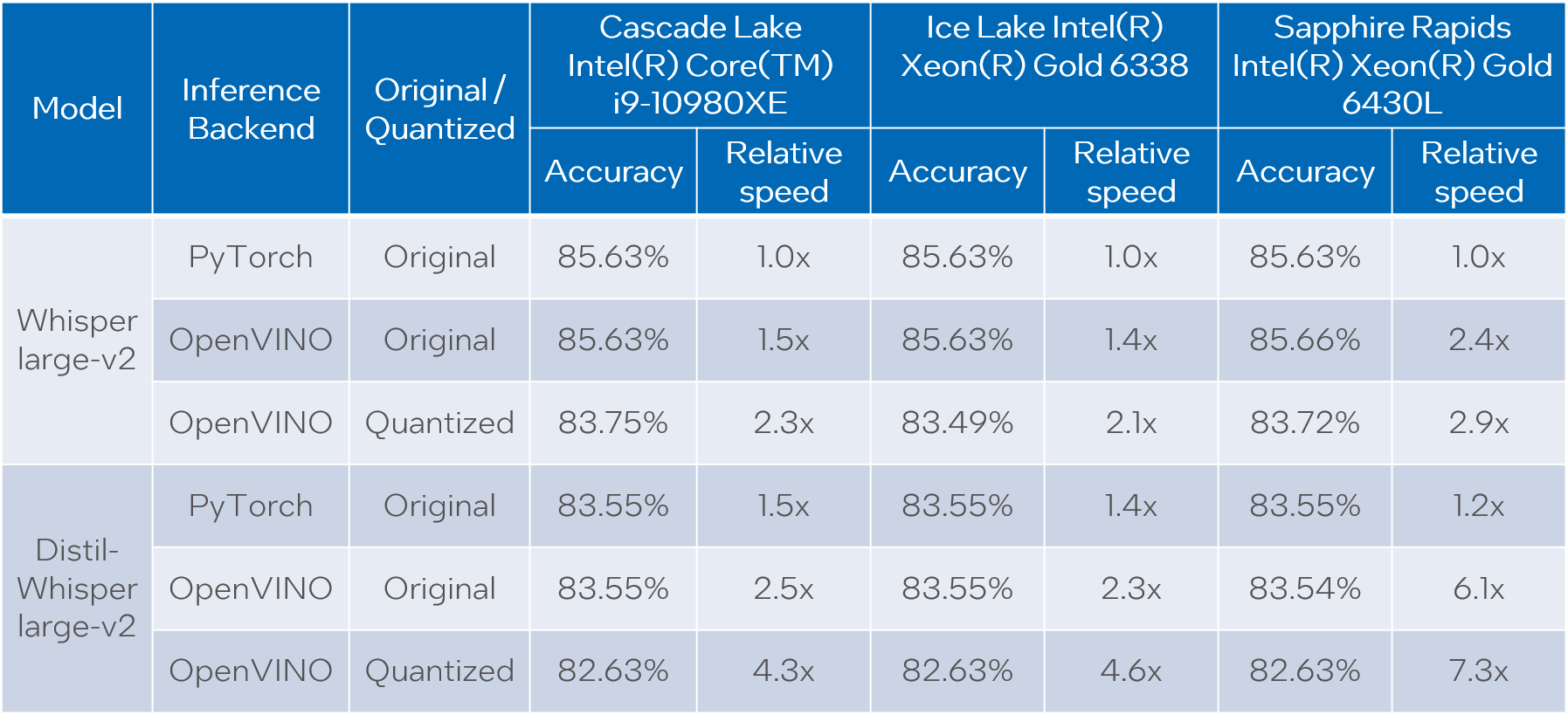

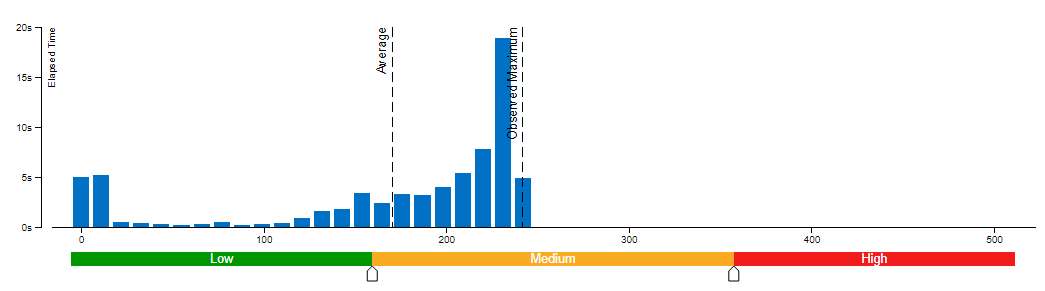

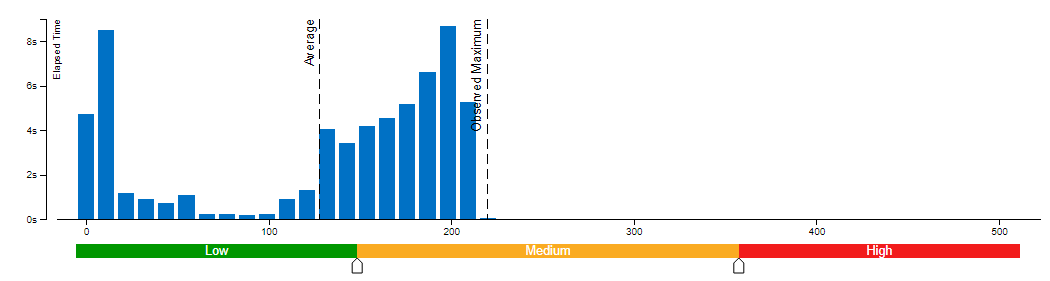

We evaluate Whisper and Distil-Whisper large-v2 model variants on the Common Voice 13.0 speech-to-text dataset. We use the en/test split, containing 16372 audio samples amounting to about 27 hours of recordings.

The evaluation is done across three model types: original PyTorch model, original OpenVINO model and quantized OpenVINO model. Additionally, we run tests on three Intel CPUs: Cascade Lake Intel(R) Core(TM) i9-10980XE, Ice Lake Intel(R) Xeon(R) Gold 6338 and Sapphire Rapids Intel(R) Xeon(R) Gold 6430L.

For all combinations above we measure transcription time and accuracy. When measuring time for a model, we sum up the generate() call durations for all audio samples. Transcription accuracy is represented as Accuracy = (100 - WER), where WER stands for Word Error Rate. We compute accuracy for each audio sample and then take the average value across the dataset. The results are given in the table below.
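The accuracy metric can be sketched in plain Python as follows. This is an illustrative implementation of word-level edit distance. The evaluation notebook may apply text normalization or use a dedicated WER library, which is not shown here.

```python
def word_error_rate(reference, hypothesis):
    """WER in percent: word-level edit distance divided by reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Row-by-row Levenshtein distance over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return 100.0 * prev[-1] / len(ref)


def mean_accuracy(pairs):
    """Average of (100 - WER) over (reference, hypothesis) pairs."""
    scores = [100.0 - word_error_rate(ref, hyp) for ref, hyp in pairs]
    return sum(scores) / len(scores)


pairs = [
    ("the cat sat on the mat", "the cat sat on the mat"),  # perfect: 100
    ("hello big world today", "hello world today"),        # 1 deletion: 75
]
accuracy = mean_accuracy(pairs)
```

Note that WER can exceed 100 when the hypothesis contains many insertions, in which case the per-sample accuracy becomes negative.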

Please note that we report transcription time in relative terms such that the values for each CPU are normalized over its corresponding column. The duration of audio data in the dataset is 27.06 hours and the absolute transcription time values for Whisper large-v2 PyTorch on each CPU are:

- 20.35 hours for Core i9-10980XE

- 14.09 hours for Xeon Gold 6338

- 15.03 hours for Xeon Gold 6430L
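As a quick worked example, the absolute numbers above can be turned into a "faster than real time" factor by dividing the audio duration by the transcription time. The helper name `real_time_speed` is introduced only for this illustration.

```python
AUDIO_HOURS = 27.06

# Absolute transcription times for Whisper large-v2 PyTorch, from the list above.
PYTORCH_HOURS = {
    "Core i9-10980XE": 20.35,
    "Xeon Gold 6338": 14.09,
    "Xeon Gold 6430L": 15.03,
}


def real_time_speed(audio_hours, transcription_hours):
    """How many hours of audio are transcribed per hour of compute."""
    return audio_hours / transcription_hours


speeds = {
    cpu: round(real_time_speed(AUDIO_HOURS, hours), 2)
    for cpu, hours in PYTORCH_HOURS.items()
}
```

A value above 1.0 means the baseline already transcribes faster than the audio plays back, and the speedups reported for OpenVINO models multiply on top of these baseline factors.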

Based on the results we can conclude that:

- OpenVINO models execute 1.4x - 5.1x faster than PyTorch models with nearly the same accuracy across all cases.

- When compared to original PyTorch models, quantized OpenVINO models provide 2.1x - 6.1x performance boost with 1-2% accuracy drop.

NOTE: in this article we focus on presenting performance values. Accuracy of quantized models can be improved with a more careful selection of calibration data.

Notices and Disclaimers:

Performance varies by use, configuration, and other factors. Learn more at www.intel.com/PerformanceIndex. Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. No product or component can be absolutely secure. Intel technologies may require enabled hardware, software or service activation.

The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Test Configuration: Intel® Core™ i9-10980XE CPU Processor at 3.00GHz with DDR4 128 GB at 3000MHz, OS: Ubuntu 20.04.3 LTS; Intel® Xeon® Gold 6338 CPU Processor at 2.00GHz with DDR4 256 GB at 3200MHz, OS: Ubuntu 20.04.3 LTS; Intel® Xeon® Gold 6430L CPU Processor at 1.90GHz with DDR5 1024 GB at 4800MHz, OS: Ubuntu 20.04.6 LTS. Testing was performed using distil-whisper-asr notebook for model export and whisper evaluation notebook for model evaluation.

The test was conducted by Intel in December 2023.

Conclusion

We demonstrated how to load and run Whisper and Distil-Whisper models for the audio transcription task with OpenVINO and Optimum Intel, and how to perform INT8 post-training quantization of these models with NNCF. Further, we evaluated these models on a large-scale speech-to-text dataset across multiple CPU devices. The evaluation results show a significant performance boost of OpenVINO over PyTorch models without loss of transcription quality, and an even larger boost with a tolerable accuracy drop when we apply INT8 quantization.

OpenVINO Latent Consistency Model C++ pipeline with LoRA model support

Introduction

Latent Consistency Models (LCMs) are the next generation of generative models after Latent Diffusion Models (LDMs). While LDMs like Stable Diffusion are capable of achieving outstanding generation quality, they often suffer from the slowness of the iterative image denoising process. LCM is an optimized version of LDM. Inspired by Consistency Models (CM), LCMs enable swift inference with minimal steps on any pre-trained LDM, including Stable Diffusion. Consistency Models are a new family of generative models that enable one-step or few-step generation. More details about the proposed approach and models can be found using the following resources: project page, paper, original repository.

This article will demonstrate a C++ application of the LCM model with Intel’s OpenVINO™ C++ API on Linux systems. For model inference performance and accuracy, the C++ pipeline is well aligned with the Python implementation.

The full implementation of the LCM C++ demo described in this post is available on GitHub: openvino.genai/lcm_dreamshaper_v7.

Model Conversion

To leverage efficient inference with OpenVINO™ runtime on Intel platforms, the original model should be converted to OpenVINO™ Intermediate Representation (IR).

LCM model

Optimum Intel can be used to load SimianLuo/LCM_Dreamshaper_v7 model from Hugging Face Hub and convert PyTorch checkpoint to the OpenVINO™ IR on-the-fly, by setting export=True when loading the model, like:
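A minimal sketch of this export is shown below. The helper name `export_lcm_to_openvino` and the `output_dir` parameter are illustrative assumptions, and the import is deferred so the snippet can be read without `optimum[openvino]` installed.

```python
def export_lcm_to_openvino(output_dir, model_id="SimianLuo/LCM_Dreamshaper_v7"):
    """Convert the LCM checkpoint to OpenVINO IR and save it to `output_dir`."""
    # Deferred import: optimum-intel is only needed when the function is called.
    from optimum.intel import OVLatentConsistencyModelPipeline

    # export=True converts the PyTorch checkpoint to OpenVINO IR on the fly.
    pipeline = OVLatentConsistencyModelPipeline.from_pretrained(
        model_id, export=True
    )
    # Persist the converted submodels so later runs can skip the conversion.
    pipeline.save_pretrained(output_dir)
    return pipeline
```

The first call downloads the checkpoint from the Hugging Face Hub, so it requires network access.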

Tokenizer

OpenVINO Tokenizers is an extension that adds text processing operations to OpenVINO Inference Engine. In addition, the OpenVINO Tokenizers project has a tool to convert a HuggingFace tokenizer into OpenVINO IR model tokenizer and detokenizer: it provides the convert_tokenizer function that accepts a tokenizer Python object and returns an OpenVINO Model object:
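The conversion described above can be sketched as follows. The helper name `convert_hf_tokenizer` and its parameters are illustrative. The tokenizer id passed in is whatever Hugging Face tokenizer matches the model being converted.

```python
def convert_hf_tokenizer(hf_tokenizer_id, output_xml_path):
    """Convert a Hugging Face tokenizer to an OpenVINO model and save it."""
    # Deferred imports: these packages are only required when called.
    from transformers import AutoTokenizer
    from openvino_tokenizers import convert_tokenizer
    import openvino as ov

    hf_tokenizer = AutoTokenizer.from_pretrained(hf_tokenizer_id)
    # convert_tokenizer takes the tokenizer Python object and returns an
    # OpenVINO Model that performs tokenization.
    ov_tokenizer = convert_tokenizer(hf_tokenizer)
    # Serialize the tokenizer as OpenVINO IR next to the other models.
    ov.save_model(ov_tokenizer, output_xml_path)
    return ov_tokenizer
```

If a detokenizer is also needed, `convert_tokenizer` can be asked for one with `with_detokenizer=True`, in which case it returns a pair of models.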

Note: Currently OpenVINO Tokenizers can be inferred on CPU devices only.

Conversion step

You can find the full script for model conversion at the original repo.

Note: The tutorial assumes that the current working directory is <openvino.genai repo>/image_generation/lcm_dreamshaper_v7/cpp and all paths are relative to this folder.

Let’s prepare a Python environment and install dependencies:

Now we can use the script scripts/convert_model.py to download and convert models:

C++ Pipeline

Pipeline flow

Let’s now talk about the logical structure of the LCM model pipeline.

Just like the classic Stable Diffusion pipeline, the LCM pipeline consists of three important parts:

- A text encoder to create a condition to generate an image from a text prompt.

- U-Net for step-by-step denoising the latent image representation.

- Autoencoder (VAE) for decoding the latent space to an image.

The pipeline takes a latent image representation and a text prompt transformed to a text embedding via CLIP’s text encoder as input. The initial latent image representation is generated using a random noise generator. LCM uses the guidance scale to obtain time step conditional embeddings as input for the diffusion process, while in Stable Diffusion it is used for scaling the output latents.

Next, the U-Net iteratively denoises the random latent image representations while being conditioned on the text embeddings. The output of the U-Net, being the noise residual, is used to compute a denoised latent image representation via a scheduler algorithm. LCM introduces its own scheduling algorithm that extends the denoising procedure introduced by denoising diffusion probabilistic models (DDPMs) with non-Markovian guidance. The denoising process is repeated a given number of times to retrieve better latent image representations step by step. When complete, the latent image representation is decoded by the decoder part of the variational autoencoder.
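The control flow described above can be sketched with toy stand-in components. Everything below is illustrative. The `toy_*` functions are placeholders for the real text encoder, U-Net, scheduler, and VAE decoder, and the guidance-scale embedding and the actual LCM scheduler math are intentionally omitted.

```python
import random


def run_pipeline(prompt, text_encoder, unet, scheduler_step, vae_decode,
                 num_steps=4, latent_size=4, seed=42):
    """Skeleton of the text-to-image loop: encode, denoise N times, decode."""
    rng = random.Random(seed)
    text_embedding = text_encoder(prompt)
    # The initial latent representation is pure random noise.
    latents = [rng.gauss(0.0, 1.0) for _ in range(latent_size)]
    for step in range(num_steps):
        # The U-Net predicts the noise residual, conditioned on the text.
        noise_pred = unet(latents, step, text_embedding)
        # The scheduler turns the residual into a less noisy latent.
        latents = scheduler_step(noise_pred, step, latents)
    # The VAE decoder maps the final latent to image space.
    return vae_decode(latents)


# Toy stand-ins so the skeleton is runnable; they do not model real networks.
def toy_text_encoder(prompt):
    return [float(len(prompt))]


def toy_unet(latents, step, text_embedding):
    return [0.5 * x for x in latents]


def toy_scheduler_step(noise_pred, step, latents):
    return [x - n for x, n in zip(latents, noise_pred)]


def toy_vae_decode(latents):
    return [round(x, 6) for x in latents]


image = run_pipeline("a beautiful pink unicorn", toy_text_encoder,
                     toy_unet, toy_scheduler_step, toy_vae_decode)
```

Because the random generator is seeded, repeated runs give the same result. This is the same property that matters when aligning the C++ and Python pipelines.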

The C++ implementations of the scheduler algorithm and LCM pipeline are available at the following links: LCM Scheduler, LCM Pipeline.

LoRA support

LoRA (Low-Rank Adaptation) is a training technique for fine-tuning Stable Diffusion models. There are various LoRA models available on https://civitai.com/tag/lora.

The main idea for enabling LoRA weights is to append them onto the OpenVINO LCM models at runtime, before compiling the Unet/text_encoder model. The method is to extract the LoRA weights from the safetensors file, find the corresponding weights in the Unet/text_encoder model, and insert the LoRA bias weights. The common approach to add LoRA weights looks like:
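As a hedged illustration of the usual LoRA arithmetic, the sketch below adds a scaled low-rank update to a weight matrix, W' = W + alpha * (up x down), in plain Python. The function names and the `alpha` default are assumptions made for this example. Some LoRA formats additionally scale by the rank, and the C++ demo performs the equivalent update on OpenVINO constants.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (m x k) and b (k x n)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_lora(weight, lora_up, lora_down, alpha=0.75):
    """Return weight + alpha * (lora_up @ lora_down) without mutating inputs."""
    delta = matmul(lora_up, lora_down)
    return [
        [w + alpha * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


weight = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0]]       # 2 x 3 base weight
lora_up = [[1.0], [2.0]]         # 2 x 1 (rank 1)
lora_down = [[0.0, 4.0, 8.0]]    # 1 x 3
merged = merge_lora(weight, lora_up, lora_down, alpha=0.5)
```

The low-rank factors hold far fewer values than the full matrix, which is why LoRA files are small compared with the base model.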

The original LoRA safetensor model is loaded via safetensors.h. The layer name and weight of LoRA are modified with Eigen Lib and inserted into Unet/text_encoder OpenVINO model using ov::pass::MatcherPass - you can see the implementation in the file common/diffusers/src/lora.cpp.

To run the LCM demo with the LoRA model, first download LoRA, for example: LoRa/Soulcard.

Build and Run LCM demo

Let’s start with the dependencies installation:

Now we can build the application:

And finally we’re ready to run the LCM demo. By default the positive prompt is set to: “a beautiful pink unicorn”.

Please note that the quality of the resulting image depends on the quality of the random noise generator, so the output images generated with the C++ noise generator differ from those generated with the PyTorch generator. Use option -r to read the PyTorch-generated noise from the provided text files for alignment with the Python pipeline.

Note: Run ./lcm_dreamshaper -h to see all the available demo options

Let’s try to run the application in a few modes:

Read the numpy latent input and noise for the scheduler instead of using the C++ std lib, for alignment with the Python pipeline: ./lcm_dreamshaper -r

Generate an image with C++ std lib generated latent and noise: ./lcm_dreamshaper

Generate image with Soulcard LoRa and C++ generated latent and noise: ./lcm_dreamshaper -r -l path/to/soulcard.safetensors

See Also

- Optimizing Latent Consistency Model for Image Generation with OpenVINO™ and NNCF

- Image generation with Latent Consistency Model and OpenVINO

- C++ Pipeline for Stable Diffusion v1.5 with Pybind for Lora Enabling

- Enable LoRA weights with Stable Diffusion Controlnet Pipeline

C++ Pipeline for Stable Diffusion v1.5 with Pybind for Lora Enabling

Authors: Fiona Zhao, Xiake Sun, Su Yang

The purpose of this pipeline is to demonstrate the use of the native OpenVINO C++ API.

In terms of model inference performance and accuracy, the C++ and Python pipelines are well aligned.

Source code github: OV_SD_CPP.

Step 1: Prepare Environment

Setup in Linux:

C++ pipeline loads the Lora safetensors via Pybind

C++ Dependencies:

- OpenVINO: Tested with OpenVINO 2023.1.0.dev20230811 pre-release

- Boost: Install with sudo apt-get install libboost-all-dev for LMSDiscreteScheduler's integration

- OpenCV: Install with sudo apt install libopencv-dev for image saving

Notice:

The SD preparation in the two steps above can be automated with build_dependencies.sh in the scripts directory.

Step 2: Prepare SD model and Tokenizer Model

- SD v1.5 model:

Refer to this link to generate the SD v1.5 model, and reshape it to (1,3,512,512) for best performance.

With the downloaded models, the conversion from PyTorch model to OpenVINO IR can be done with the script convert_model.py in the scripts directory.

For enabling LoRA with safetensors, refer to this blog.

SD model dreamlike-anime-1.0 and Lora soulcard are tested in this pipeline.

- Tokenizer model:

- The script convert_sd_tokenizer.py in the scripts directory can serialize the tokenizer model IR

- Build OpenVINO extension:

Refer to PR OpenVINO custom extension (a new feature that is still experimental)

- Read the model with the extension in the SD pipeline

Step 3: Build Pipeline

Step 4: Run Pipeline

Usage: OV_SD_CPP [OPTION...]

- -p, --posPrompt arg Initial positive prompt for SD (default: cyberpunk cityscape like Tokyo New York with tall buildings at dusk golden hour cinematic lighting)

- -n, --negPrompt arg Default negative prompt is empty with space (default: )

- -d, --device arg AUTO, CPU, or GPU (default: CPU)

- -s, --seed arg Number of random seed to generate latent (default: 42)

- --height arg height of output image (default: 512)

- --width arg width of output image (default: 512)

- --log arg Generate logging into log.txt for debug

- -c, --useCache Use model caching

- -e, --useOVExtension Use OpenVINO extension for tokenizer

- -r, --readNPLatent Read numpy generated latents from file

- -m, --modelPath arg Specify path of SD model IR (default: /YOUR_PATH/SD_ctrlnet/dreamlike-anime-1.0)

- -t, --type arg Specify precision of SD model IR (default: FP16_static)

- -l, --loraPath arg Specify path of lora file. (*.safetensors). (default: /YOUR_PATH/soulcard.safetensors)

- -a, --alpha arg alpha for lora (default: 0.75)

- -h, --help Print usage

Example:

Positive prompt: cyberpunk cityscape like Tokyo New York with tall buildings at dusk golden hour cinematic lighting.

Negative prompt: (empty; the OV tokenizer cannot be used in this case, check the issues for details).

Read the numpy latent instead of C++ std lib for the alignment with Python pipeline.

- Generate image without lora

- Generate image with Soulcard Lora

- Generate the debug logging into log.txt

Benchmark:

The performance and image quality of C++ pipeline are aligned with Python.

To make the performance comparable with the Python SD pipeline, the C++ pipeline prints only the duration of each model's inference.

For the diffusion part, the duration is for all the steps of Unet inferencing, which is the bottleneck.

For the generation quality, be careful with the negative prompt and random latent generation.

Limitation:

- Pipeline features:

- Program optimization: currently, parallel optimization uses std::for_each only, together with add_compile_options(-O3 -march=native -Wall) in CMake

- The pipeline with the INT8 model IR does not improve performance

- Lora enabling is supported only for FP16

- Random generation fails to align: C++ random with MT19937 produces results that differ from numpy.random.randn(). Hence, please use -r, --readNPLatent for alignment with Python

- The OV extension tokenizer cannot recognize special characters such as “.”, ”,”, “”, etc. When writing a prompt, use spaces to split words. It also cannot accept an empty negative prompt, so use the default tokenizer (without the -e, --useOVExtension option) when the negative prompt is empty

Setup in Windows 10 with VS2019:

1. Python env: Setup Conda env SD-CPP with the anaconda prompt terminal

2. C++ dependencies:

- OpenVINO and OpenCV:

Download and set up the environment variables: add the paths of bin and lib (System Properties -> Environment Variables -> System variables -> Path)

- Boost:

- Download from sourceforge

- Unzip

- Setup: bootstrap.bat

- Build: b2.exe

- Install: b2.exe install

With Boost installed in the path C:/Boost, add SET(BOOST_ROOT "C:/Boost") to CMakeLists.txt

3. Setup of conda env SD-CPP and Setup OpenVINO with setupvars.bat

4. CMake with build.bat like:

5. Setup of Visual Studio with release and x64, and build: open .sln file in the build Dir

6. Run the SD_generate.exe

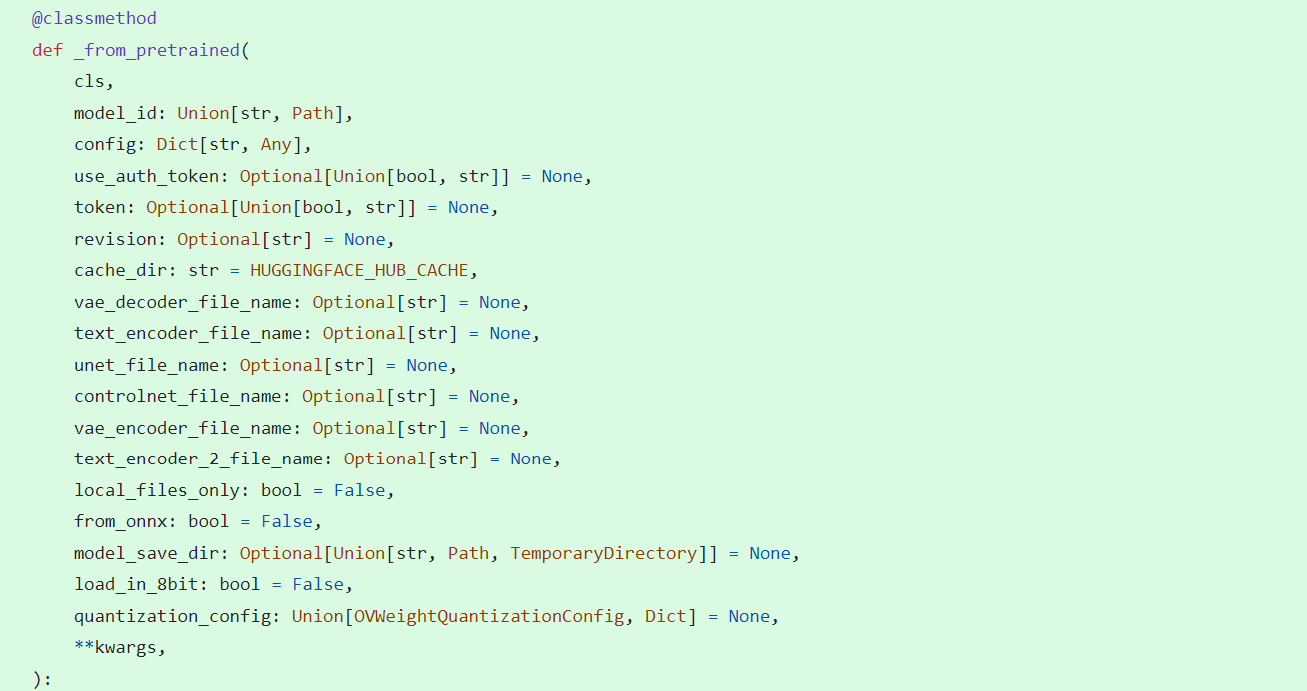

Enable Textual Inversion with Stable Diffusion Pipeline via Optimum-Intel

Introduction

Stable Diffusion (SD) is a state-of-the-art latent text-to-image diffusion model that generates photorealistic images from text. Recently, many fine-tuning techniques, such as Textual Inversion and Low-Rank Adaptation (LoRA), have been proposed to create custom Stable Diffusion pipelines for personalized image generation. We’ve already published a blog on enabling LoRA with the Stable Diffusion + ControlNet pipeline.

In this blog, we will focus on enabling pre-trained textual inversion with Stable Diffusion via Optimum-Intel. The feature is available in the latest Optimum-Intel, and documentation is available here.

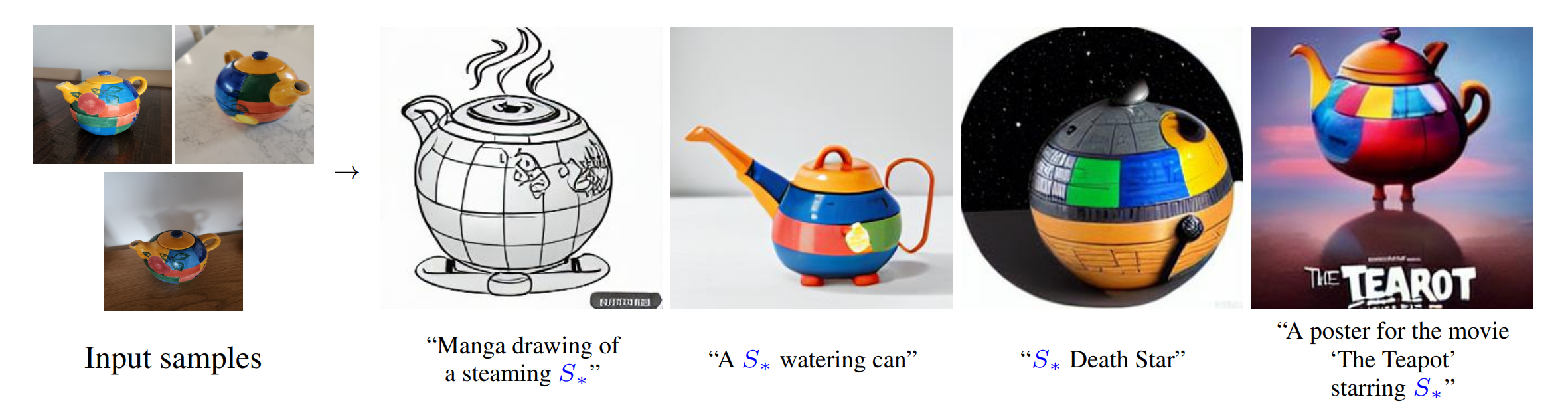

Textual Inversion is a technique for capturing novel concepts from a small number of example images in a way that can later be used to control text-to-image pipelines. It does so by learning new “words” in the embedding space of the pipeline’s text encoder.

As Figure 1 shows, you can teach new concepts to a model such as Stable Diffusion for personalized image generation using just 3-5 images.

Hugging Face Diffusers and Stable Diffusion Web UI provide useful tools and guides to train and save custom textual inversion embeddings. Pre-trained textual inversion embeddings are widely available in sd-concepts-library and civitai, and can be loaded for inference with the StableDiffusionPipeline using PyTorch as the runtime backend.

Here is an example that loads the pre-trained textual inversion embedding sd-concepts-library/cat-toy for inference with the PyTorch backend.

Optimum-Intel provides the interface between the Hugging Face Transformers and Diffusers libraries and the OpenVINO™ runtime, accelerating end-to-end pipelines on Intel architectures.

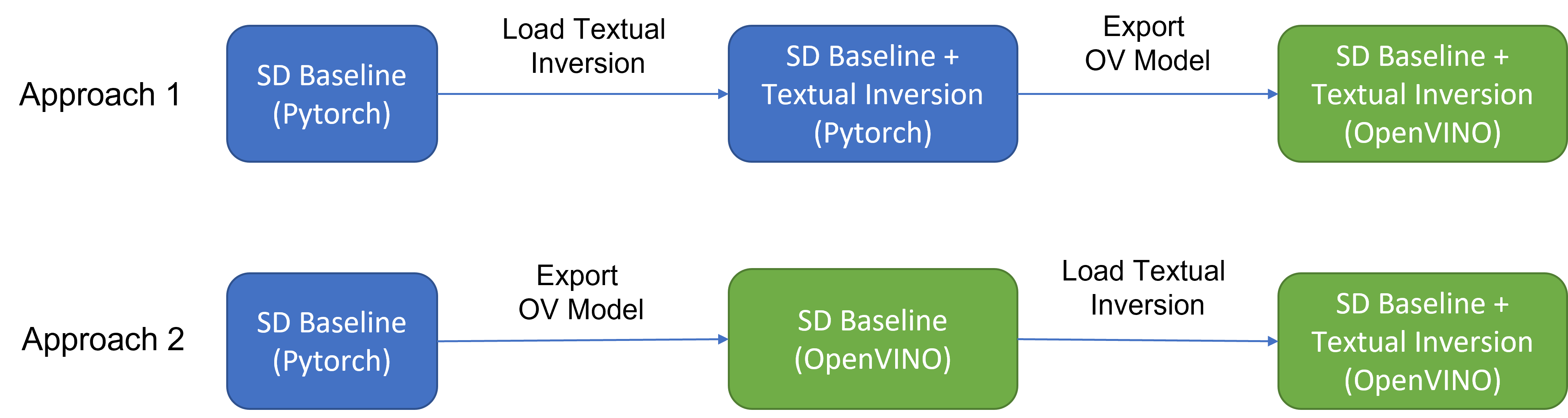

As Figure 2 shows, two approaches are available to enable textual inversion with Stable Diffusion via Optimum-Intel.

Although approach 1 seems quite straightforward and does not need any code modification in Optimum-Intel, it requires re-exporting the ONNX model and then converting it to the OpenVINO™ IR model whenever the SD baseline model is merged with a new textual inversion.

Instead, we propose approach 2: supporting OVStableDiffusionPipelineBase in loading pre-trained textual inversion embeddings at runtime, which saves disk storage while keeping flexibility.

- Save disk storage: We only need to save one SD baseline model converted to OpenVINO™ IR (e.g.: SD-1.5 ~5GB) and multiple textual embeddings (~10KB-100KB), instead of multiple SD OpenVINO™ IR models with textual inversion embeddings merged (~n * 5GB). This matters because disk storage is limited, especially for edge/client use cases.

- Flexibility: We can quickly load (multiple) pre-trained textual inversion embeddings into the SD baseline model at runtime, which supports combining embeddings and avoids modifying the baseline model.
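The storage argument can be checked with a few lines of arithmetic. The sizes below are the approximate figures quoted above (about 5 GB per SD-1.5 IR, up to about 100 KB per embedding). The function names are introduced only for this illustration, and decimal units are assumed.

```python
def merged_storage_gb(num_concepts, baseline_gb=5.0):
    """Approach 1: one full merged model copy per textual inversion concept."""
    return num_concepts * baseline_gb


def runtime_storage_gb(num_concepts, baseline_gb=5.0, embedding_kb=100.0):
    """Approach 2: one baseline model plus one small embedding per concept."""
    kb_per_gb = 1_000_000  # decimal units: 1 GB = 1,000,000 KB
    return baseline_gb + num_concepts * embedding_kb / kb_per_gb


num_concepts = 10
merged = merged_storage_gb(num_concepts)    # ten full model copies
runtime = runtime_storage_gb(num_concepts)  # one model plus ten embeddings
```

For ten concepts, the merged approach needs about 50 GB, while runtime loading stays at roughly 5 GB because the embeddings add only about 1 MB in total.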

How to enable textual inversion in runtime?

We implemented OVTextualInversionLoaderMixin based on diffusers.loaders.TextualInversionLoaderMixin with the following features:

- Load and parse textual embeddings saved as *.bin, *.pt, *.safetensors as a list of Tensors.

- Update tokenizer for new “words” using new token id and expand vocabulary size.

- Update text encoder embeddings via InsertTextEmbedding class based on OpenVINO™ ngraph transformation.

For the implementation details of OVTextualInversionLoaderMixin, please refer to here.

Here is the sample code for InsertTextEmbedding class:

The InsertTextEmbedding class utilizes the OpenVINO™ ngraph MatcherPass function to insert a subgraph into the model. Please note that the MatcherPass function can only filter layers by type, so we run two phases of filtering to find the layer that matches the pre-defined key in the model:

- Filter all Constant layers to trigger the callback function.

- Filter layer name with pre-defined key “TEXTUAL_INVERSION_EMBEDDING_KEY” in the callback function

If the root name matches the pre-defined key, we loop over all parsed textual inversion embedding and token id pairs and create a subgraph (Constant + Unsqueeze + Concat) from OpenVINO™ operation sets to insert into the text encoder model. In the end, we update the root output node with the last node in the subgraph.

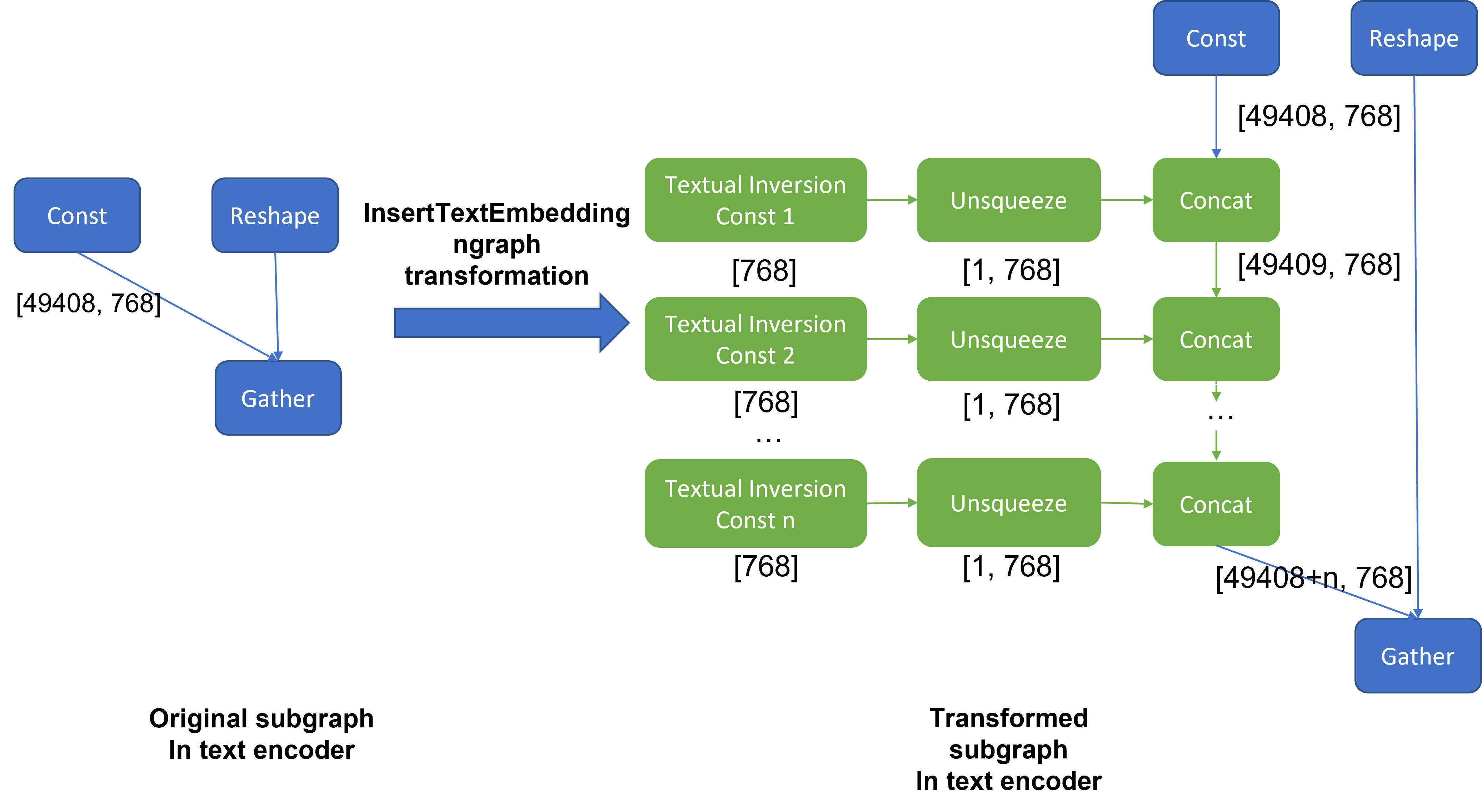

Figure 3 demonstrates the workflow of the InsertTextEmbedding OpenVINO™ ngraph transformation. The left part shows the subgraph in the SD 1.5 baseline text encoder model, where the text embedding is a Constant node with shape [49408, 768]. The first dimension is consistent with the original tokenizer (vocabulary size 49408), and the second dimension is the feature length of each text embedding.

When we load (multiple) textual inversions, all textual inversion embeddings are parsed as a list of tensors with shape [768], and each textual inversion constant is unsqueezed and concatenated with the original text embeddings. The right part is the result of applying the InsertTextEmbedding ngraph transformation to the original text encoder. The green rectangle represents the merged textual inversion subgraph.
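The shape arithmetic of the Unsqueeze + Concat step can be checked with a small sketch. It uses plain Python lists and toy sizes (vocabulary 4, feature length 3) instead of the real [49408, 768] table. The helper names `unsqueeze`, `concat_rows`, and `insert_embeddings` are illustrative assumptions, not OpenVINO operations.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def unsqueeze(vector: Vector) -> Matrix:
    """Turn a [dim] vector into a [1, dim] matrix."""
    return [list(vector)]


def concat_rows(table: Matrix, rows: Matrix) -> Matrix:
    """Concatenate along axis 0; the feature length must match."""
    if any(len(row) != len(table[0]) for row in rows):
        raise ValueError("feature length mismatch")
    return table + rows


def insert_embeddings(table: Matrix, new_embeddings: List[Vector]) -> Matrix:
    """Append each new embedding as one extra row of the embedding table."""
    for embedding in new_embeddings:
        table = concat_rows(table, unsqueeze(embedding))
    return table


# Toy embedding table with shape [4, 3]; the real one is [49408, 768].
base_table = [[0.0] * 3 for _ in range(4)]
merged = insert_embeddings(base_table, [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
print(len(merged), len(merged[0]))
```

Each loaded embedding grows the first dimension by one, which is why a single textual inversion turns [49408, 768] into [49409, 768].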

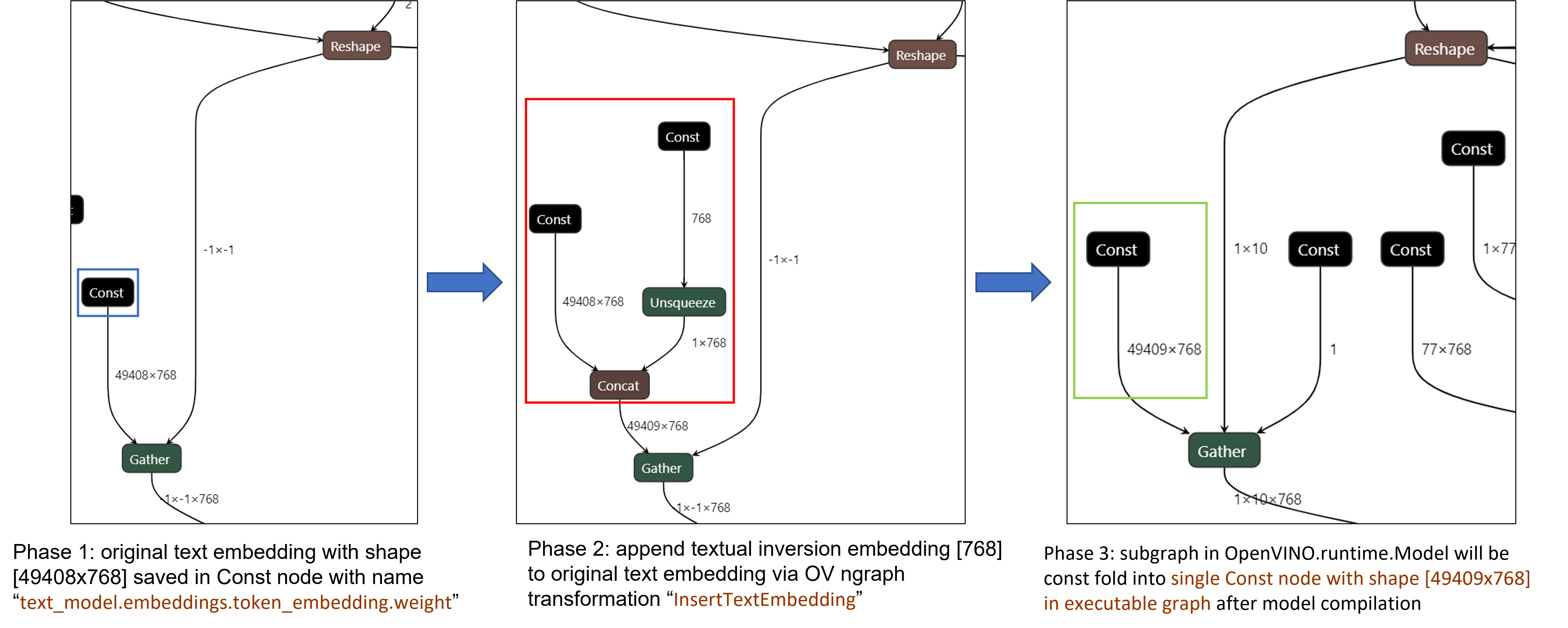

As Figure 4 shows, in the first phase, the original text embedding (marked with a blue rectangle) is saved in the Const node “text_model.embeddings.token_embedding.weight” with shape [49408, 768]. After the InsertTextEmbedding ngraph transformation, a new subgraph (marked with a red rectangle) is created in the second phase. In the third phase, during model compilation, the new subgraph is constant-folded into a single Const node (marked with a green rectangle) with the new shape [49409, 768] by the OpenVINO™ ConstantFolding transformation.

Stable Diffusion Textual Inversion Sample

Here are textual inversion examples verified with the Stable Diffusion v1.5, Stable Diffusion v2.1, and Stable Diffusion XL 1.0 Base pipelines using the latest optimum-intel.

Setup Environment

Run SD 1.5 + Cat-Toy Textual Inversion Example

Run SD 2.1 + Midjourney 2.0 Textual Inversion Example

Run SDXL 1.0 Base + CharTurnerV2 Textual Inversion Example

Conclusion

In this blog, we proposed loading textual inversion embeddings into the Stable Diffusion pipeline at runtime to save disk storage while keeping flexibility.

- Implemented OVTextualInversionLoaderMixin to update the tokenizer with additional token ids and update the text encoder with the InsertTextEmbedding OpenVINO™ ngraph transformation.

- Provided sample code to load textual inversion with SD 1.5, SD 2.1, and SDXL 1.0 Base and run inference with Optimum-Intel.

Reference

An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion

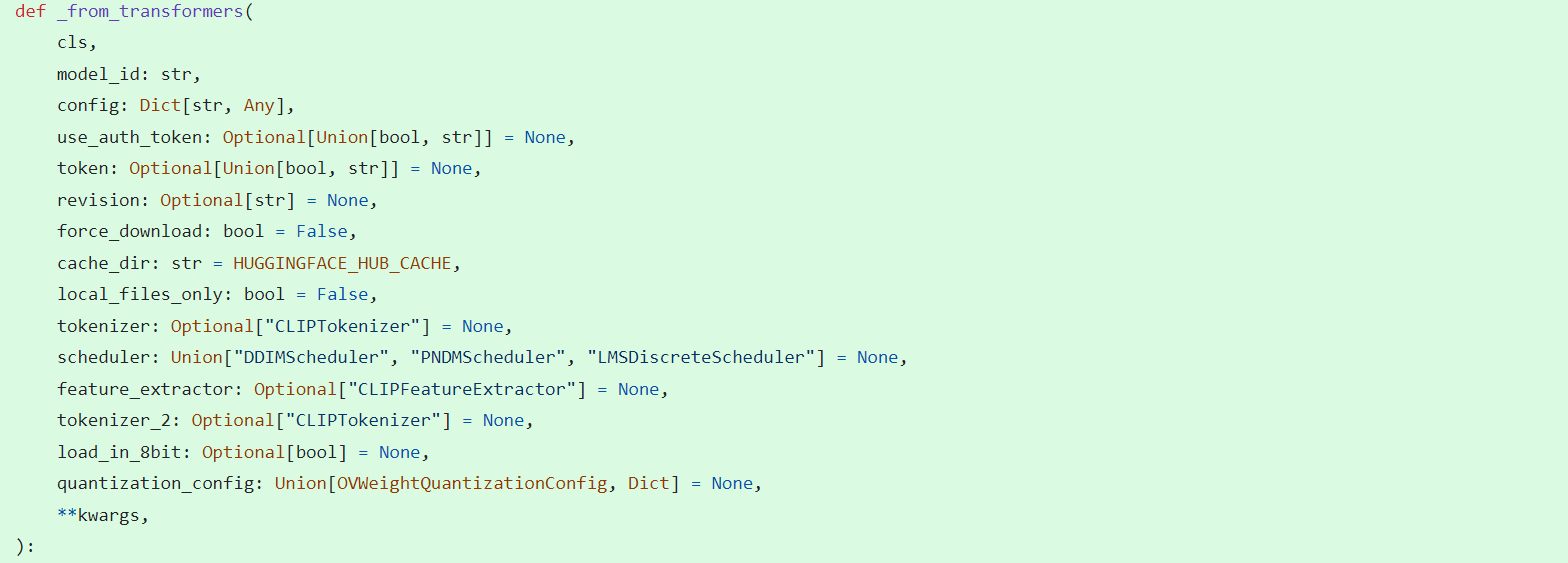

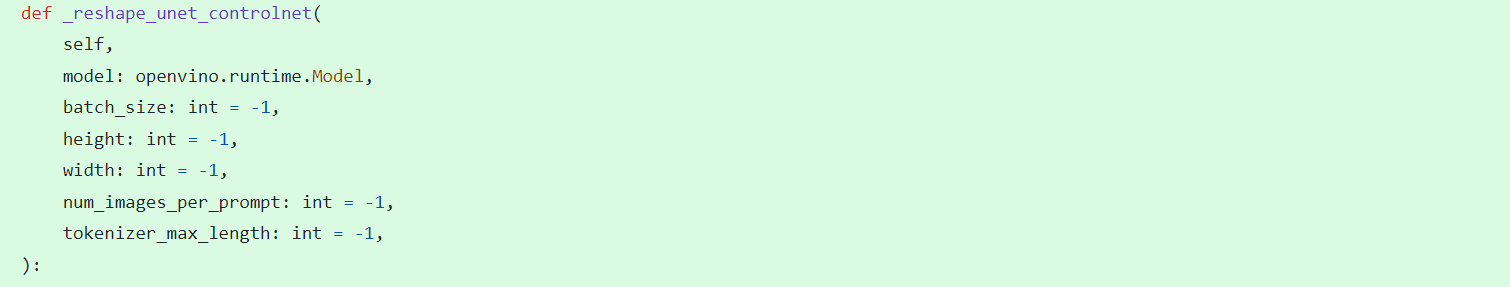

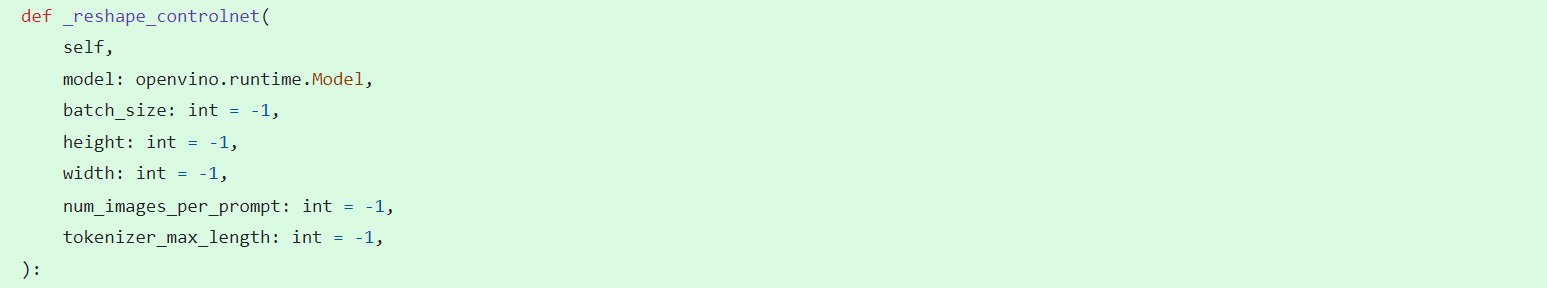

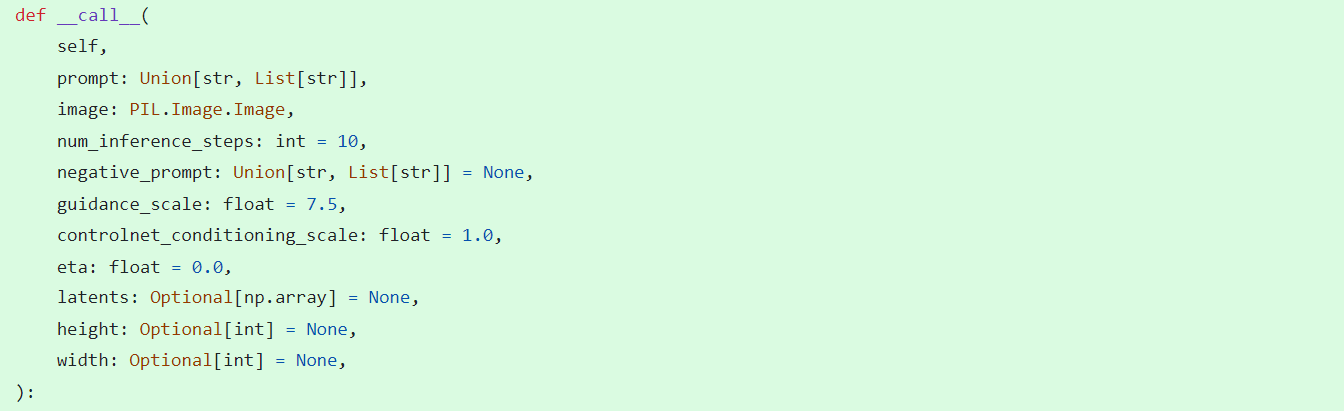

Enable LoRA weights with Stable Diffusion Controlnet Pipeline

Authors: Zhen Zhao (Fiona), Kunda Xu

Low-Rank Adaptation (LoRA) is a technique introduced to address the problem of fine-tuning Diffusers and Large Language Models (LLMs). In the case of Stable Diffusion fine-tuning, LoRA can be applied to the cross-attention layers that relate the image representations to the prompts that describe them. You can refer to HuggingFace diffusers to understand the basic concept and method for model fine-tuning: https://huggingface.co/docs/diffusers/training/lora

In this blog, we introduce how to build the pipeline for Stable Diffusion + ControlNet with OpenVINO™ optimization, and how to enable LoRA weights for the Unet model of Stable Diffusion to generate images with different styles. The demo source code is based on: https://github.com/FionaZZ92/OpenVINO_sample/tree/master/SD_controlnet

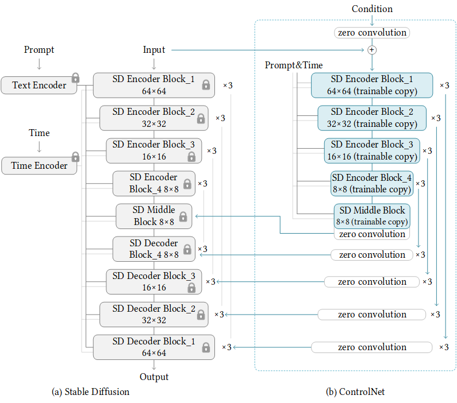

Stable Diffusion ControlNet Pipeline

Step 1: Environment preparation

First, please follow the method below to prepare your development environment. You can choose to download the model from HuggingFace for a better runtime experience. In this case, we choose ControlNet for the canny image task.

* Please note that diffusers starts to use `torch.nn.functional.scaled_dot_product_attention` if your installed torch version is >= 2.0, and ONNX does not support op conversion for “aten::scaled_dot_product_attention”. To avoid errors during model conversion with “torch.onnx.export”, please make sure you are using torch==1.13.1.
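A quick guard for the version constraint above can be written in a few lines. This is a minimal sketch, assuming the version string follows the usual `major.minor.patch` layout with an optional local suffix; the helper name `uses_scaled_dot_product_attention` is hypothetical and not part of the demo.

```python
def uses_scaled_dot_product_attention(torch_version: str) -> bool:
    """Return True when the major version is 2 or higher, e.g. "2.0.1+cu118"."""
    major = int(torch_version.split(".")[0])
    return major >= 2


# With torch installed, the string would come from torch.__version__.
for version in ("1.13.1", "2.0.1+cu118"):
    if uses_scaled_dot_product_attention(version):
        print(version, "-> ONNX export of the attention op is expected to fail")
    else:
        print(version, "-> suitable for torch.onnx.export in this demo")
```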

Step 2: Model Conversion

The demo provides two programs. To convert the models to OpenVINO™ IR, you should use “get_model.py”. Please check the options of this script by:

In this case, let us choose a batch size larger than one to generate multiple images. Common vision generation applications have two concepts of batch:

- `batch_size`: Specifies the number of input prompts or negative prompts. This is used to generate N images from N prompts.

- `num_images_per_prompt`: Specifies the number of images that each prompt generates. This is used to generate M images from 1 prompt.

Thus, in a typical user application, you can use these two attributes in diffusers together to generate N*M images from N prompts with increasing random seed values. For example, if your base seed is 42, generating N(2)*M(2) images proceeds as follows:

- N=1, M=1: prompt_list[0], seed=42

- N=1, M=2: prompt_list[0], seed=43

- N=2, M=1: prompt_list[1], seed=42

- N=2, M=2: prompt_list[1], seed=43
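The enumeration above can be reproduced with a short sketch. The function name `plan_generations` and the placeholder prompts are illustrative assumptions; only the seed rule (base seed plus the image index within each prompt) is taken from the list.

```python
from typing import List, Tuple


def plan_generations(
    prompts: List[str], num_images_per_prompt: int, base_seed: int
) -> List[Tuple[str, int]]:
    """Return one (prompt, seed) pair per generated image."""
    plan = []
    for prompt in prompts:                      # N prompts (batch_size)
        for m in range(num_images_per_prompt):  # M images per prompt
            plan.append((prompt, base_seed + m))
    return plan


plan = plan_generations(["prompt A", "prompt B"], num_images_per_prompt=2, base_seed=42)
for prompt, seed in plan:
    print(prompt, seed)
```

Every prompt restarts from the base seed, so images that share an index across prompts also share a seed.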

In this case, let’s use N=2, M=1 as a quick example for demonstration, and thus use `--batch 2`. This script generates a static shape model by default. If you are using different values of N and M, please specify `--dynamic`.

Please check your current path and make sure the models below have been generated. Other ONNX files can be deleted to save space.

- controlnet-canny.<xml|bin>

- text_encoder.<xml|bin>

- unet_controlnet.<xml|bin>

- vae_decoder.<xml|bin>

* If ONNX or IR models already exist in your local path, the script will skip re-generating the ONNX/IR models. If you updated the PyTorch model or want to generate a model with a different shape, please remember to delete the existing ONNX and IR models.
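Cleaning up before a re-conversion can be scripted. The sketch below is not part of the demo: the helper names are hypothetical, and it assumes the ONNX files share the base names of the IR files listed above with an `.onnx` suffix.

```python
from pathlib import Path
from typing import List, Sequence

# Base names of the IR models listed above.
MODEL_NAMES = ("controlnet-canny", "text_encoder", "unet_controlnet", "vae_decoder")


def existing_model_files(model_dir: Path, names: Sequence[str] = MODEL_NAMES) -> List[Path]:
    """List ONNX/IR files that would make the conversion be skipped."""
    found = []
    for name in names:
        for suffix in (".onnx", ".xml", ".bin"):
            candidate = model_dir / f"{name}{suffix}"
            if candidate.exists():
                found.append(candidate)
    return found


def remove_stale_models(model_dir: Path, names: Sequence[str] = MODEL_NAMES) -> List[Path]:
    """Delete the existing ONNX/IR files and return the removed paths."""
    removed = existing_model_files(model_dir, names)
    for path in removed:
        path.unlink()
    return removed
```

For example, `remove_stale_models(Path("."), names=("unet_controlnet",))` would clear only the Unet files before the Unet is exported again.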

Step 3: Runtime pipeline test

The provided demo program `run_pipe.py` manually builds up the pipeline for StableDiffusionControlNet, following the original source of `diffusers.StableDiffusionControlNetPipeline`.

The difference is that we simplify the pipeline to the inference of 4 models with the OpenVINO™ runtime API, which ensures that model inference can be accelerated on Intel® CPU and GPU platforms.

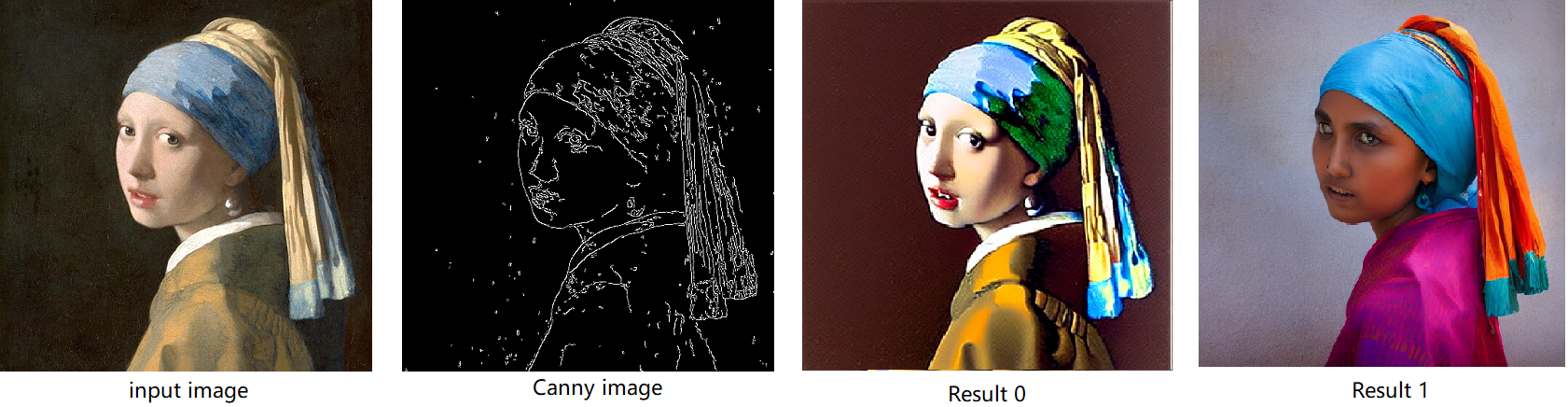

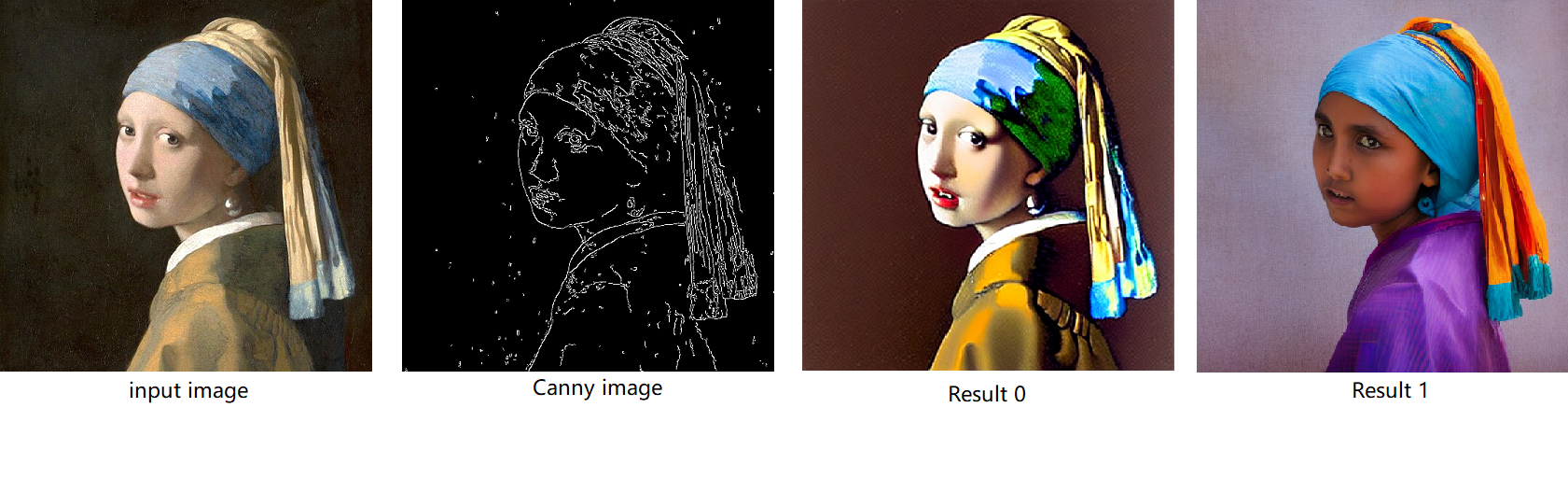

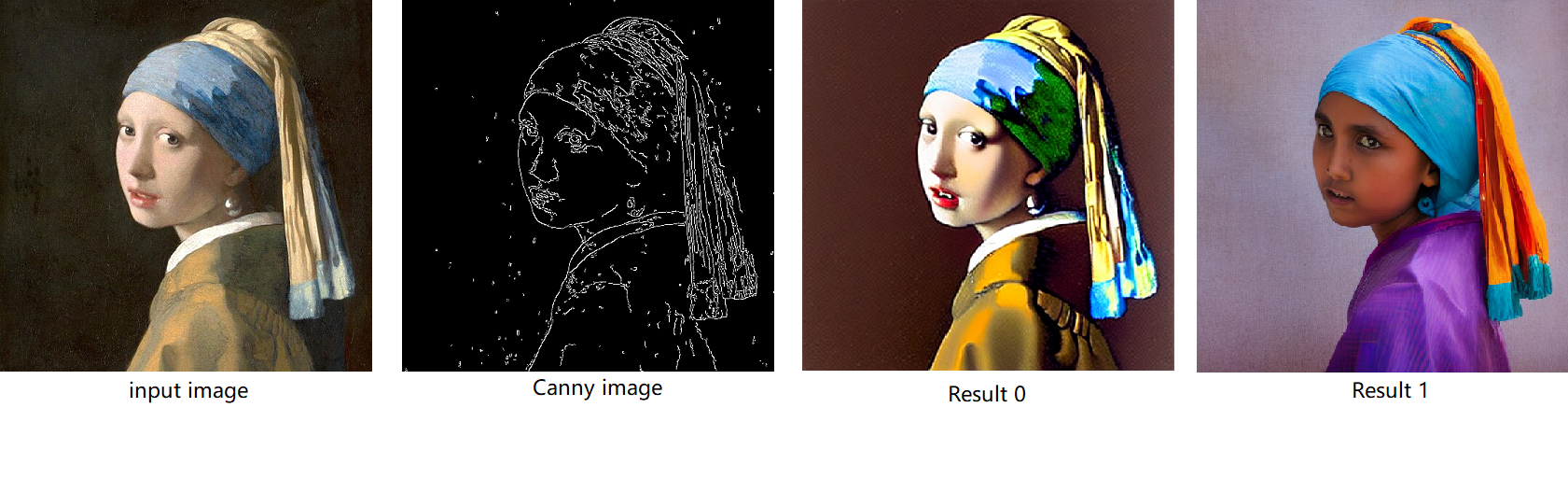

The default number of iterations is 20, the image shape is 512*512, the seed is 42, and the input image and prompt are for “Girl with Pearl Earring”. You can adjust or customize your own pipeline attributes for testing.

With batch_size=2, the generated images look like the ones below:

Enable LoRA weights for Stable Diffusion

Typical LoRA weights come in two types: one is `pytorch_lora_weights.bin`, the other uses safetensors. In this case, we introduce a method for each of these two types of LoRA weights.

The main idea for enabling LoRA weights is to append the weights onto the original Unet model of Stable Diffusion, then export the IR model of the Unet, which retains the LoRA weights.

There are various LoRA models on https://civitai.com/tag/lora. We choose some public models on HuggingFace as examples; you can replace them with your own.

Step 4-1: Enable LoRA by pytorch_lora_weights.bin

This step introduces the method to add LoRA weights to the Unet model of Stable Diffusion with the `pipe.unet.load_attn_procs(...)` function. In this way, the LoRA weights are loaded into the attention layers of the Unet model of Stable Diffusion.

* Remember to delete the existing Unet model in order to generate the new IR with LoRA weights.

Then, run the pipeline inference program to check the results.

The Stable Diffusion model with LoRA weights appended, combined with the ControlNet pipeline, can generate images like the one below:

Step 4-2: Enable LoRA by safetensors typed weights

This step introduces the method to add LoRA weights to the Stable Diffusion Unet model with `diffusers/scripts/convert_lora_safetensor_to_diffusers.py`. Diffusers provides this script to generate a new Stable Diffusion model with safetensors-typed LoRA weights merged in. With this method, you need to change the weight path to point to the newly generated Stable Diffusion model with LoRA. You can adjust the value of the `alpha` option to change the merging ratio in `W = W0 + alpha * deltaW` for the attention layers.

Then, run the pipeline inference program to check the results.

The SD model with LoRA weights appended, combined with the ControlNet pipeline, can generate images like the one below:

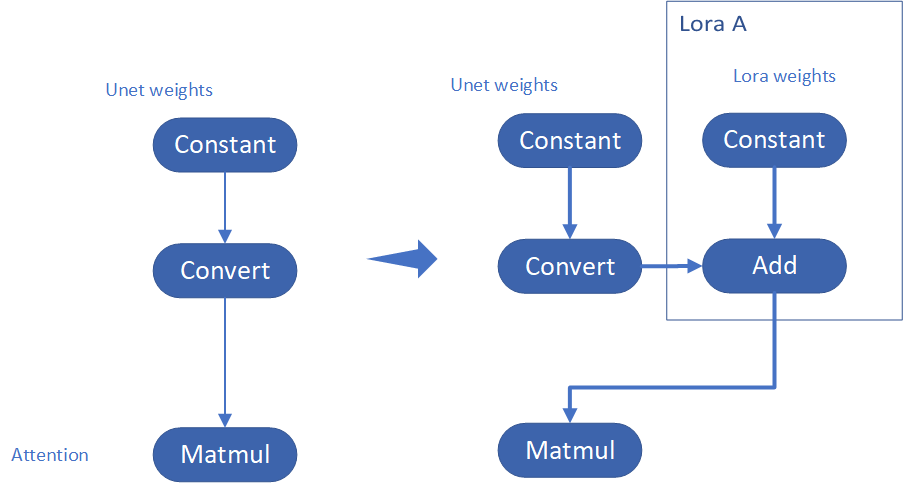

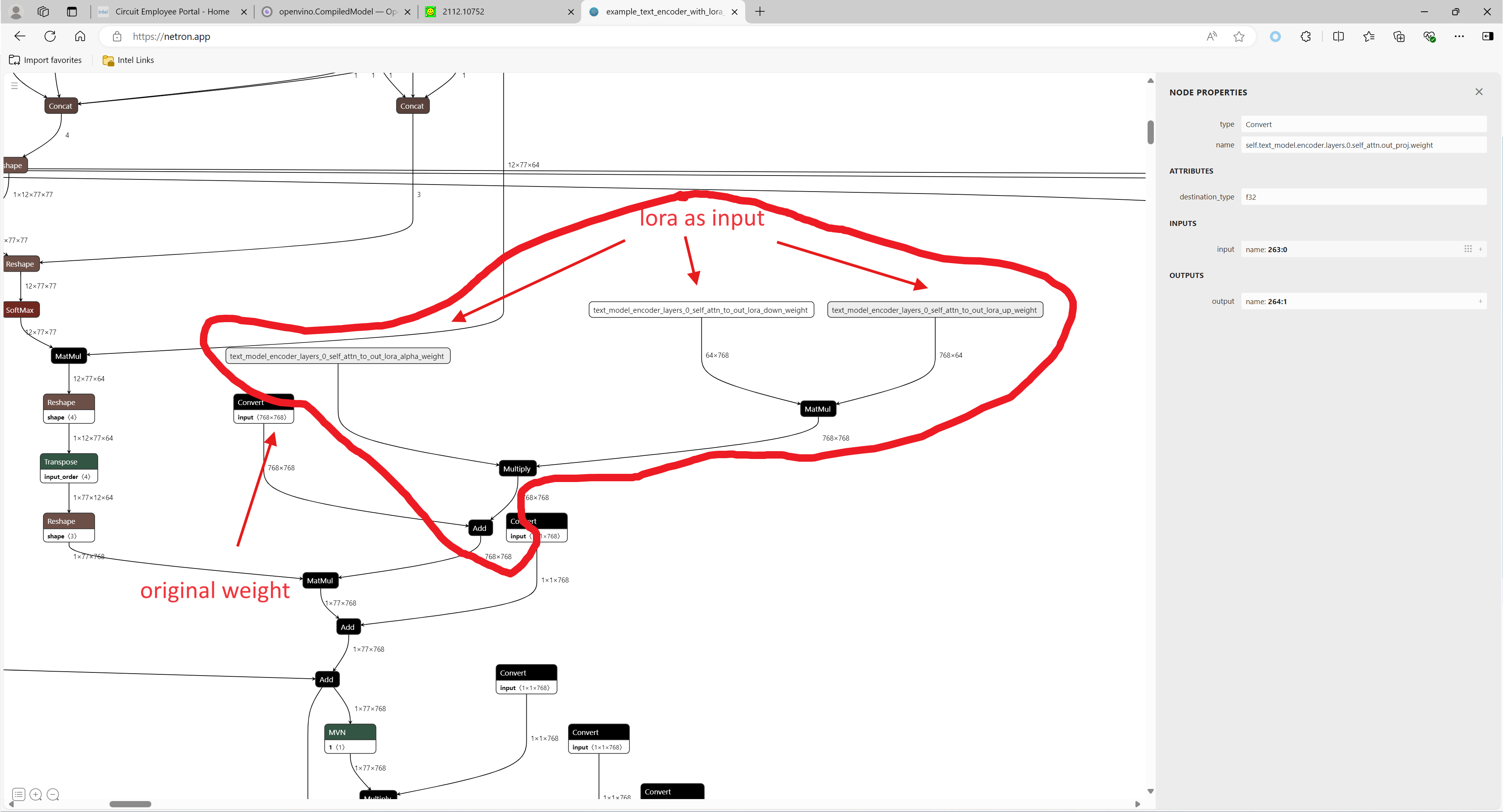

Step 4-3: Enable runtime LoRA merging by MatcherPass

This step introduces the method to add LoRA weights at runtime, before the Unet or text_encoder model is compiled. It is helpful for client applications that use multiple different LoRA weights to change the image style while reusing the same Unet/text_encoder structure.

This method extracts the LoRA weights from the safetensors file, finds the corresponding weights in the Unet model, and inserts the LoRA weight bias. The common way to add LoRA weights is:

W = W0 + W_bias, where W_bias = alpha * torch.mm(lora_up, lora_down)
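To make the formula concrete, here is a tiny numeric sketch in plain Python (no torch), with a rank-1 LoRA on a 2x2 weight. The helper names `matmul` and `merge_lora` and all values are made up for illustration.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product, standing in for torch.mm."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w0: Matrix, lora_up: Matrix, lora_down: Matrix, alpha: float) -> Matrix:
    """Compute W = W0 + alpha * (lora_up @ lora_down)."""
    delta = matmul(lora_up, lora_down)  # [out, r] x [r, in] -> [out, in]
    return [
        [w + alpha * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(w0, delta)
    ]


w0 = [[1.0, 0.0], [0.0, 1.0]]
lora_up = [[1.0], [2.0]]    # shape [2, 1], rank r = 1
lora_down = [[3.0, 4.0]]    # shape [1, 2]
merged = merge_lora(w0, lora_up, lora_down, alpha=0.5)
print(merged)
```

The low-rank product has the same shape as W0, so the bias can be added element-wise regardless of the rank r.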

I insert an Add operation for the attention weights of the Unet with OpenVINO™ `opset10.add(W0,W_bias)`. The original attention weights in the Unet model are loaded by a `Const` op, and the common processing path is `Const->Convert->Matmul->...`. To add the LoRA weights, we insert the calculated LoRA weight bias so that the path becomes `Const->Convert->Add->Matmul->...`. In this function, we adopt `openvino.runtime.passes.MatcherPass` to insert `opset10.add()` iteratively through the call_back() function.

The custom transformation first inserts the Add operations. Then, when the model is compiled for the device, the graph applies constant folding to combine the Add operation with the weights of the following MatMul operation, which optimizes runtime inference. Thus, this is an effective method to merge LoRA weights into the original model.
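The effect of constant folding on the inserted Add can be shown with a toy expression graph. This is a conceptual sketch in plain Python, not OpenVINO's ConstantFolding pass: the node classes and `fold_constants` are hypothetical, and the Convert step is left out for brevity.

```python
from dataclasses import dataclass
from typing import List, Union

Matrix = List[List[float]]


@dataclass
class Const:
    value: Matrix


@dataclass
class Param:
    name: str


@dataclass
class Add:
    lhs: "Node"
    rhs: "Node"


@dataclass
class MatMul:
    lhs: "Node"
    rhs: "Node"


Node = Union[Const, Param, Add, MatMul]


def add_matrices(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def fold_constants(node: Node) -> Node:
    """Replace every Add whose inputs are both Const with a single Const."""
    if isinstance(node, (Add, MatMul)):
        lhs = fold_constants(node.lhs)
        rhs = fold_constants(node.rhs)
        if isinstance(node, Add) and isinstance(lhs, Const) and isinstance(rhs, Const):
            return Const(add_matrices(lhs.value, rhs.value))
        return type(node)(lhs, rhs)
    return node


w0 = Const([[1.0, 2.0], [3.0, 4.0]])
w_bias = Const([[0.5, 0.0], [0.0, 0.5]])
graph = MatMul(Param("x"), Add(w0, w_bias))  # x -> MatMul(x, W0 + W_bias)
folded = fold_constants(graph)
print(folded)
```

After folding, the MatMul reads a single constant, so the inserted Add adds no work at inference time.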

You can check the implementation source code for the definition of the MatcherPass class called `InsertLoRA(MatcherPass)`:

The `InsertLoRA(MatcherPass)` pass is registered by `manager.register_pass(InsertLoRA(lora_dict_list))` and invoked by `manager.run_passes(ov_unet)`. After this runtime MatcherPass operation, the graph is compiled with the device plugin and is ready for inference.

Run the pipeline inference program to check the results. The result is the same as in Step 4-2.

The Stable Diffusion model with LoRA weights appended, combined with the ControlNet pipeline, can generate images like the one below:

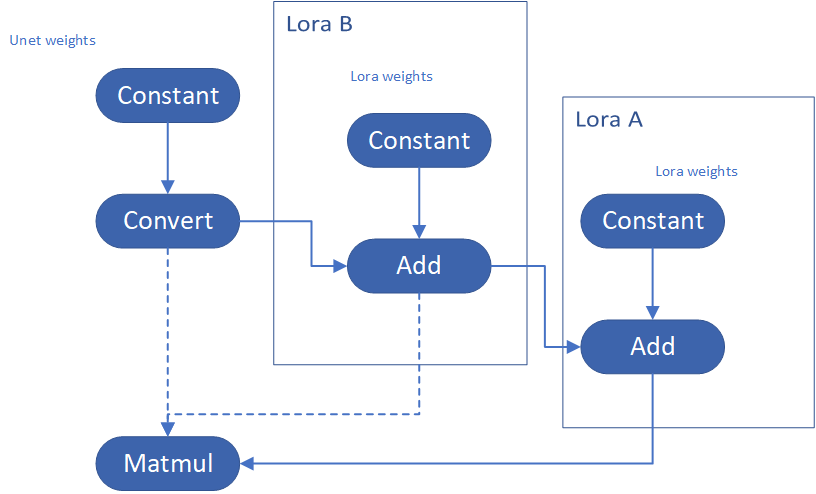

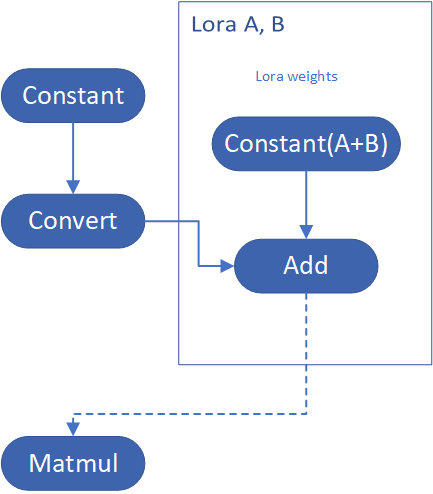

Step 4-4: Enable multiple LoRA weights

There are many different methods to add multiple LoRA weights. I list two methods here. Assume you have two LoRA weights, LoRA A and LoRA B. You can simply follow Step 4-3 and loop the MatcherPass function to insert LoRA B between the original Unet Convert layer and the Add layer of LoRA A. This is easy to implement, but it does not perform well.

Please consider the logic of the MatcherPass function. This function must filter out all layers of the Convert type, then check, for each Convert layer connected to a weights Constant, whether it has been fine-tuned and updated in the LoRA weights file. Most of the cost of enabling LoRA comes from the InsertLoRA() function, so the main idea is to invoke InsertLoRA() only once while appending the weights of multiple LoRA files.

With this method, the cost of appending two or more LoRA weights is almost the same as adding one LoRA weight.

Now, let's switch the Stable Diffusion model to dreamlike-anime-1.0 to generate images in an animation style. I pick two LoRA weights for SD 1.5 from https://civitai.com/tag/lora.
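The single-pass idea can be sketched in plain Python. This is an illustration under assumptions, not the demo's `InsertLoRA` code: the layer names, the dictionary layout, and the choice to pre-sum the biases per layer are all hypothetical. It only shows that the layers are traversed once, however many LoRA files are merged.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def add_matrices(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def combine_lora_biases(lora_list: List[Dict[str, Matrix]]) -> Dict[str, Matrix]:
    """Sum the per-layer biases of several LoRA files into one lookup table."""
    combined: Dict[str, Matrix] = {}
    for lora in lora_list:
        for layer, bias in lora.items():
            if layer in combined:
                combined[layer] = add_matrices(combined[layer], bias)
            else:
                combined[layer] = [row[:] for row in bias]
    return combined


def apply_once(
    weights: Dict[str, Matrix], combined: Dict[str, Matrix]
) -> Tuple[Dict[str, Matrix], int]:
    """Visit every layer exactly once and add its combined bias, if any."""
    visited = 0
    updated = {}
    for layer, w in weights.items():
        visited += 1
        bias = combined.get(layer)
        updated[layer] = w if bias is None else add_matrices(w, bias)
    return updated, visited


lora_a = {"attn.to_q": [[1.0, 0.0]]}
lora_b = {"attn.to_q": [[0.5, 0.5]], "attn.to_k": [[2.0, 2.0]]}
weights = {"attn.to_q": [[1.0, 1.0]], "attn.to_k": [[0.0, 0.0]], "attn.to_v": [[3.0, 3.0]]}

updated, visited = apply_once(weights, combine_lora_biases([lora_a, lora_b]))
print(updated, visited)
```

The number of visited layers equals the number of layers in the model, not that number multiplied by the number of LoRA files.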

- soulcard: https://civitai.com/models/67927?modelVersionId=72591

- epi_noiseoffset: https://civitai.com/models/13941/epinoiseoffset

You probably need to do prompt engineering work to generate a useful prompt like below:

- prompt: "1girl, cute, beautiful face, portrait, cloudy mountain, outdoors, trees, rock, river, (soul card:1.2), highly intricate details, realistic light, trending on cgsociety,neon details, ultra realistic details, global illumination, shadows, octane render, 8k, ultra sharp"

- Negative prompt: "3d, cartoon, lowres, bad anatomy, bad hands, text, error"

- Seed: 0

- num_steps: 30

- canny low_threshold: 100

You can get a wonderful image of an animated girl with the typical soulcard border, like the one below:

Notices & Disclaimers

Intel technologies may require enabled hardware, software, or service activation.

No product or component can be absolutely secure.

Your costs and results may vary.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

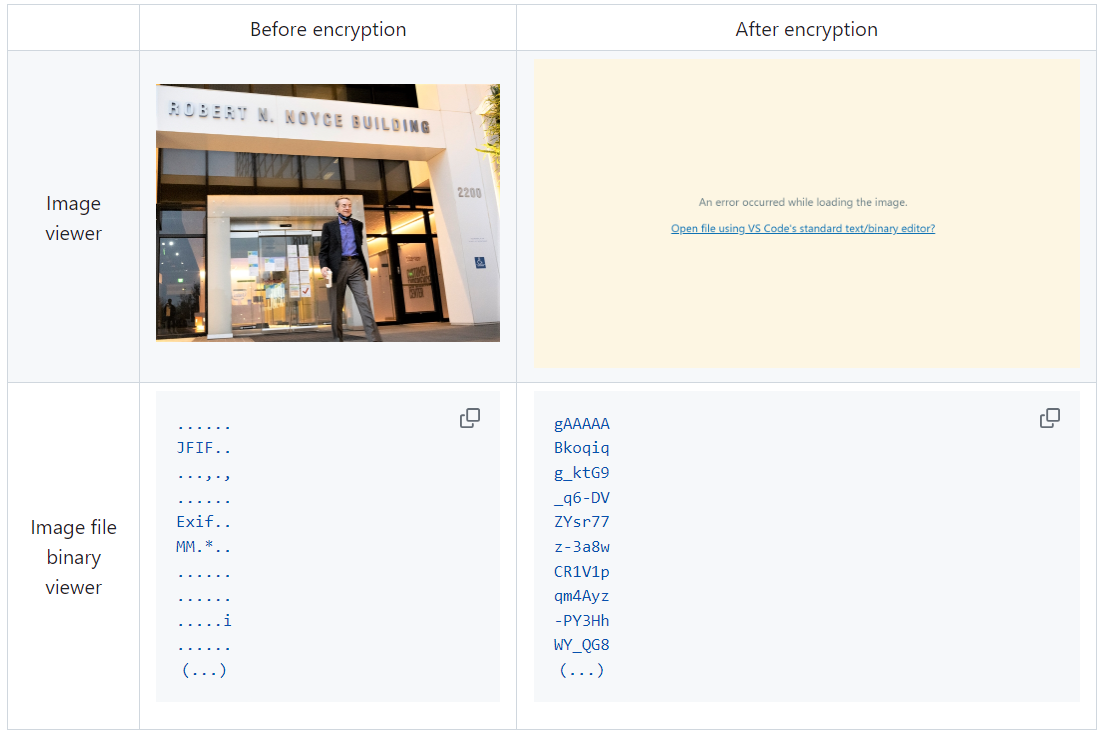

Encrypt Your Dataset and Train Your Model with It Directly

Introduction