AquilaChat-7B Language Model Enabling with Hugging Face Optimum Intel

Introduction

What is AquilaChat-7B Language Model?

Aquila is a family of open-source large language models (LLMs) developed by the Beijing Academy of Artificial Intelligence (BAAI). The models support both Chinese and English, are released under a license that permits commercial use, and comply with Chinese domestic data regulations.

AquilaChat-7B is a conversational language model that supports Chinese and English dialogue. It is based on the Aquila-7B foundation model and fine-tuned with supervised fine-tuning (SFT). The original AquilaChat-7B PyTorch model and configurations are publicly available here.

Hugging Face Optimum Intel

Hugging Face is one of the most popular open-source data science and machine learning platforms. It acts as a hub for AI experts and enthusiasts, like a GitHub for AI. Over 200,000 models are available across the natural language processing, multimodal, computer vision, and audio domains.

Hugging Face provides broad support for optimizing and deploying open-source LLMs such as LLaMA, Bloom, GPT-NeoX, and Dolly 2.0, to name a few. For more details, please refer to the Open LLM Leaderboard.

Optimum-Intel provides a simple interface between the Hugging Face and OpenVINO™ ecosystems to leverage high-performance inference on Intel architecture. Here is a simple example that shows how to run Dolly 2.0 models with OVModelForCausalLM using the OpenVINO™ runtime.
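A minimal sketch of such an example, assuming `transformers` and `optimum-intel` (with the OpenVINO™ extra) are installed. The checkpoint name `databricks/dolly-v2-3b`, the prompt, and the helper names `load_causal_lm` and `run_demo` are illustrative assumptions. Heavy imports are deferred into the function so the backend switch is easy to see.

```python
def load_causal_lm(model_id: str, backend: str = "openvino"):
    """Load a tokenizer and a causal LM with the chosen inference backend."""
    if backend not in ("pytorch", "openvino"):
        raise ValueError(f"unknown backend: {backend!r}")

    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    if backend == "pytorch":
        from transformers import AutoModelForCausalLM

        model = AutoModelForCausalLM.from_pretrained(model_id)
    else:
        # The two lines that differ from the PyTorch path:
        # the import and the model class used for loading.
        from optimum.intel import OVModelForCausalLM

        # export=True converts the PyTorch checkpoint to OpenVINO IR on load.
        model = OVModelForCausalLM.from_pretrained(model_id, export=True)
    return tokenizer, model


def run_demo():
    """Illustrative usage; downloads the (assumed) checkpoint on first call."""
    tokenizer, model = load_causal_lm("databricks/dolly-v2-3b", backend="openvino")
    inputs = tokenizer("What is OpenVINO?", return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=64)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `run_demo()` runs generation on the OpenVINO™ runtime; passing `backend="pytorch"` to `load_causal_lm` keeps everything else unchanged.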

So, for LLMs already supported by Hugging Face Transformers and Optimum, we can smoothly switch the inference backend from PyTorch to OpenVINO™ by changing only two lines of code.

However, what if an LLM from the open-source community is not natively supported by the Hugging Face Transformers library? How can we still leverage the Hugging Face and OpenVINO™ ecosystems for model optimization and deployment?

AquilaChat-7B is exactly such a custom model for Hugging Face Transformers, so we use it as an example to walk through the custom model enabling methodology step by step.

How to Enable a Custom Model on Hugging Face?

To leverage the Hugging Face ecosystem and its optimizations for the AquilaChat-7B model, we need to convert the original PyTorch model to Hugging Face format. Before we dive into the conversion details, we need to understand AquilaChat-7B’s model structure, tokenizer, and configurations.

According to Aquila’s official model description:

“The Aquila language model inherits the architectural design advantages of GPT-3 and LLaMA, replacing a batch of more efficient underlying operator implementations and redesigning the tokenizer for Chinese-English bilingual support. The Aquila language model is trained from scratch on high-quality Chinese and English corpora.”

Model Structure and Tokenizer

For model structure, Aquila adopts the original Meta LLaMA PyTorch implementation, which combines pre-normalization with RMSNorm (an idea inspired by GPT-3) to improve training stability with Rotary Position Embedding (as used in GPT-NeoX) to incorporate explicit relative position dependency in self-attention.

For the tokenizer, instead of using the byte-pair encoding (BPE) algorithm implemented by SentencePiece, Aquila re-trained the Hugging Face GPT-NeoX tokenizer with an extended vocabulary (vocab_size=100008, including 8 special tokens; for example, bos_token=100006, eos_token=100007, unk=0, and pad=0 are used for inference, as configured here).
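To make the normalization step concrete, here is a small pure-Python sketch of RMSNorm. It is not Aquila's or LLaMA's actual code; the function name `rms_norm` and the `eps` default are assumptions chosen for illustration. The input is divided by its root mean square and then scaled by a learned per-feature gain, with no mean subtraction.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Scale x by the reciprocal of its root mean square, then by a learned gain."""
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)  # eps guards against division by zero
    return [w * v * inv_rms for v, w in zip(x, weight)]


hidden = [3.0, -4.0, 0.0, 0.0]   # toy hidden state; RMS = sqrt(25 / 4) = 2.5
gain = [1.0] * len(hidden)       # identity gain for the example
normed = rms_norm(hidden, gain)
```

With an identity gain, the output has a root mean square of approximately 1, which is the property that helps keep activations in a stable range during training.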

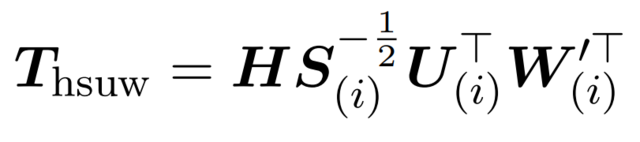

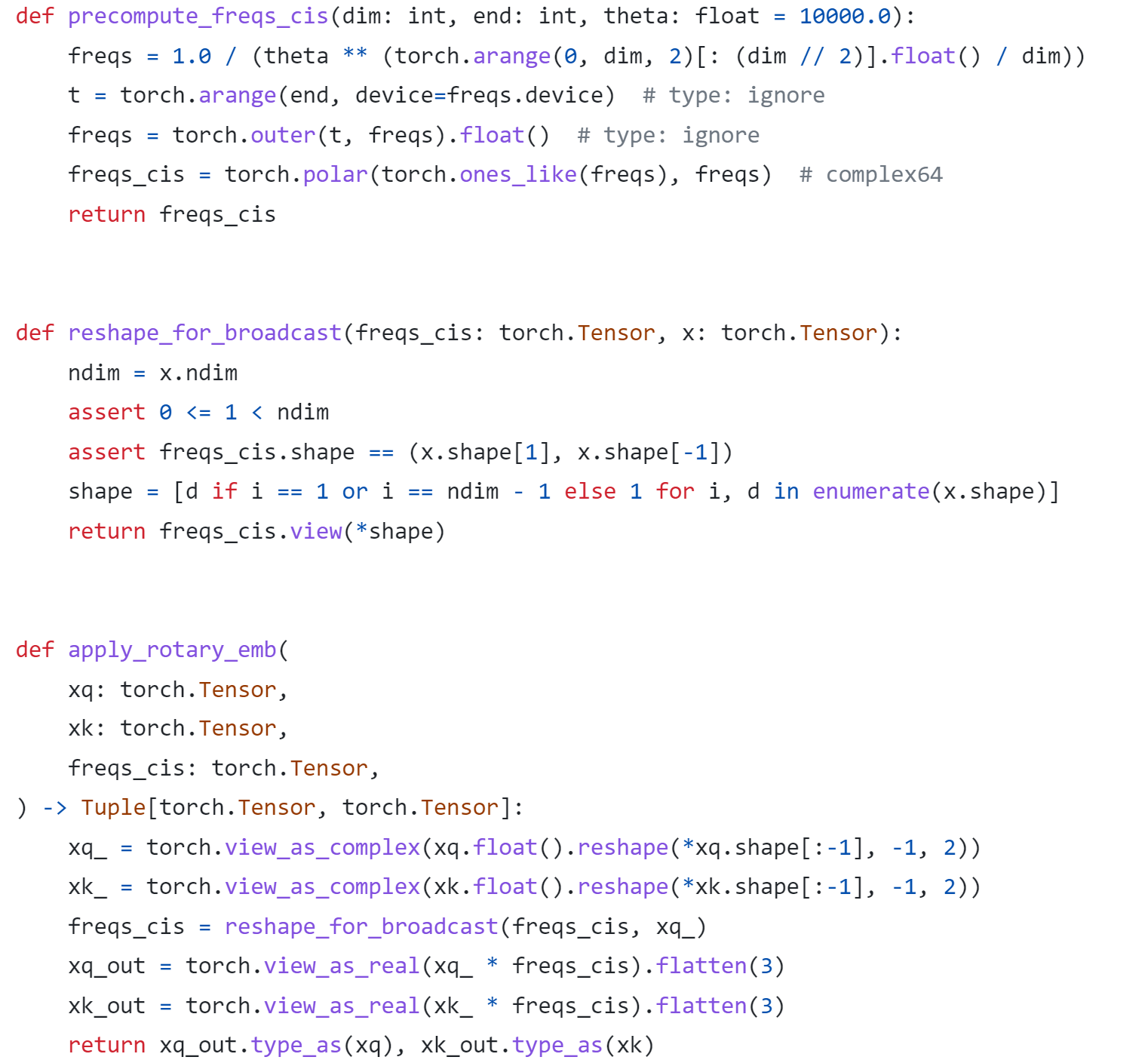

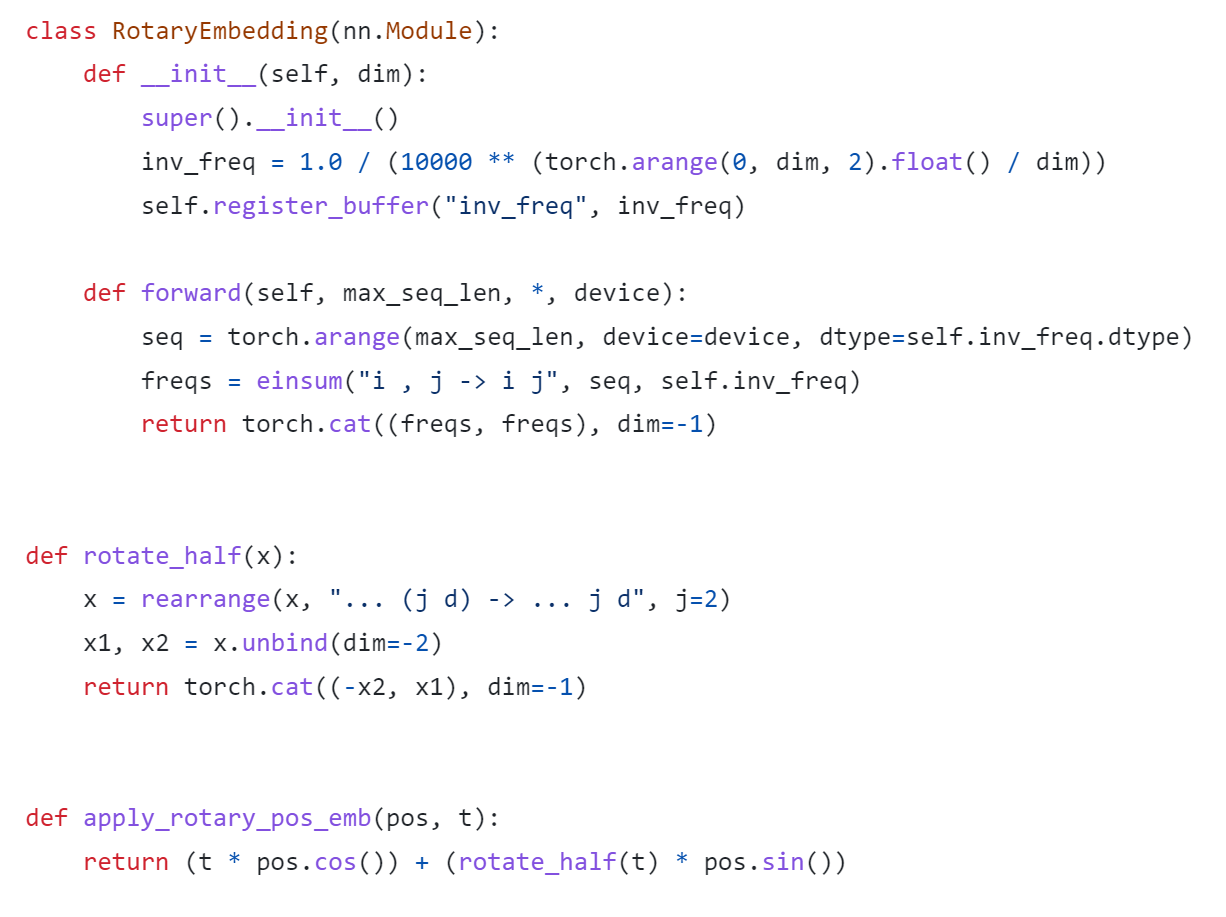

Rotary Position Embedding

Rotary Position Embedding (RoPE) encodes the absolute position with a rotation matrix while incorporating the explicit relative position dependency in the self-attention formulation. Compared with other position embedding methods, RoPE provides valuable properties such as flexibility of sequence length, long-term decay, and the ability to equip linear self-attention with relative position embedding. Based on the original paper, there are two mainstream implementations of RoPE:

As shown in Figure 3, Meta LLaMA’s implementation directly uses complex numbers to calculate the rotary position embedding.

As shown in Figure 4, Google PaLM’s implementation expands the complex-number operation and calculates the sinusoidal functions as a matrix equation over real numbers.

Both RoPE implementations are valid for the PyTorch model. The Hugging Face LLaMA implementation adopts PaLM’s RoPE implementation because ONNX export has limited support for complex types.
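The equivalence of the two formulations can be checked numerically. The following pure-Python sketch is an illustration, not the Meta or Hugging Face code: the function names, the toy vectors, and the base of 10000 are assumptions. For a direct comparison, the real-valued version keeps the same interleaved pairing of features as the complex version. The last lines also check the relative-position property: the attention score depends only on the distance between the two positions.

```python
import cmath
import math


def rope_angles(position, dim, base=10000.0):
    """One rotation angle per feature pair: position * base^(-2i/dim)."""
    return [position * base ** (-2.0 * i / dim) for i in range(dim // 2)]


def rope_complex(x, position):
    """Treat (x[2i], x[2i+1]) as a complex number and multiply by e^{j*angle}."""
    out = []
    for i, angle in enumerate(rope_angles(position, len(x))):
        z = complex(x[2 * i], x[2 * i + 1]) * cmath.exp(1j * angle)
        out += [z.real, z.imag]
    return out


def rope_real(x, position):
    """The same rotation expanded into real sin/cos arithmetic (no complex type)."""
    out = []
    for i, angle in enumerate(rope_angles(position, len(x))):
        a, b = x[2 * i], x[2 * i + 1]
        c, s = math.cos(angle), math.sin(angle)
        out += [a * c - b * s, a * s + b * c]
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.5, 0.7, 0.4]

# Both formulations produce the same rotated vector.
max_diff = max(abs(u - v) for u, v in zip(rope_complex(q, 7), rope_real(q, 7)))

# Scores for position pairs with the same offset (3) agree.
score_near = dot(rope_real(q, 5), rope_real(k, 2))
score_far = dot(rope_real(q, 105), rope_real(k, 102))
```

Because the real-valued form needs only multiplications, additions, sines, and cosines, it avoids complex tensors entirely, which is what makes it friendlier to export.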

In addition, Hugging Face provides a useful script, convert_llama_weights_to_hf.py, to convert the original Meta LLaMA PyTorch model to Hugging Face format as follows:

- Extract the PyTorch weights and convert the Meta LLaMA RoPE implementation to the Hugging Face RoPE implementation.

- Convert the tokenizer.model trained with SentencePiece to a Hugging Face LLaMA tokenizer.
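The RoPE conversion in the first step can be understood as a row permutation of the query and key projection weights. The complex-number form rotates neighboring feature pairs (x[2i], x[2i+1]), while a rotate-half form rotates pairs (x[i], x[i + d/2]). The sketch below illustrates the idea for a single attention head in pure Python; it is not the script's code, and all names and toy values are assumptions.

```python
import math


def rope_interleaved(x, angles):
    """Rotate pairs (x[2i], x[2i+1]), the layout of the complex-number form."""
    out = []
    for i, t in enumerate(angles):
        a, b = x[2 * i], x[2 * i + 1]
        out += [a * math.cos(t) - b * math.sin(t), a * math.sin(t) + b * math.cos(t)]
    return out


def rope_rotate_half(x, angles):
    """Rotate pairs (x[i], x[i + d/2]), the half-split layout."""
    h = len(x) // 2
    first = [x[i] * math.cos(t) - x[i + h] * math.sin(t) for i, t in enumerate(angles)]
    second = [x[i + h] * math.cos(t) + x[i] * math.sin(t) for i, t in enumerate(angles)]
    return first + second


def to_half_split(rows):
    """Reorder one head's rows: even indices first, then odd indices."""
    return rows[0::2] + rows[1::2]


def matvec(w, v):
    return [sum(a * b for a, b in zip(row, v)) for row in w]


# Toy projection for one head: head_dim = 4, input dim = 2.
w_q = [[0.1, 0.2], [0.3, -0.4], [0.5, 0.6], [-0.7, 0.8]]
hidden = [1.0, 2.0]
angles = [0.9, 0.05]  # one angle per feature pair at some position

original_q = rope_interleaved(matvec(w_q, hidden), angles)
converted_q = rope_rotate_half(matvec(to_half_split(w_q), hidden), angles)

# The converted query equals the original query up to the same reordering.
mismatch = max(abs(a - b) for a, b in zip(converted_q, to_half_split(original_q)))
```

Since the query and key weights are permuted identically, the dot product between a rotated query and a rotated key is unchanged, so attention scores are preserved after conversion.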

Convert AquilaChat-7B Model to Hugging Face Format

Similarly, we provide a script, convert_aquila_weights_to_hf.py, to convert the AquilaChat-7B model to Hugging Face format:

- Extract the PyTorch weights and convert the Aquila RoPE implementation to the Hugging Face RoPE implementation.

- Initialize and save a Hugging Face GPT-NeoX tokenizer with the extended vocabulary, based on the original tokenizer configurations provided by Aquila.

- Add a modeling_aquila.py to enable support for AutoModelForCausalLM and AutoTokenizer.
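For the last step, Hugging Face Transformers locates custom model code through an `auto_map` entry in `config.json`, which is honored when the model is loaded with `trust_remote_code=True`. The sketch below builds such a fragment in Python. The class name `AquilaForCausalLM` and the helper `build_config_patch` are hypothetical and may not match the converted model; the vocabulary size and special token IDs are the values quoted earlier in this post.

```python
import json


def build_config_patch(module="modeling_aquila", class_name="AquilaForCausalLM"):
    """Return config.json fields that point the Auto class at custom code.

    class_name is a hypothetical placeholder; use the class actually
    defined in modeling_aquila.py.
    """
    return {
        "auto_map": {"AutoModelForCausalLM": f"{module}.{class_name}"},
        "vocab_size": 100008,
        "bos_token_id": 100006,
        "eos_token_id": 100007,
        "pad_token_id": 0,
    }


patch = build_config_patch()
print(json.dumps(patch, indent=2))
```

These fields would be merged into the model's existing `config.json` rather than replacing it.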

Here is the converted Hugging Face version of the AquilaChat-7B v0.6 model, uploaded to Hugging Face.

You can convert the PyTorch weights to Hugging Face format in two steps:

- Download the AquilaChat-7B PyTorch model and configurations here.

- Convert the AquilaChat-7B PyTorch model and configurations to Hugging Face format.

Hugging Face AquilaChat-7B Demo

Setup Environment

Run inference with AutoModelForCausalLM
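
A minimal sketch of this step, assuming `transformers` and PyTorch are installed and that `model_dir` points to a local directory (or hub ID) holding the converted model. The helper names `load_aquila` and `chat` are illustrative, and `trust_remote_code=True` is assumed to be needed because the model relies on the custom modeling_aquila.py.

```python
def load_aquila(model_dir: str):
    """Load the converted tokenizer and model with the PyTorch backend."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # trust_remote_code lets Transformers import the custom modeling code.
    tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(model_dir, trust_remote_code=True)
    return tokenizer, model


def chat(tokenizer, model, prompt: str, max_new_tokens: int = 64) -> str:
    """Tokenize the prompt, generate a continuation, and decode it to text."""
    inputs = tokenizer(prompt, return_tensors="pt")
    # Only input_ids are passed, to avoid forwarding keys the model may not accept.
    output_ids = model.generate(inputs["input_ids"], max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `tokenizer, model = load_aquila(model_dir)` followed by `chat(tokenizer, model, "Hello")` returns the decoded text, which includes the prompt.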

Run inference with OVModelForCausalLM
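
A minimal sketch of this step, assuming `optimum-intel` with the OpenVINO™ extra is installed. The helpers `needs_export` and `load_aquila_openvino` are illustrative names. The sketch exports to OpenVINO™ IR only when no `openvino_model.xml` is found in the model directory. Whether the export succeeds for a custom architecture depends on the installed Optimum-Intel version recognizing the model type, so treat this as a starting point.

```python
from pathlib import Path

# File name Optimum-Intel uses for the saved OpenVINO IR.
OV_IR_NAME = "openvino_model.xml"


def needs_export(model_dir: str) -> bool:
    """True when the directory has no OpenVINO IR yet and must be converted."""
    return not (Path(model_dir) / OV_IR_NAME).exists()


def load_aquila_openvino(model_dir: str):
    """Load the converted model with the OpenVINO runtime as the backend."""
    from optimum.intel import OVModelForCausalLM
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
    model = OVModelForCausalLM.from_pretrained(
        model_dir,
        export=needs_export(model_dir),  # convert from PyTorch only if needed
        trust_remote_code=True,
    )
    return tokenizer, model
```

The returned model exposes the same `generate` interface as the PyTorch one, so the generation code does not need to change. Calling `model.save_pretrained(...)` after the first export stores the IR so later loads can skip conversion.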

Conclusion

In this blog, we show how to convert a custom large language model (LLM) to Hugging Face format to leverage efficient optimization and deployment with the Hugging Face and OpenVINO™ ecosystems.

Please note that this is the initial model enabling step for the AquilaChat-7B model with OpenVINO™. We will continue to optimize performance as OpenVINO™ is upgraded for LLM scaling. Please refer to the official OpenVINO™ and Optimum-Intel releases for the latest efficient LLM support with the OpenVINO™ backend.

Reference

- FlagAI AquilaChat-7B

- AquilaChat-7B Hugging Face Model

- Hugging Face Optimum Intel

- LLaMA: Open and Efficient Foundation Language Models

- RoFormer: Enhanced Transformer with Rotary Position Embedding

- Rotary Embeddings: A Relative Revolution
