Enabling the MiniCPM-V-2 model with OpenVINO™

Introduction

MiniCPM is an end-side LLM developed by ModelBest Inc. and TsinghuaNLP. MiniCPM-V is a series of end-side multimodal LLMs (MLLMs) designed for vision-language understanding. The models take an image and text as input and produce high-quality text output. MiniCPM-V 2.0 is an efficient version with promising performance for deployment. The model is built on SigLip-400M and MiniCPM-2.4B, connected by a perceiver resampler. In this blog post, we provide the OpenVINO™ optimization for MiniCPM-V 2.0 on Intel® platforms.
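
To make the role of the perceiver resampler more concrete, the sketch below shows the core idea in plain Python: a fixed set of query vectors cross-attends over a variable number of image tokens, so the language model always receives the same number of visual tokens. This is a simplified illustration and not the MiniCPM-V implementation. It assumes a single attention head with no learned projections or normalization, and the names `softmax` and `resample` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def resample(queries, image_tokens):
    """Cross-attend each query over all image tokens.

    queries:      list of Q vectors (stand-ins for learned latents)
    image_tokens: list of N vectors from the vision encoder
    Returns Q vectors, regardless of N.
    """
    dim = len(queries[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in queries:
        # Scaled dot-product score of this query against every image token.
        scores = [
            scale * sum(q * t for q, t in zip(query, token))
            for token in image_tokens
        ]
        weights = softmax(scores)
        # Weighted average of the image tokens (the tokens act as values).
        outputs.append(
            [
                sum(w * token[i] for w, token in zip(weights, image_tokens))
                for i in range(dim)
            ]
        )
    return outputs
```

Calling `resample` with 4 queries returns 4 vectors whether the image produced 10 tokens or 50, which is the property that lets the vision encoder and the language model be connected with a fixed-size interface.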

You can find more information in the GitHub repository: https://github.com/OpenBMB/MiniCPM-V

OpenVINO™ backend on MiniCPM-V-2

Step 1: Install system dependencies and set up the environment

Create and activate a Python virtual environment

Clone the MiniCPM-V repository from GitHub

Change the current directory to the MiniCPM-V OpenVINO™ Runtime folder

Install Python dependencies
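
The four actions above can be sketched as a small Python helper that builds and runs the corresponding commands. This is a sketch under stated assumptions rather than the exact commands for this project: the location of the OpenVINO™ Runtime folder inside the clone is not given here, so it is passed in as `runtime_dir`, and the dependency file name `requirements.txt` is an assumed default. The helper names are hypothetical; only the repository URL comes from this post.

```python
import subprocess
import sys
from pathlib import Path

REPO_URL = "https://github.com/OpenBMB/MiniCPM-V"  # repository named in this post


def venv_python(env_dir):
    """Return the interpreter path inside a virtual environment."""
    env_dir = Path(env_dir)
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def setup_commands(env_dir, runtime_dir, requirements="requirements.txt"):
    """Build (argv, cwd) pairs for the setup steps.

    runtime_dir:  path to the OpenVINO Runtime folder in the clone
                  (assumption: supplied by the caller).
    requirements: dependency file name (assumption).
    """
    # Resolve to an absolute path so the interpreter is still found
    # after the working directory changes for the install step.
    env_dir = Path(env_dir).resolve()
    return [
        # Create the virtual environment with the current interpreter.
        ([sys.executable, "-m", "venv", str(env_dir)], None),
        # Clone the MiniCPM-V repository into the current directory.
        (["git", "clone", REPO_URL], None),
        # Install dependencies from inside the runtime folder, using the
        # environment's own interpreter (equivalent to activating it first).
        (
            [str(venv_python(env_dir)), "-m", "pip", "install", "-r", requirements],
            str(runtime_dir),
        ),
    ]


def run_setup(env_dir, runtime_dir, requirements="requirements.txt"):
    """Execute the setup steps in order, stopping at the first failure."""
    for argv, cwd in setup_commands(env_dir, runtime_dir, requirements):
        subprocess.run(argv, cwd=cwd, check=True)
```

Running the environment's interpreter directly with `-m pip` avoids depending on shell-specific activation scripts, which makes the same helper usable on Linux and Windows.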

Step 2: Export to OpenVINO™ models
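
The export script used for this step is not reproduced here. As a rough illustration of what such a step involves, the sketch below converts a PyTorch module to OpenVINO™ IR with `openvino.convert_model` and writes it with `openvino.save_model`. It is a generic sketch, not the project's script: the helper names `ir_paths` and `export_to_ir`, the output layout, and the choice to export one module at a time are assumptions. It requires the `openvino` package and a PyTorch module supplied by the caller.

```python
from pathlib import Path


def ir_paths(out_dir, name):
    """Return the two files that make up one OpenVINO IR model.

    The .xml file holds the graph and the .bin file holds the weights.
    """
    out_dir = Path(out_dir)
    return out_dir / f"{name}.xml", out_dir / f"{name}.bin"


def export_to_ir(torch_module, example_input, out_dir, name):
    """Convert a PyTorch module to OpenVINO IR and save it to disk."""
    # Imported lazily so ir_paths() works without OpenVINO installed.
    import openvino as ov

    xml_path, _ = ir_paths(out_dir, name)
    xml_path.parent.mkdir(parents=True, exist_ok=True)

    # example_input lets the converter trace the module with real shapes.
    ov_model = ov.convert_model(torch_module, example_input=example_input)

    # Writes <name>.xml and the matching <name>.bin next to it.
    ov.save_model(ov_model, str(xml_path))
    return xml_path
```

A multimodal model is typically made of several modules, so a helper like this would be called once per module, each with its own example input.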

Step 3: Simple inference test with OpenVINO™
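
The inference script for this step is not reproduced here. The sketch below shows only the generic OpenVINO™ Runtime pattern for loading a saved IR file and running it once: create a `Core`, pick a device, compile the model, and call it. It is not a full MiniCPM-V pipeline, which also needs image preprocessing, tokenization, and a text generation loop. The helper names and the default device preference (GPU first, then CPU) are assumptions.

```python
def pick_device(available, preferred=("GPU", "CPU")):
    """Pick the first available device matching the preference order.

    Device names may carry an index suffix such as "GPU.0", so only
    the part before the dot is compared.
    """
    for wanted in preferred:
        for device in available:
            if device.split(".")[0] == wanted:
                return device
    raise RuntimeError(f"none of {preferred} found in {list(available)}")


def run_once(xml_path, inputs, preferred=("GPU", "CPU")):
    """Compile an IR model and run a single inference request."""
    # Imported lazily so pick_device() works without OpenVINO installed.
    import openvino as ov

    core = ov.Core()
    device = pick_device(core.available_devices, preferred)
    compiled = core.compile_model(str(xml_path), device)

    # inputs: a NumPy array, or a dict mapping input names to arrays.
    results = compiled(inputs)
    return results[compiled.output(0)]
```

Passing `preferred=("CPU",)` forces CPU execution, which is a useful baseline when comparing results across devices.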

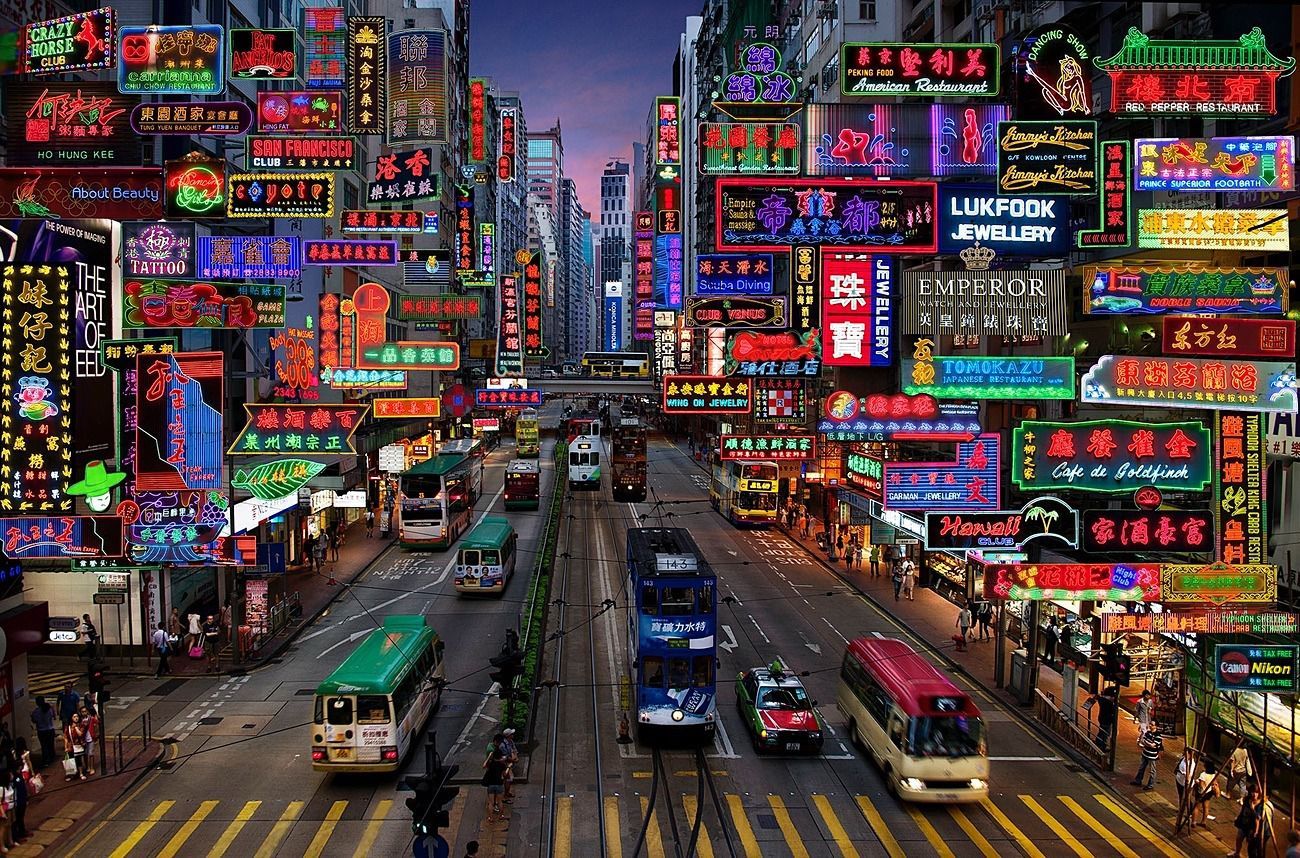

Question: Describe the content of the image

Answer:

The image captures the vibrant and bustling atmosphere of a busy city street in Hong Kong. The street is lined with an array of neon signs and billboards, each one advertising a different business or establishment. The signs are in a variety of languages, including English, Chinese, and Japanese, reflecting the multicultural nature of the city.

The street itself is a hive of activity with several buses and a tram making their way through the traffic. The vehicles are in motion, adding a dynamic element to the scene.

The sky above is a beautiful gradient of colors, transitioning from a deep blue at the top to a lighter shade at the bottom. This suggests that the photo was taken during either sunrise or sunset, casting a warm glow over the cityscape.

The image also contains several text elements, including the names of various establishments and the brand names of products. These texts add another layer of information to the scene, providing insights into the nature of the businesses and the products they offer.

Overall, the image provides a vivid snapshot of life in Hong Kong, capturing the city's vibrant energy and the diverse range of businesses and products that make up its bustling streets.
